AWS Batch 101: Guide to Scalable Batch Processing


Introduction

AWS Batch is a fully managed service from Amazon Web Services that enables you to run batch computing workloads of any scale on the cloud. It handles the heavy lifting of provisioning and managing the underlying compute resources, so you can focus on your batch jobs and analysis instead of infrastructure. In other words, AWS Batch plans, schedules, and executes your batch workloads by dynamically allocating the optimal quantity and type of compute resources based on your jobs’ needs. This means you don’t have to install or manage your own batch processing software or server cluster: AWS Batch takes care of it for you.

Batch computing typically involves executing a series of jobs (like scripts or executables) without manual intervention, often on large datasets or across many parallel tasks. AWS Batch excels at this by leveraging containerized workloads. Under the hood, it runs your jobs in Docker containers on AWS compute services such as Amazon ECS (Elastic Container Service), Amazon EKS (Elastic Kubernetes Service), or AWS Fargate (serverless containers). It can even utilize low-cost Spot instances to reduce costs. In this article, we’ll explain what AWS Batch is and how it works, cover its key components, walk through an AWS Batch example of submitting a job, discuss common AWS Batch use cases, compare AWS Batch vs AWS Lambda, clarify the difference between AWS Batch and Amazon S3 Batch Operations, and review AWS Batch pricing and cost optimization.

What is AWS Batch and How Does It Work?

AWS Batch is designed to run batch processing jobs: think of things like processing millions of records, running simulations, transcoding media files, or performing large-scale computations. It is optimized for applications that can scale out through the execution of many tasks in parallel. To do this efficiently, AWS Batch dynamically provisions compute resources (CPU or memory-optimized instances, GPU instances, etc.) based on the volume and resource requirements of your jobs. As a fully managed service, it abstracts away the compute fleet management. You simply submit your jobs, and AWS Batch handles the rest: it chooses where and when to run the jobs, launches additional capacity if needed, monitors the progress, and then terminates resources when they’re no longer required. This elastic scaling ensures you aren’t constrained by fixed server capacity, and you pay only for the resources you actually use.

Key AWS Batch Components:

There are a few fundamental concepts to understand in AWS Batch’s architecture:

- Jobs: A job is the basic unit of work in AWS Batch. It can be a shell script, an executable, or a containerized application that you want to run. Each job runs as a Docker container in AWS Batch, on either Fargate or EC2 resources in your environment. You give the job a name, and you can also specify dependencies between jobs. For example, you might have job B wait for job A to finish successfully before B runs. Jobs can be run individually or as part of an array or pipeline of jobs. When you submit an AWS Batch job, you specify which job definition and job queue it uses (more on these below), and you can pass in parameters or overrides at submission time.

- Job Definitions: A job definition in AWS Batch is like a blueprint for your jobs. It defines how the job should be run, including the Docker image to use for the container, the CPU and memory requirements, environment variables, and an IAM role if the job needs AWS permissions. You can think of it as a template for the containerized workload. For instance, a job definition might say “run the my-data-processing:latest image with 2 vCPUs and 4 GB of memory.” Many of the settings in a job definition can be overridden when you actually submit a job, allowing flexibility (for example, you might use one job definition but override the input file name or memory requirement for different runs). In short, the job definition describes what to run and with what resources.

- Job Queues: When you submit jobs, you send them to a job queue. An AWS Batch job queue is essentially a waiting area for jobs. The job will sit in the queue until the AWS Batch scheduler picks it up and assigns it to run on an available compute resource in a compute environment. Job queues can be associated with one or more compute environments. You can also assign priorities to multiple job queues and to compute environments within a queue. For example, you might have a high-priority queue for urgent jobs and a low-priority queue for background jobs. When both queues share a compute environment, AWS Batch gives preference to jobs from the higher-priority queue. The queue system, combined with scheduling policies, lets you control job order and resource usage.

- Compute Environments: A compute environment is the underlying compute resource pool where AWS Batch runs your jobs. Think of it as the execution environment for the jobs in your queues. An AWS Batch compute environment can be managed (provisioned by AWS) or unmanaged (managed by you). In a managed compute environment, you specify preferences like what types of EC2 instances (or Fargate) to use, any constraints (e.g., “use Spot instances up to 50% of on-demand price” or “use c5 and m5 instance families”), and the minimum, desired, and maximum number of vCPUs in the environment. AWS Batch will then automatically launch and terminate EC2 instances or allocate Fargate tasks within those bounds to handle your job queue’s workload. It essentially integrates with Auto Scaling to ensure enough instances are available for queued jobs and will scale down when idle. In an unmanaged compute environment, you handle the provisioning of the compute (for example, you might maintain your own ECS cluster or custom hardware). Unmanaged environments are useful if you need to use specialized resources (like a particular EC2 Dedicated Host or an on-prem resource connected via Outposts). In either case, the compute environment is where the worker nodes exist that will actually run the container jobs.

- Job Scheduler: AWS Batch includes a scheduler that determines when and where jobs run. By default, the scheduler uses a simple first-in, first-out (FIFO) strategy within each job queue. This means jobs are picked in the order they were submitted (respecting any job dependencies). However, AWS Batch also supports fair-share scheduling policies for more complex scenarios. With fair-share scheduling, you can define share identifiers and weights, essentially allocating portions of compute capacity to different teams or workload groups. This prevents one heavy user or workload from monopolizing the queue; the scheduler will distribute resources according to the configured shares. If no custom scheduling policy is attached to a queue, it’s FIFO by default. For fine-grained control over job execution order, you can also use job dependencies (via the dependsOn parameter when submitting jobs) to ensure certain jobs run only after others complete. In practice, many users rely on Amazon EventBridge (formerly CloudWatch Events) or Step Functions to orchestrate and trigger Batch jobs on a schedule or as part of workflows, which brings additional flexibility beyond the Batch service’s built-in scheduling.
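To make the components above more concrete, here is a minimal sketch of what the requests for a compute environment, a job queue, and a job definition might look like. The dictionary keys follow the parameter names of the AWS Batch API (as exposed by boto3's `create_compute_environment`, `create_job_queue`, and `register_job_definition`), but every name and value here (the subnet and security group IDs, the `ecsInstanceRole` profile, the `process.py` command, the `input_key` parameter) is a hypothetical placeholder, and the sketch only builds the payloads rather than calling AWS.

```python
"""Illustrative request payloads for the main AWS Batch building blocks."""
import json

# Hypothetical placeholders: replace with real values from your own account.
SUBNETS = ["subnet-EXAMPLE"]
SECURITY_GROUPS = ["sg-EXAMPLE"]
INSTANCE_PROFILE = "ecsInstanceRole"


def compute_environment_request(name, max_vcpus=64):
    """Managed Spot environment that can scale down to zero vCPUs when idle."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT",
            "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
            "bidPercentage": 50,  # pay at most 50% of the On-Demand price
            "minvCpus": 0,
            "maxvCpus": max_vcpus,
            "instanceTypes": ["c5", "m5"],
            "subnets": SUBNETS,
            "securityGroupIds": SECURITY_GROUPS,
            "instanceRole": INSTANCE_PROFILE,
        },
    }


def job_queue_request(name, compute_environment, priority):
    """Queue that feeds one compute environment; higher priority runs first."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment}
        ],
    }


def job_definition_request(name, image, vcpus, memory_mib):
    """Container job template; Ref::input_key is filled in at submit time."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "parameters": {"input_key": "default.csv"},
        "containerProperties": {
            "image": image,
            "command": ["python", "process.py", "Ref::input_key"],
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
        },
    }


if __name__ == "__main__":
    definition = job_definition_request(
        "my-data-processing", "my-data-processing:latest", 2, 4096
    )
    print(json.dumps(definition, indent=2))
```

If you use boto3, each dictionary could be passed as keyword arguments to the corresponding client call, for example `client.register_job_definition(**definition)`. Keeping the payloads as plain data also makes them easy to review or store in version control.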

How a Batch Job Runs (Lifecycle):

To summarize the workflow: you package your code or processing logic into a container image (e.g., a Docker image) and register a job definition for it. You create a compute environment (managed or unmanaged) and a job queue associated with that environment. When you submit a job (specifying the job definition and queue, plus any runtime overrides or parameters), the job enters the queue. The AWS Batch scheduler continuously monitors the queue; when it finds a job to run, it will look for available capacity in the linked compute environment. If capacity is available (e.g., an EC2 instance with free CPU/memory or an open slot for a Fargate task), the job is dispatched to run on that resource. If there isn’t available capacity, AWS Batch may scale up the environment – for example, launch a new EC2 instance – to accommodate the job. The job’s container image is pulled (from Amazon ECR or another registry), the container is started on the compute node, and the job moves to “RUNNING” state. AWS Batch monitors the job, and if it finishes successfully or fails, Batch will update the job status (and can trigger retries if configured). Logs from the job’s container (stdout/stderr) can be sent to Amazon CloudWatch Logs for you to review. Once jobs complete and the queue is idle, Batch will scale down the compute environment (if using a managed environment) by stopping or terminating excess instances so you’re not paying for unused capacity. This entire cycle is managed by AWS Batch behind the scenes, giving you a hands-free batch processing system.
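The submission step of this lifecycle can be sketched as follows. This is a simplified illustration, not a complete client: it builds the arguments for a `SubmitJob` request (the key names match the AWS Batch API), while the queue name, job names, and job ID used in the example are made up. The status names are the ones AWS Batch reports as a job moves through the queue.

```python
"""Sketch: building SubmitJob requests and reasoning about job status."""

# Statuses a job passes through before it ends, in order.
ACTIVE_STATES = ["SUBMITTED", "PENDING", "RUNNABLE", "STARTING", "RUNNING"]
TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def submit_job_request(name, queue, definition, depends_on=(),
                       overrides=None, parameters=None):
    """Return keyword arguments for a SubmitJob call."""
    request = {"jobName": name, "jobQueue": queue, "jobDefinition": definition}
    if depends_on:
        # The job stays PENDING until every listed job has succeeded.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on]
    if overrides:
        request["containerOverrides"] = overrides
    if parameters:
        request["parameters"] = parameters
    return request


def is_finished(status):
    """True once the job has reached SUCCEEDED or FAILED."""
    return status in TERMINAL_STATES


if __name__ == "__main__":
    job_a = submit_job_request(
        "job-a", "high-priority", "my-data-processing",
        parameters={"input_key": "2024-01-01.csv"},
    )
    # "job-a-id" stands in for the jobId returned when job A is submitted.
    job_b = submit_job_request(
        "job-b", "high-priority", "my-data-processing",
        depends_on=["job-a-id"],
        overrides={"resourceRequirements": [{"type": "MEMORY", "value": "8192"}]},
    )
    print(job_a)
    print(job_b)
```

With boto3, `boto3.client("batch").submit_job(**job_a)` would return a response containing the new `jobId`, which you could then pass to `describe_jobs` and check with `is_finished` in a polling loop.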

Common Use Cases for AWS Batch Processing

Because AWS Batch can run large-scale workloads efficiently, it is well-suited for a variety of high-performance and throughput-intensive tasks. In fact, AWS Batch is optimized for applications that scale out by running many jobs in parallel or that require significant compute resources for each job. Here are some common use cases:

- High Performance Computing (HPC) and Scientific Simulations: AWS Batch is frequently used for scientific research and engineering simulations that require large compute clusters. Examples include computational fluid dynamics, weather forecasting, climate modeling, seismic analysis, and protein folding simulations. Batch can run multi-node parallel jobs with MPI libraries, enabling traditional HPC workloads in the cloud. It supports jobs with demanding CPU/GPU needs and large memory requirements, which are common in scientific computing.

- Machine Learning & Data Analytics: For machine learning training jobs or large-scale data analysis, AWS Batch can spin up the required number of instances (including GPU instances for deep learning) to process data in parallel. Deep learning model training, hyperparameter tuning (by running many training jobs in parallel), genomics data analysis (DNA sequencing pipelines), and financial risk modeling (e.g., Monte Carlo simulations) are all examples where Batch is useful. These jobs might run for hours or days, far exceeding the limits of serverless functions, making Batch a better fit.

- Media Processing and Rendering: Studios and media companies leverage AWS Batch for video transcoding, image processing, and animation rendering. For instance, if you need to transcode thousands of video files or render 3D animation frames, you can put those tasks into AWS Batch. It will queue and distribute the work across as many instances as needed (taking advantage of Spot instances to save costs, if possible). Animation rendering and video encoding are batch jobs that can easily scale to hundreds or thousands of cores in parallel, which Batch can manage.

- Big Data ETL and Batch Data Processing: AWS Batch is a great fit for periodic big data jobs, such as nightly ETL (extract-transform-load) processes, report generation, or bulk data conversions. You might have a pipeline that aggregates logs, transforms data and loads it into a data warehouse. Instead of maintaining a fixed Hadoop/Spark cluster for periodic jobs, you could use AWS Batch to spin up instances on demand to run your data processing scripts or even containerized Spark jobs, then shut them down when done. Image processing at scale (e.g., applying filters or computer vision algorithms to millions of images) or bulk file processing (compressing files, converting formats in bulk) are other examples of batch processing tasks well-suited to Batch.

- Life Sciences and Genomics: In life sciences and healthcare, AWS Batch is used for workloads like genomics (processing gene sequencing data), drug discovery simulations, and bioinformatics pipelines. For example, a genomics analysis might involve running a sequence alignment job for each of thousands of samples, and Batch can queue these as array jobs and execute them concurrently. Given that AWS Batch jobs can be containerized, researchers can bring their own tools and software in containers, ensuring reproducible environments.

In general, any workload where you have a large volume of tasks to run, especially tasks that are compute-intensive or long-running, can benefit from AWS Batch. It shines when you need to process jobs in parallel at scale – whether it’s tens, thousands, or even hundreds of thousands of jobs – without having to manually manage the computing cluster. This makes it a powerful tool for organizations dealing with large-scale batch processing requirements.
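As a rough illustration of how such "many similar tasks" workloads are often split up, the sketch below shows a worker script for an array job. AWS Batch sets the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable in each child job of an array; everything else here (the sample file names, the array size of 4, the helper names) is assumed for the example.

```python
"""Sketch: a worker that picks its share of the input in an array job."""
import os


def array_index(environ=None):
    """Index of this child job; defaults to 0 when run outside an array job."""
    env = os.environ if environ is None else environ
    return int(env.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))


def items_for_child(items, index, array_size):
    """Every array_size-th item starting at index, so children never overlap."""
    return items[index::array_size]


if __name__ == "__main__":
    # Hypothetical inputs; in practice these might be listed from S3.
    samples = [f"sample-{n:04d}.fastq" for n in range(10)]
    array_size = 4  # must match the array size given when submitting the job
    for name in items_for_child(samples, array_index(), array_size):
        print("processing", name)
```

The array size itself is set at submission time (the `arrayProperties` field of the submit request), so one job definition and one submit call can fan out into thousands of child jobs that each process a different slice.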

(It’s worth noting that AWS Batch is not intended for real-time processing or highly latency-sensitive workloads – those are better served by other services like AWS Lambda or streaming systems. Batch is about throughput and scale for background or offline jobs.)

AWS Batch vs AWS Lambda – When to Use Which?


It’s common to compare AWS Batch with AWS Lambda, since both can be used to run code without managing servers. However, they are designed for very different use cases. Here we’ll highlight the key differences between AWS Batch vs Lambda and when each service is more appropriate:

- Execution Model and Duration: AWS Lambda is a serverless, event-driven compute service that runs your functions in response to events. Lambda functions have a maximum execution time of 15 minutes per invocation. This makes Lambda ideal for short-lived tasks like processing a single event (e.g., an image upload trigger) but impractical for long-running computations. AWS Batch jobs, on the other hand, can run as long as needed – there is no fixed timeout (you can optionally set your own timeout per job, but by default a Batch job will run until it finishes). If your workload might take hours or requires heavy computation, AWS Batch is the clear choice, whereas AWS Lambda should be reserved for tasks that complete in minutes or seconds.

- Compute Resources and Concurrency: With Lambda, you don’t provision any servers; AWS handles all scaling. You simply allocate memory (which indirectly allocates CPU) for your function, up to 10 GB of memory (and 6 vCPUs at the max memory). You cannot use GPUs with Lambda, and you’re limited in how much CPU/memory each invocation gets. AWS Batch provides much more flexibility here: you can run jobs that need large instances, custom hardware, or GPUs. For example, if you have a machine learning job that requires a GPU or 64 vCPUs and 256 GB of RAM, you can do that on AWS Batch by choosing an appropriate EC2 instance type in your compute environment. Lambda cannot handle that scenario. Also, Lambda functions are stateless and ephemeral: each run is isolated. AWS Batch jobs can be part of a larger context; for example, you might have a job that uses an EBS volume for scratch space or writes output to a shared file system like Amazon EFS (which Batch can mount into job containers). In terms of concurrency, Lambda can automatically scale to thousands of concurrent executions (with certain account limits), which is great for handling many small events. AWS Batch can also handle thousands of parallel jobs (array jobs up to 10,000 tasks, for instance), but it does so by provisioning actual instances/containers to run them. In practice, Lambda is superb for high concurrency of small tasks, whereas Batch is better for high throughput of large or numerous tasks that may need substantial compute per task.

- Triggering and Orchestration: AWS Lambda is designed to be triggered by a wide array of event sources: e.g., S3 events (object uploaded), API Gateway HTTP requests, DynamoDB table updates, SNS messages, EventBridge (formerly CloudWatch Events) timers, etc. This makes Lambda extremely flexible for event-driven architectures: you write a function and AWS will run it whenever the specified event happens, automatically. AWS Batch is not event-driven in the same way. You generally submit jobs to Batch via an API call (or via the console/CLI). To automate Batch jobs, you often use an event or scheduler in combination with Batch. For example, you might set up an EventBridge rule to trigger a Batch job submission every hour, or use an S3 event to invoke a Lambda that submits a Batch job. Batch doesn’t directly integrate with as many event sources on its own. That said, AWS Batch can be integrated into workflows using Step Functions or triggered on a schedule using EventBridge, but it’s not as instantaneous as Lambda responding to an event. In summary: use Lambda when you need to respond to events in real-time (with minimal latency) and when each task can run independently and complete quickly. Use Batch when you have a lot of work to run that isn’t tied to individual event triggers, or you have large processing jobs that need to be queued and executed reliably to completion.

- Cost Model: The cost structures of Lambda and Batch differ significantly. AWS Lambda charges you based on the number of times your function is invoked and the duration of each execution, multiplied by the amount of memory/CPU allocated. There is a generous free tier (1 million requests and 400,000 GB-seconds per month), after which you pay per request and per millisecond of execution time. This model is great for sporadic, short executions: you pay precisely for the time your code runs, and nothing when it isn’t running. AWS Batch, in contrast, has no direct service fee, so you don’t pay for “Batch” itself at all. You pay for the AWS resources that are launched to run your jobs. That means if Batch spins up EC2 instances or Fargate containers for 2 hours to run your job, you pay for 2 hours of those instances’ cost. There is no free tier specifically for Batch (aside from the normal free tier for EC2 if applicable). In general, Lambda can be more cost-effective for short, low-volume workloads, because you avoid having idle infrastructure and the granular billing works in your favor. But for long-running or compute-heavy workloads, AWS Batch is often more economical. With Batch, you can use cost-optimized instances (e.g., Spot instances at up to 90% discount) and achieve lower $/compute for large jobs. For example, processing a 10 GB file might take a few hours on a Batch EC2 instance, and you pay for those hours. On Lambda, you might have to split the task into many 15-minute Lambda invocations and the cost could end up higher due to the per-invoke overhead (and managing state between invocations becomes a headache). So, the rule of thumb is: small, intermittent tasks -> Lambda; big, intensive tasks -> Batch.

- Use Case Fit: Lambda and Batch actually complement each other in many architectures. AWS Lambda is excellent for event-driven tasks like thumbnail generation on image upload, validating form data on a trigger, streaming data processing in chunks, lightweight APIs, or gluing AWS services together. It excels at real-time processing and micro-tasks. AWS Batch is excellent for batch workloads such as nightly processing jobs, analytics crunching, bulk file conversions, or any kind of large-scale parallel computation. As an example, you might use Lambda to handle individual data ingestion events, but once enough data is accumulated, use AWS Batch to run a heavy analysis across the entire dataset. Another example: Lambda might be used to pre-process or trigger things, but if someone needs to run a one-off computation on a million records, Batch is a better fit. One concrete comparison: to process 1000 image files, you could invoke 1000 Lambda functions (one per file) or submit a Batch job array of 1000 tasks. The Lambda approach might be faster to start (no containers to provision) but each Lambda is limited in runtime and memory, whereas Batch could use bigger instances and possibly complete the tasks with fewer nodes in parallel but each working faster or on multiple images sequentially. There’s also the question of pipeline complexity: AWS Batch jobs can be part of multi-step workflows with dependencies (e.g., a pipeline of jobs) directly, whereas with Lambda you might need to orchestrate with Step Functions if you have multiple steps. In summary, use AWS Lambda for event-driven, real-time, short tasks; use AWS Batch for scheduled or on-demand batch processing of large or long-running tasks. A common adage is that AWS Lambda is great for milliseconds to minutes, AWS Batch is great for minutes to days of compute.

To illustrate the difference, here are some example use cases side by side:

- Image resizing: If a user uploads a single image and you need to create a thumbnail, an AWS Lambda function triggered by the S3 upload event is ideal (quick, seconds of runtime). If you need to process a million images to apply a new watermark, AWS Batch would let you queue this large job and process images in parallel batches without timing out.

- Data processing: If you have a stream of individual records (say, a Kinesis data stream) that need slight augmentation, Lambda can be used for each record in real-time. But if you want to aggregate and analyze a day’s worth of records (billions of events), you might dump them to S3 and then run an AWS Batch job that crunches the entire dataset in one go.

- Machine learning: A Lambda might be used to do a quick inference (e.g., trigger a Lambda to classify one image using a pre-loaded ML model – assuming it runs under 15 minutes and within memory). Training a machine learning model on thousands of images, however, is a multi-hour task requiring GPUs – something AWS Batch would handle by provisioning the right EC2 GPU instances.

In essence, AWS Batch and AWS Lambda serve different purposes. They are not mutually exclusive and often work together in architectures, but understanding their differences helps in choosing the right tool for your workload. If your job can be broken into quick, independent tasks triggered by events, Lambda offers a serverless, instantly scaling solution. If your job is a heavy-duty batch processing task or a set of tasks that need to be managed and scaled over time, AWS Batch is usually the better choice.
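The comparison above can be turned into a back-of-the-envelope calculation. The sketch below encodes the Lambda limits mentioned earlier (15 minutes, 10 GB, no GPU) and the two billing models. The prices in the example run are illustrative assumptions only, not quotes from the AWS price list, so check current pricing for your region before relying on the numbers.

```python
"""Sketch: rough Lambda-vs-Batch fit check and cost arithmetic."""

LAMBDA_MAX_SECONDS = 15 * 60   # maximum duration of one invocation
LAMBDA_MAX_MEMORY_GB = 10      # maximum memory per function


def fits_lambda(runtime_seconds, memory_gb, needs_gpu=False):
    """True if a single task stays within Lambda's per-invocation limits."""
    return (
        not needs_gpu
        and runtime_seconds <= LAMBDA_MAX_SECONDS
        and memory_gb <= LAMBDA_MAX_MEMORY_GB
    )


def lambda_cost(invocations, seconds_each, memory_gb,
                price_per_gb_second, price_per_million_requests):
    """Duration charge (GB-seconds) plus request charge; ignores free tier."""
    compute = invocations * seconds_each * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def batch_cost(instance_hours, hourly_price, spot_discount=0.0):
    """Batch has no service fee: cost is instance time, optionally on Spot."""
    return instance_hours * hourly_price * (1.0 - spot_discount)


if __name__ == "__main__":
    # Assumed prices for illustration only.
    on_lambda = lambda_cost(
        invocations=1_000_000, seconds_each=2.0, memory_gb=1.0,
        price_per_gb_second=0.0000166667, price_per_million_requests=0.20,
    )
    on_batch = batch_cost(instance_hours=150, hourly_price=0.17,
                          spot_discount=0.70)
    print(f"Lambda estimate: ${on_lambda:.2f}")
    print(f"Batch estimate:  ${on_batch:.2f}")
    print("one-hour GPU job fits Lambda?", fits_lambda(3600, 8, needs_gpu=True))
```

The instance-hours figure is the hard part to estimate in practice, since it depends on how well jobs pack onto instances and how long instances sit idle, which is why the cost optimization practices later in this article matter.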

AWS Batch vs Amazon S3 Batch Operations – Not the Same Thing


Before we conclude, it’s important to clarify a common confusion: AWS Batch (the service we’ve been discussing) is different from Amazon S3 Batch Operations. The names sound similar, but they serve very different purposes.

- AWS Batch (our topic here) is a compute service to run arbitrary batch jobs on AWS compute resources (EC2, Fargate, etc.). It’s about running your code or containerized workloads in batch.

- Amazon S3 Batch Operations is a feature of Amazon S3 (the storage service) that allows you to perform bulk actions on S3 objects. S3 Batch Operations lets you, for example, take a large set of S3 objects and apply the same action to all of them – such as copying objects to another bucket, modifying object ACLs, restoring archives, or invoking an AWS Lambda function on each object. It is a managed way to automate repetitive tasks for potentially billions of S3 objects.

The key distinction is that S3 Batch Operations is specific to S3 and object storage tasks, whereas AWS Batch is a general compute service for any type of batch job.

To avoid confusion when you hear “batch” in the context of S3: S3 Batch Operations does not use AWS Batch (though under the hood it might use AWS Lambda or other mechanisms for each object operation). It’s an S3-centric tool. For example, you could use S3 Batch Operations to initiate a resize on every image in a bucket by invoking a Lambda function for each object, or to copy every object to a new storage class. You would not use AWS Batch for that task, because AWS Batch doesn’t natively iterate over S3 objects; that is what S3 Batch Operations is designed for.

AWS Batch Pricing and Cost Optimization

One of the advantages of AWS Batch is its pricing model: there is no additional charge for AWS Batch itself. You only pay for the underlying AWS resources that Batch provisions on your behalf. In other words, if AWS Batch launches EC2 instances or runs tasks on AWS Fargate to execute your jobs, you pay the normal cost for those instances or Fargate CPU/memory usage. AWS Batch doesn’t add a surcharge for the orchestration. This means AWS Batch can be extremely cost-effective, especially if you optimize your compute environments for cost.

Here are key points and best practices for AWS Batch pricing and cost optimization:

- Resource Selection: When creating a compute environment, you have control over what instance types can be used (for EC2) and whether to use On-Demand or Spot. For cost savings, consider enabling Spot Instances in your managed compute environment. Spot Instances can be up to 90% cheaper than On-Demand EC2 prices. AWS Batch is a great fit for Spot because if a Spot instance gets interrupted (AWS reclaims it with a 2-minute notice) and the job has a retry strategy configured, Batch will re-queue the interrupted job and can spin up a new instance to continue the work. This makes Spot feasible even for long jobs, provided your jobs are checkpointable or can handle restarts. Which instances Batch launches depends on the allocation strategy: with BEST_FIT it picks the lowest-priced instance type that meets your requirements, while SPOT_CAPACITY_OPTIMIZED favors Spot pools that are less likely to be interrupted. For example, if your job needs 4 vCPUs and 16 GB RAM, Batch might choose a smaller instance type if available cheaply or pack multiple jobs on a bigger Spot instance if that’s more cost-efficient.

- Reserved Instances or Savings Plans: If you have steady batch workloads, you can use EC2 Reserved Instances or Savings Plans to reduce costs. AWS Batch will happily utilize any Reserved Instances you own (it just launches EC2 instances in your account). So if, for instance, you know you always need at least 32 vCPUs of baseline capacity for nightly jobs, you could purchase a Savings Plan sized to cover roughly 32 vCPUs worth of compute. Batch will then use that, and any additional burst above that could use Spot. The AWS Batch pricing page explicitly states you can use your Reserved Instances or Savings Plans and those discounts will apply.

- Right-sizing and Scaling Policies: A common cost optimization is to ensure that your Batch compute environment is not configured to keep unnecessary instances running. A managed compute environment lets you set a minimum number of vCPUs. If you set the minimum to 0, AWS Batch will scale down to zero instances when no jobs are queued (which saves money). If you set a non-zero minimum, you will always have that capacity running (which might be useful if you have constant jobs or want to reduce cold-start latency, but it incurs cost). Many users keep min=0 for cost savings, and maybe a small desired level if they have frequent jobs. Idle instances = wasted cost, so tune the min/desired capacity to your workload patterns. AWS Batch will scale up quickly when jobs come in, so in many cases you don’t need to keep idle servers around. Also, after jobs complete, Batch will scale down, but you can control how aggressively. Check your Compute Environment settings and remove or minimize the baseline capacity when possible. It’s also a good practice to periodically review that old, unused compute environments are disabled or updated to 0 min vCPUs so they don’t accidentally keep instances alive.

- Job Scheduling and Packing: Because you pay for instances by the second (for EC2 or Fargate), how you queue your jobs can affect cost. For example, if you have many small jobs, it might be efficient to run them on one instance sequentially versus spinning up new instances for each – AWS Batch will try to binpack jobs onto available instances. You can influence this by how you define jobs and their resource needs. If each job asks for a whole 4 vCPU instance but only uses 10% of it, you’ll be wasting capacity. Instead, if possible, define jobs with just the resources they truly need. AWS Batch can run multiple container jobs on one EC2 instance if the instance has enough CPU/memory for all. So accurately setting resource requirements helps the scheduler pack jobs and avoid fragmentation (unused resources). This yields better utilization and lower cost per work done.

- Spot Best Practices: If you opt for Spot, design your jobs to be fault tolerant. That means maybe writing intermediate results to S3 or checkpoints so that if a job is cut off midway (due to a Spot interruption), it can resume or restart without losing all progress. When a retry strategy is configured, AWS Batch will retry an interrupted job by launching it on a new instance, but if your job restarts from scratch each time, an unlucky series of interruptions could waste time. Consider using a checkpoint pattern or splitting large jobs into smaller chunks so they can complete within typical Spot availability windows. You configure job retries in AWS Batch (e.g., try up to N times) so that if a job fails (maybe due to an interruption or transient error), it will retry automatically. Overall, Spot instances offer tremendous cost savings for batch workloads: companies regularly save 50-90% on batch processing costs by using Spot where appropriate. The trade-off is handling possible interruptions, but for many batch jobs (like rendering frames or crunching numbers where a retry just re-processes the same chunk), this is manageable.

- Fargate vs EC2 cost considerations: AWS Batch can use AWS Fargate, which is serverless compute charged for the vCPU and memory a task requests, billed per second. Fargate is often more expensive per unit of compute than EC2 (especially compared to Spot EC2), but it has the advantage of fine-grained billing and no need to manage instances at all. If your batch jobs are sporadic and short, Fargate might actually save money by not having to keep an EC2 instance running idle between jobs. If your jobs are long-running or you have a lot of them, EC2 (with Spot) will likely be cheaper. Another factor: Fargate has resource limits (at the time of writing, up to 16 vCPUs and 120 GB of memory per job, and no GPUs). Large jobs may not fit in Fargate’s limits, necessitating EC2 anyway. You can mix and match: for example, small jobs on Fargate, big jobs on EC2 via separate compute environments, and assign your jobs to different queues accordingly.

- Monitoring and Tuning: Use Amazon CloudWatch metrics to monitor your AWS Batch environments. Look at metrics like CPUUtilization on your instances, or the number of Running vs. Pending jobs. If you consistently see low utilization, you might reduce the number of vCPUs requested by jobs (so more jobs can share an instance). If jobs are waiting while instances are underutilized, maybe the instance type is too large – allow smaller instances or adjust the job definitions. Also, take advantage of AWS Cost Explorer or cost allocation tags. AWS Batch lets you tag jobs or compute environments; you can then break down costs by those tags (e.g., cost per project or per workflow). This can help identify which batch workloads are costing the most and if they have inefficiencies.
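The Spot advice above (retry on interruption, and checkpoint so a retry does not start from scratch) can be sketched like this. The retry strategy uses the `attempts` and `evaluateOnExit` fields of a job definition; the `"Host EC2*"` pattern is the commonly suggested match for jobs whose host instance was terminated, so treat it as an assumption to verify against your own failed jobs. The checkpoint helper is hypothetical and writes to a local path for simplicity; in a real job the checkpoint would need to live somewhere that survives the instance, such as S3 or EFS.

```python
"""Sketch: Spot-friendly retry strategy plus a simple checkpoint loop."""
import json
import os


def spot_retry_strategy(attempts=5):
    """Retry only when the host was reclaimed; exit on any other failure."""
    return {
        "attempts": attempts,
        "evaluateOnExit": [
            {"onStatusReason": "Host EC2*", "action": "RETRY"},
            {"onReason": "*", "action": "EXIT"},
        ],
    }


def load_checkpoint(path):
    """Number of items already processed, or 0 if no checkpoint exists."""
    if not os.path.exists(path):
        return 0
    with open(path) as handle:
        return json.load(handle)["done"]


def save_checkpoint(path, done):
    """Write to a temp file, then rename, so a crash never leaves half a file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as handle:
        json.dump({"done": done}, handle)
    os.replace(tmp_path, path)


def process_with_checkpoint(items, checkpoint_path, handle_item):
    """Process items in order, resuming after the last checkpointed item."""
    start = load_checkpoint(checkpoint_path)
    for position in range(start, len(items)):
        handle_item(items[position])
        save_checkpoint(checkpoint_path, position + 1)
```

Because the checkpoint is saved after each item, a retried job repeats at most the one item that was in flight when the interruption happened. For expensive items you might checkpoint inside the item as well; for cheap ones, checkpointing every N items would reduce write overhead.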

In summary, AWS Batch pricing is simply the sum of the resources it launches. To optimize costs: use Spot instances where possible, right-size your jobs’ resource requests, avoid idle capacity, and leverage reserved capacity if you have steady workloads. With these strategies, AWS Batch can deliver vast amounts of compute power for a fraction of traditional costs. For example, using AWS Batch with Spot, organizations have run computational jobs like genomic analyses or rendering tasks at 10% of the on-demand cost, all while the service handles the provisioning and scaling automatically. Always remember that any data transfer or storage used by your jobs will also incur standard AWS costs (e.g., if your jobs read from S3 or write output to S3, you pay for those requests and data volumes, and if output is stored, you pay S3 storage). Those aren’t AWS Batch fees per se, but part of the overall cost consideration for your batch workload.
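To see why right-sizing resource requests matters, here is a small arithmetic sketch. It is a deliberate simplification: it assumes identical jobs and ignores the memory that the operating system and container agent reserve on each instance, so real packing will be somewhat less dense. The instance size and job counts are made-up example numbers.

```python
"""Sketch: how per-job resource requests affect the number of instances."""
import math


def jobs_per_instance(instance_vcpus, instance_mem_gib, job_vcpus, job_mem_gib):
    """How many identical jobs fit on one instance (CPU or memory bound)."""
    by_cpu = instance_vcpus // job_vcpus
    by_memory = int(instance_mem_gib // job_mem_gib)
    return min(by_cpu, by_memory)


def instances_needed(job_count, per_instance):
    """Instances required to run all jobs at the same time."""
    if per_instance < 1:
        raise ValueError("job does not fit on this instance type")
    return math.ceil(job_count / per_instance)


if __name__ == "__main__":
    # Hypothetical 16 vCPU / 64 GiB instance and 100 concurrent jobs.
    oversized = jobs_per_instance(16, 64, job_vcpus=4, job_mem_gib=8)
    right_sized = jobs_per_instance(16, 64, job_vcpus=1, job_mem_gib=2)
    print("oversized requests:  ", instances_needed(100, oversized), "instances")
    print("right-sized requests:", instances_needed(100, right_sized), "instances")
```

In this example, jobs that request 4 vCPUs but could run in 1 need 25 instances instead of 7 for the same 100 jobs, which is the kind of gap the CloudWatch utilization metrics mentioned above can help you spot.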

Conclusion

AWS Batch is a powerful service for anyone needing to perform large-scale batch processing in the cloud. It abstracts the complexities of provisioning, managing, and scheduling compute resources, allowing you to run everything from one-off jobs to massive parallel workloads with ease. We covered what AWS Batch is, its core components (jobs, job definitions, queues, compute environments), and how it works behind the scenes to automatically manage compute capacity. We also provided a simple AWS Batch example of submitting a containerized job, explored typical use cases like HPC, data processing, and media rendering, and compared AWS Batch vs Lambda to highlight when to use each. In terms of cost, AWS Batch’s model of only paying for underlying resources (with no extra service fee) combined with options like Spot instances makes it quite attractive for cost-conscious compute-intensive tasks, especially compared to trying to force-fit those tasks into a 15-minute serverless function. Finally, we clarified the difference between AWS Batch and Amazon S3 Batch Operations to prevent any confusion between the two.

For beginners and intermediate users, the key takeaway is that AWS Batch lets you focus on your batch jobs and algorithms, while AWS handles the infrastructure. You don’t need to maintain your own cluster or worry about scaling: you define your jobs and let Batch orchestrate them at any scale. Whether you’re rendering a film, analyzing genomic sequences, or converting millions of files, AWS Batch provides a reliable, managed platform to get it done efficiently. With the information and tutorial above, you should be well-equipped to start experimenting with AWS Batch for your own batch processing needs. Happy batching!

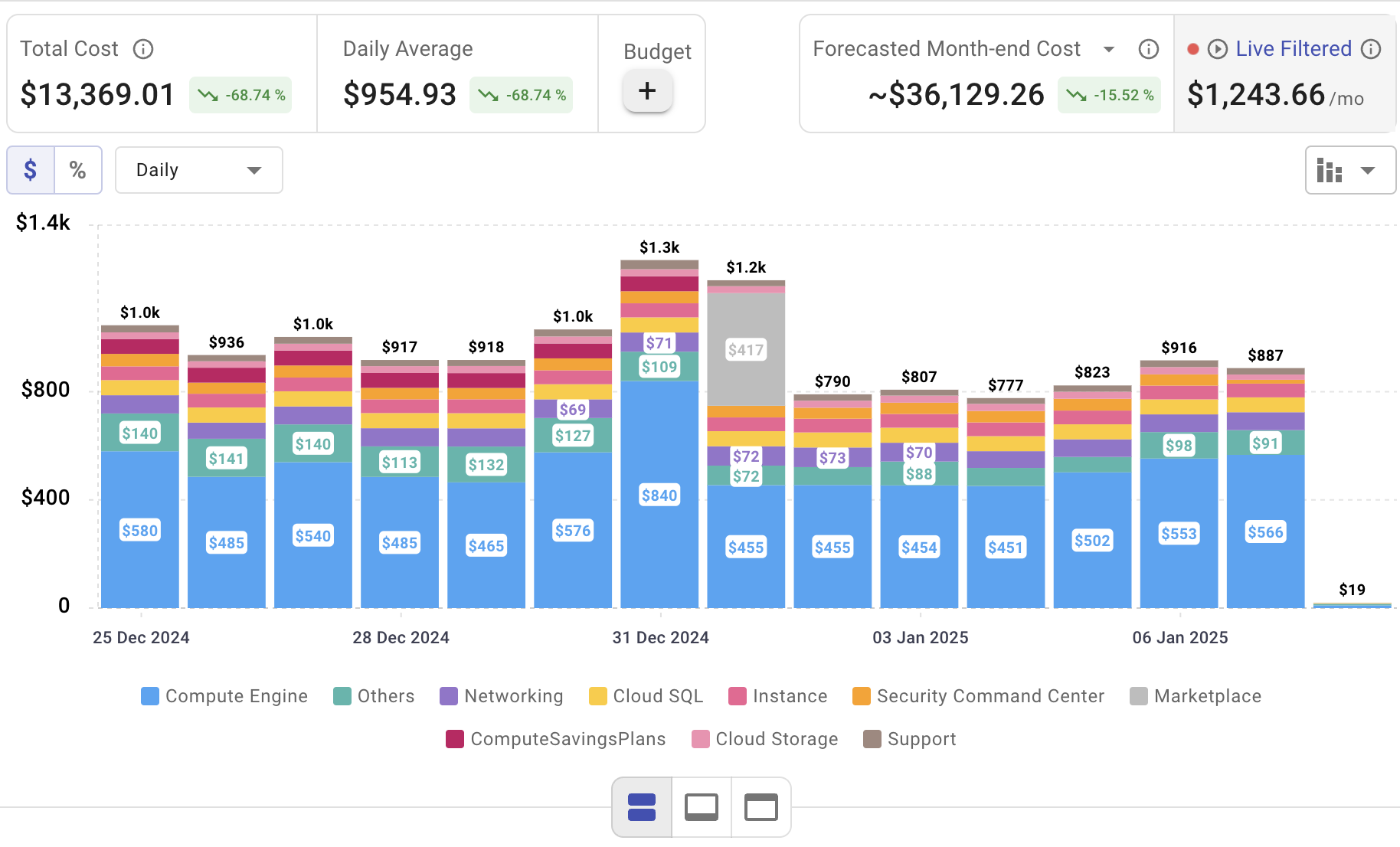

Optimize Your Cloud Expenses with Cloudchipr

Setting up AWS Batch efficiently is only the beginning; actively managing cloud spend is vital to maintaining budget control. Cloudchipr offers an intuitive platform that delivers multi-cloud cost visibility, helping you eliminate waste and optimize resources across AWS, Azure, and GCP.

Key Features of Cloudchipr

Automated Resource Management:

Easily identify and eliminate idle or underused resources with no-code automation workflows. This ensures you minimize unnecessary spending while keeping your cloud environment efficient.

Receive actionable, data-backed advice on the best instance sizes, storage setups, and compute resources. This enables you to achieve optimal performance without exceeding your budget.

Keep track of your Reserved Instances and Savings Plans to maximize their use.

Monitor real-time usage and performance metrics across AWS, Azure, and GCP. Quickly identify inefficiencies and make proactive adjustments, enhancing your infrastructure.

Take advantage of Cloudchipr’s on-demand, certified DevOps team that eliminates the hiring hassles and off-boarding worries. This service provides accelerated Day 1 setup through infrastructure as code, automated deployment pipelines, and robust monitoring. On Day 2, it ensures continuous operation with 24/7 support, proactive incident management, and tailored solutions to suit your organization’s unique needs. Integrating this service means you get the expertise needed to optimize not only your cloud costs but also your overall operational agility and resilience.

Experience the advantages of integrated multi-cloud management and proactive cost optimization by signing up for a 14-day free trial today, no hidden charges, no commitments.
