Getting Started with AWS DevOps Services: Build, Test, and Deploy Faster

Introduction

DevOps is all about speeding up software delivery without sacrificing quality. AWS (Amazon Web Services) offers a broad set of DevOps services that help teams automate software development and operations tasks, from building code to deployment and monitoring. In this guide, we’ll explore the core AWS tools that high-velocity teams use for continuous integration, continuous delivery, infrastructure as code, and monitoring. Whether you’re writing code, running operations, managing teams, or scaling a global business, knowing how these services fit together is a shortcut to faster, more dependable releases.

Key AWS DevOps Services at a Glance

To implement DevOps on AWS, you’ll encounter several specialized services. Here’s a quick overview of the core tools we’ll cover and what they do:

- AWS CodeBuild: A continuous integration service that compiles code, runs tests, and produces deployment-ready packages.

- AWS CodePipeline: A continuous delivery service to orchestrate your release process through multiple stages (build, test, deploy).

- AWS CodeDeploy: A deployment automation service that releases application updates to various compute targets (Amazon EC2, on-premises servers, AWS Lambda, and Amazon ECS).

- AWS CloudFormation: An infrastructure-as-code service for provisioning and managing cloud resources through templated stacks.

- Amazon CloudWatch: A monitoring and observability service for collecting logs, metrics, and events from your applications and infrastructure.

- Amazon ECS & EKS: Managed container orchestration services (ECS for AWS-native containers, EKS for Kubernetes) to run and scale containerized applications.

These AWS DevOps services cover the end-to-end software lifecycle, helping teams implement DevOps best practices on AWS. Now, let’s look at each in more detail and see how they work together.

Quick note: AWS CodeCommit stopped accepting new customers on July 25, 2024, and AWS has said no new features are planned; existing repositories still work, but most new pipelines start with external Git providers such as GitHub or Bitbucket, connected through AWS CodeStar Connections (since renamed AWS CodeConnections).

Continuous Integration with AWS CodeBuild

Once code is in a repository, the next step is building and testing it. AWS CodeBuild is a fully managed continuous integration (CI) service that compiles your source code, runs tests, and produces software packages that are ready to deploy. With CodeBuild, you don’t need to set up or manage any build servers; AWS handles the provisioning and scaling of the build environments on the fly.

CodeBuild supports a variety of programming languages and build tools out-of-the-box (through pre-configured build environments for languages like Python, Java, Node.js, .NET, and more). It can also use custom build environments packaged as Docker containers, giving you flexibility to build in almost any environment you need. During a build, CodeBuild can run your unit tests or integration tests and even generate reports for test results. It scales automatically to run multiple builds in parallel, so your CI process won’t be a bottleneck even as your development team grows.

Integrating CodeBuild into your pipeline ensures that every code change is compiled and verified in a consistent environment, which catches errors early. For example, when a developer pushes new code to the repository, a CodeBuild project can be triggered to compile the code and run the test suites. If the build or tests fail, the build action reports the failure and CodePipeline stops that execution, preventing bad code from being deployed. If everything passes, the output artifact (such as compiled binaries or a Docker image) is stored (often in Amazon S3 or Amazon ECR) for the next stage of the process. In short, CodeBuild provides the automation and consistency required for true continuous integration, which is a cornerstone of DevOps practices.

(Developers benefit from faster feedback on their commits – instead of waiting for a manually run build or tests, CodeBuild provides results in minutes. This automation boosts team productivity and confidence in the code.)
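CodeBuild reads its build instructions from a buildspec file, by default `buildspec.yml` at the root of the source. The sketch below mirrors that file's structure as a Python dict so the phase ordering is easy to see. The commands, the report group name `unit-tests`, and the artifact path are illustrative assumptions for a hypothetical Python project; in a real repository the same keys would be written in YAML.

```python
# Hypothetical buildspec for a Python project, mirrored as a dict.
# In a real repository these keys live in buildspec.yml (YAML).
BUILDSPEC = {
    "version": 0.2,
    "phases": {
        "install": {"commands": ["pip install build -r requirements.txt"]},
        "build": {
            "commands": [
                "pytest --junitxml=reports/junit.xml",  # run the test suite
                "python -m build --outdir dist",        # package only if tests passed
            ]
        },
    },
    # Test results CodeBuild can surface as a test report (group name is a placeholder).
    "reports": {
        "unit-tests": {
            "files": ["junit.xml"],
            "base-directory": "reports",
            "file-format": "JUNITXML",
        }
    },
    # Files handed to the next pipeline stage as the output artifact.
    "artifacts": {"files": ["dist/**/*"]},
}

# CodeBuild runs the phases in this fixed order; absent phases are skipped.
PHASE_ORDER = ["install", "pre_build", "build", "post_build"]


def commands_in_order(spec: dict) -> list[str]:
    """Flatten a buildspec into the command sequence a successful build would run."""
    phases = spec.get("phases", {})
    ordered: list[str] = []
    for phase in PHASE_ORDER:
        ordered.extend(phases.get(phase, {}).get("commands", []))
    return ordered
```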

Continuous Delivery with AWS CodePipeline

After code is built and tested, you need a mechanism to move it through various stages (like staging and production) automatically. This is where AWS CodePipeline comes in. AWS CodePipeline is a fully managed continuous delivery service for modeling, visualizing, and automating your software release process. You can define a pipeline with stages such as Source, Build, Test, and Deploy, and CodePipeline will orchestrate the flow of your application changes through these stages every time there is an update.

Think of CodePipeline as the automation glue that ties together all the other services and steps in your DevOps workflow. It can pull in the latest code from GitHub, kick off a CodeBuild job to run tests, then deploy the artifacts using CodeDeploy or other deployment actions. You define the sequence of actions and the rules (for example, only promote to production if all tests pass). CodePipeline handles all the heavy lifting of invoking the right tools in the right order. It even has integration points for manual approvals (if you want a human to review before deploying to production) and can integrate third-party tools like Jenkins, GitHub webhooks, or testing services, making it very extensible.

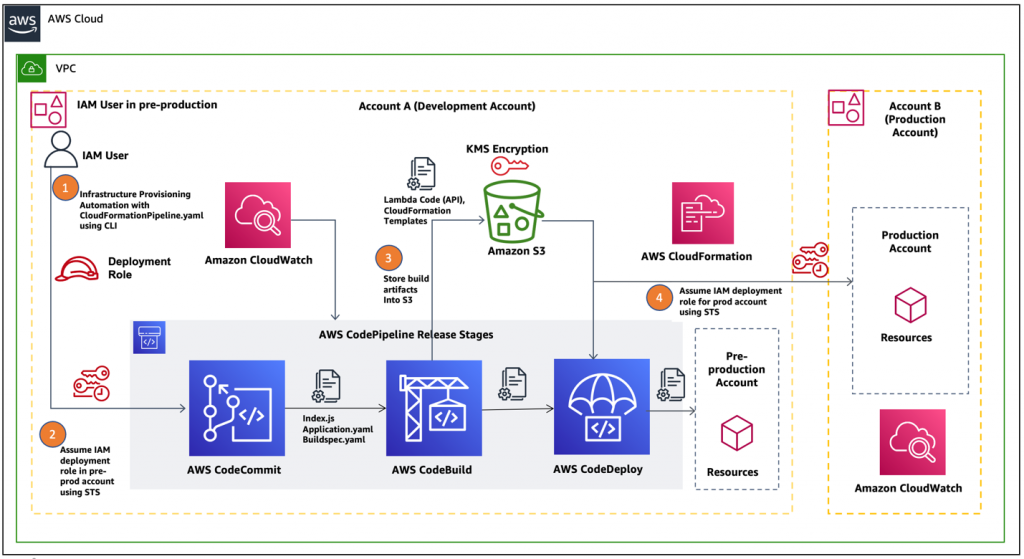

To illustrate a typical workflow, here’s an example of how a CI/CD pipeline on AWS might operate:

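- Source Stage: A developer commits code to a GitHub or Bitbucket repository. This commit triggers a pipeline execution: for GitHub or Bitbucket sources, the AWS CodeStar Connections source action detects the change; for AWS CodeCommit repositories, an Amazon EventBridge (formerly CloudWatch Events) rule typically detects the commit.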

- Build and Test Stage: AWS CodePipeline retrieves the latest code and starts a CodeBuild project to compile the code and run tests. If the build succeeds and tests pass, CodeBuild outputs an artifact (e.g., a deployable package or container image) which gets stored in Amazon S3 or Amazon ECR for later use.

- Deploy to Staging: CodePipeline then uses CodeDeploy (or another deployment mechanism) to deploy the new build to a staging or dev environment (for example, a group of Amazon EC2 instances or a Docker container on ECS). This allows testing the deployment in an environment similar to production.

- Deploy to Production: If the staging deployment is successful (and any manual approval gates are passed), CodePipeline proceeds to deploy the application to the production environment using CodeDeploy. Users then have access to the new version of the application. CodePipeline will mark the pipeline execution as complete.
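The control flow of those four stages can be modeled in a few lines. This is a toy, local simulation rather than the CodePipeline API: `Stage`, `run_pipeline`, and the stage names are hypothetical, each action simply returns whether it succeeded, and `approve` stands in for a manual approval gate.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    action: Callable[[], bool]    # returns True if the stage's work succeeded
    needs_approval: bool = False  # models a manual approval gate before the stage


def run_pipeline(stages: list[Stage], approve: Callable[[str], bool]) -> list[str]:
    """Run stages in order, stopping at the first failure or rejected approval."""
    history: list[str] = []
    for stage in stages:
        if stage.needs_approval and not approve(stage.name):
            history.append(f"{stage.name}: rejected")
            return history
        ok = stage.action()
        history.append(f"{stage.name}: {'succeeded' if ok else 'failed'}")
        if not ok:
            return history  # later stages never run, so a bad build cannot reach production
    history.append("execution: succeeded")
    return history


stages = [
    Stage("Source", lambda: True),
    Stage("BuildAndTest", lambda: True),
    Stage("DeployStaging", lambda: True),
    Stage("DeployProduction", lambda: True, needs_approval=True),
]
print(run_pipeline(stages, approve=lambda name: True))
```

The key design point is that each stage only runs if every earlier stage succeeded, which is what keeps a failed build or a rejected approval from promoting a release.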

This entire process is automated and repeatable, which means you can release updates to your software quickly and consistently. CodePipeline runs these steps automatically whenever it detects a change, enabling rapid delivery of new features (one of the key DevOps goals). Because each stage is defined and scripted, you also reduce the chances of human error that often come with manual deploys.

Automated Deployments with AWS CodeDeploy

Deploying software to running servers or services can be tricky, especially when aiming for zero downtime. AWS CodeDeploy is a service that automates application deployments to various compute platforms, including Amazon EC2 instances, on-premises servers, serverless AWS Lambda functions, and even Amazon ECS containers. Its goal is to make deploying as easy as clicking a button (or running a pipeline) and letting AWS handle the process of updating each instance or resource safely.

With CodeDeploy, you define a deployment configuration that determines how the rollout should occur. For example, you can deploy to one server at a time, half of them at a time, or all at once, depending on your risk tolerance. More advanced strategies include blue/green deployments, where CodeDeploy launches a fresh set of instances (or new containers) with the new version, switches traffic to them when ready, and then terminates the old ones – all automatically. This can greatly reduce (or eliminate) downtime during releases and provides an easy rollback mechanism if something goes wrong. AWS CodeDeploy makes it easier to rapidly release new features and avoid downtime during deployments by handling the complexity of updating applications in a controlled way.
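The three rollout speeds correspond to CodeDeploy's predefined configurations `CodeDeployDefault.OneAtATime`, `CodeDeployDefault.HalfAtATime`, and `CodeDeployDefault.AllAtOnce`. The sketch below shows how each might split a small fleet; it is a simplified model (real CodeDeploy schedules instances against a minimum-healthy-hosts rule rather than fixed batches), and the host names are hypothetical.

```python
def deployment_batches(hosts: list[str], config: str) -> list[list[str]]:
    """Split hosts into rollout batches under a simplified model of CodeDeploy's predefined configs."""
    if not hosts:
        return []
    n = len(hosts)
    if config == "CodeDeployDefault.AllAtOnce":
        size = n
    elif config == "CodeDeployDefault.HalfAtATime":
        size = max(1, n // 2)  # up to half the fleet at a time, fractions rounded down
    elif config == "CodeDeployDefault.OneAtATime":
        size = 1
    else:
        raise ValueError(f"unknown deployment config: {config}")
    return [hosts[i:i + size] for i in range(0, n, size)]


fleet = ["web-1", "web-2", "web-3", "web-4", "web-5"]
for cfg in ("CodeDeployDefault.OneAtATime", "CodeDeployDefault.HalfAtATime", "CodeDeployDefault.AllAtOnce"):
    print(cfg, [len(batch) for batch in deployment_batches(fleet, cfg)])
```

For the five-host fleet, the loop prints batch sizes `[1, 1, 1, 1, 1]`, `[2, 2, 1]`, and `[5]`, which makes the trade-off visible: smaller batches mean a slower rollout but fewer hosts exposed to a bad release at once.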

Another powerful feature is that CodeDeploy can monitor the health of your application during deployments. It can integrate with Amazon CloudWatch alarms or run validation tests to ensure the new version is working. If it detects failures (e.g., instances failing health checks), CodeDeploy can automatically roll back to the previous version, thus protecting your users from broken releases. This aligns perfectly with DevOps principles of continuous deployment – you can deploy fast but also fail fast and recover fast, thanks to automation.
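The control flow behind automatic rollback can be sketched as follows. This is a simplified local model with hypothetical callbacks (`install`, `is_healthy`, `roll_back`); CodeDeploy itself rolls back by redeploying the last known good revision as a new deployment, and its failure signals come from lifecycle events or CloudWatch alarms rather than a single health function.

```python
from typing import Callable


def deploy_with_rollback(
    batches: list[list[str]],
    install: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    roll_back: Callable[[str], None],
) -> bool:
    """Deploy batch by batch; if any host in a batch is unhealthy, revert every updated host."""
    updated: list[str] = []
    for batch in batches:
        for host in batch:
            install(host)
            updated.append(host)
        if not all(is_healthy(host) for host in batch):
            for host in reversed(updated):  # undo the most recent changes first
                roll_back(host)
            return False
    return True


installed: list[str] = []
reverted: list[str] = []
ok = deploy_with_rollback(
    [["web-1", "web-2"], ["web-3", "web-4"]],
    install=installed.append,
    is_healthy=lambda host: host != "web-3",  # simulate one bad instance
    roll_back=reverted.append,
)
print(ok, reverted)
```

Here the second batch fails its health check, so the call returns `False` and all four hosts are reverted, newest first.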

In practice, teams often use CodeDeploy through CodePipeline (as we discussed above). For instance, CodePipeline can have a deploy action that uses CodeDeploy to push out the build artifact to a fleet of EC2 instances running a web application. CodeDeploy agents installed on those instances will take care of pulling the new version and updating the application according to the deployment strategy you choose. CodeDeploy keeps a history of deployments, so you can track when and where a certain application revision was deployed.
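On EC2 and on-premises targets, the CodeDeploy agent follows an `appspec.yml` file in the revision bundle that says where files go and which scripts to run at each lifecycle hook. The dict below mirrors that file's structure; the script paths and destination directory are hypothetical, and the hook list covers only the scriptable hooks of an in-place deployment (load balancer hooks are omitted).

```python
# Mirrors the structure of an appspec.yml for an EC2/on-premises deployment.
# Script paths and the destination directory are placeholders.
APPSPEC = {
    "version": 0.0,
    "os": "linux",
    "files": [{"source": "/", "destination": "/var/www/app"}],
    "hooks": {
        "ApplicationStart": [{"location": "scripts/start_server.sh", "timeout": 300, "runas": "root"}],
        "BeforeInstall": [{"location": "scripts/install_deps.sh", "timeout": 300, "runas": "root"}],
        # Note: ApplicationStop actually runs the script from the previously deployed revision.
        "ApplicationStop": [{"location": "scripts/stop_server.sh", "timeout": 300, "runas": "root"}],
        "ValidateService": [{"location": "scripts/health_check.sh", "timeout": 120}],
    },
}

# Scriptable hooks of an in-place deployment, in the order the agent runs them.
# (DownloadBundle and Install also run in between, but are reserved for the agent.)
HOOK_ORDER = ["ApplicationStop", "BeforeInstall", "AfterInstall", "ApplicationStart", "ValidateService"]


def scripts_in_run_order(appspec: dict) -> list[str]:
    """Return hook script paths in the order the agent would execute them."""
    hooks = appspec.get("hooks", {})
    return [entry["location"] for hook in HOOK_ORDER for entry in hooks.get(hook, [])]
```

Listing the hooks out of order in the dict, as above, makes no difference: the agent always follows the lifecycle order, not the order in the file.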

By automating deployments, CodeDeploy removes a lot of the error-prone manual steps (copying files, restarting servers, etc.) from your release process. This not only saves time but also standardizes the procedure across environments. Whether you’re a small startup deploying a simple app to a couple of servers, or an enterprise rolling out a complex microservices update to hundreds of instances, CodeDeploy can scale to your needs and ensure each deployment is done in a repeatable, safe manner.

Infrastructure as Code with AWS CloudFormation

Infrastructure provisioning and management are crucial in DevOps. Rather than manually clicking around in the AWS console to create resources (which is error-prone and hard to reproduce), DevOps practices encourage treating infrastructure as code. AWS CloudFormation is the go-to service for this on AWS. It allows you to define your AWS infrastructure in template files (written in JSON or YAML) and then deploy those templates to create stacks of resources.

With CloudFormation, you can describe all the AWS resources your application needs – such as EC2 instances, load balancers, databases, VPC networks, IAM roles, etc. – in a single template. Deploying the template will launch all those resources in an orderly and predictable fashion. Because the template is code, you can version control it and review changes just like you do application code. This means your infrastructure changes go through the same pipeline and approval processes as any software change, bringing infrastructure management into the DevOps workflow.
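A minimal template makes the idea concrete. The sketch below builds one in JSON form as a Python dict: a single versioned S3 bucket tagged with an `Environment` parameter, so the same template can be reused for dev, test, and prod. The logical ID `ArtifactBucket`, the parameter name, and its allowed values are illustrative assumptions.

```python
import json

# A minimal CloudFormation template in JSON form (names are illustrative).
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Versioned artifact bucket (illustrative example)",
    "Parameters": {
        "Environment": {"Type": "String", "AllowedValues": ["dev", "test", "prod"]}
    },
    "Resources": {
        "ArtifactBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"},
                "Tags": [{"Key": "Environment", "Value": {"Ref": "Environment"}}],
            },
        }
    },
    "Outputs": {
        # Ref on an S3 bucket resolves to the bucket name.
        "BucketName": {"Value": {"Ref": "ArtifactBucket"}}
    },
}


def render(template: dict) -> str:
    """Serialize the template to JSON text that can be saved as template.json."""
    return json.dumps(template, indent=2)
```

After saving `render(TEMPLATE)` as `template.json`, a command such as `aws cloudformation deploy --template-file template.json --stack-name artifacts-dev --parameter-overrides Environment=dev` would create or update the stack (the stack name here is a placeholder).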

Key benefits of CloudFormation include repeatability and consistency. If you need to set up a new environment (say a dev, test, or additional production environment), you can reuse the same template to ensure it’s identical to the others. CloudFormation handles the provisioning and works out the dependencies between resources for you. If you need to update resources, you can update the template and apply it; CloudFormation will figure out what changes to make (it even lets you preview changes with a change set before applying them, to avoid surprises). If something goes wrong during creation or update, CloudFormation can automatically roll back to the last good state, which is a lifesaver.
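Conceptually, a change set compares the template you submit with the stack's current one. A toy version of that comparison, keyed on logical resource IDs, illustrates the idea. It is not how CloudFormation computes change sets internally: the real service also evaluates property-level update behavior and reports whether a resource will be replaced. The example templates are hypothetical.

```python
def preview_changes(old: dict, new: dict) -> list[tuple[str, str]]:
    """Compare two templates' Resources sections by logical ID.

    Returns (action, logical_id) pairs using the Add/Modify/Remove action names
    that change sets report; replacement behavior is deliberately ignored.
    """
    old_res = old.get("Resources", {})
    new_res = new.get("Resources", {})
    changes: list[tuple[str, str]] = []
    for logical_id in sorted(old_res.keys() | new_res.keys()):
        if logical_id not in old_res:
            changes.append(("Add", logical_id))
        elif logical_id not in new_res:
            changes.append(("Remove", logical_id))
        elif old_res[logical_id] != new_res[logical_id]:
            changes.append(("Modify", logical_id))
    return changes


before = {"Resources": {
    "Bucket": {"Type": "AWS::S3::Bucket", "Properties": {}},
    "Queue": {"Type": "AWS::SQS::Queue", "Properties": {}},
}}
after = {"Resources": {
    "Bucket": {"Type": "AWS::S3::Bucket", "Properties": {"VersioningConfiguration": {"Status": "Enabled"}}},
    "Topic": {"Type": "AWS::SNS::Topic", "Properties": {}},
}}
print(preview_changes(before, after))
```

For these two templates the preview is `[('Modify', 'Bucket'), ('Remove', 'Queue'), ('Add', 'Topic')]`, the kind of summary you would review before executing a real change set.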

From a DevOps perspective, CloudFormation enables self-service and automation for infrastructure. Developers or operations engineers can propose changes to infrastructure via templates, and those can be rolled out via pipelines just like application deployments. In fact, CodePipeline has built-in actions for CloudFormation, so you can have a pipeline stage that updates your infrastructure stack (for example, deploy a new AWS Lambda function or microservice infrastructure) as part of a release. This makes it possible to automate not just code deployments but also infrastructure changes (sometimes called GitOps or Infrastructure as Code pipelines).

Another advantage is that CloudFormation serves as documentation of your architecture. Anyone with access to the templates can understand what resources are in play, which helps with onboarding and troubleshooting. And because CloudFormation is an AWS-native service, it is continuously updated to support new AWS services and features; you can manage a wide array of services through it, from EC2 and S3 to higher-level services like AWS Step Functions or Amazon API Gateway.

(In summary, AWS CloudFormation lets DevOps teams provision and manage infrastructure in a safe, repeatable manner, treating infrastructure changes with the same rigor as application code changes. This is essential for achieving consistent environments across dev, test, and prod, and it greatly supports the agility and control that DevOps culture strives for.)

Monitoring and Logging with Amazon CloudWatch

The DevOps lifecycle doesn’t end once code is deployed – continuous monitoring and feedback are equally important. Amazon CloudWatch is AWS’s primary monitoring and observability service, providing a unified view of the operational health of your systems. CloudWatch collects metrics from AWS services (and even your own applications), aggregates logs, and can emit alerts or trigger automated actions based on defined thresholds.

In practice, CloudWatch helps DevOps teams keep an eye on everything running in AWS: CPU utilization of your EC2 servers, memory usage of containers, error rates of your web application, latency of your APIs, and much more. By collecting data across AWS resources, CloudWatch gives visibility into system-wide performance and allows you to set alarms and automatically react to changes. For example, you might set a CloudWatch alarm to notify the team (or trigger an AWS Lambda function) if a production server’s CPU stays above 90% for 5 minutes, indicating a possible issue.
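The CPU example corresponds to alarm settings of a 60-second `Period`, `EvaluationPeriods` of 5, `DatapointsToAlarm` of 5, `Threshold` of 90, and a `ComparisonOperator` of `GreaterThanThreshold`. The function below is a simplified local model of that evaluation, not CloudWatch's implementation (it ignores missing-data handling, for instance), and the CPU series is made up. Note that one-minute EC2 CPU datapoints require detailed monitoring; basic monitoring publishes every five minutes.

```python
def alarm_state(datapoints: list[float], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified GreaterThanThreshold evaluation over the most recent periods."""
    if evaluation_periods <= 0:
        raise ValueError("evaluation_periods must be positive")
    window = datapoints[-evaluation_periods:]
    if len(window) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# "CPU above 90% for 5 minutes" with one datapoint per minute (sample values).
cpu = [42.0, 55.0, 91.5, 93.0, 95.2, 97.8, 92.1]
print(alarm_state(cpu, threshold=90.0, evaluation_periods=5, datapoints_to_alarm=5))
```

Only the last five datapoints count, and all of them exceed 90, so this prints `ALARM`. Lowering `datapoints_to_alarm` below `evaluation_periods` (an "M out of N" alarm) makes the alarm tolerate a brief dip without resetting.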

CloudWatch has several components that are particularly useful in a DevOps context:

- CloudWatch Metrics & Alarms: Metrics are numerical data points (like CPU %, network bytes, etc.) emitted by AWS services or custom instruments. You can monitor these in dashboards and create alarms that notify you (via email, SMS, Slack integration, etc.) or trigger automated actions (like scaling up resources or running a Lambda function to remediate an issue) when thresholds are crossed.

- CloudWatch Logs: This feature aggregates application and system logs from various sources (EC2 instances, Lambda functions, container logs, etc.) into a centralized service. Instead of SSH-ing into servers to read log files, you can view and search all logs in one place. You can also set up log-based metrics or alerts (e.g., trigger an alarm if the word “ERROR” appears in the logs too many times within an hour).

- CloudWatch Events / EventBridge: CloudWatch Events (now evolved into Amazon EventBridge) allows event-driven actions. For instance, as we saw earlier, an event rule can listen for repository push events and then start a CodePipeline execution. You can also trigger events on a schedule (cron jobs in the cloud) or in response to AWS resource changes. This is critical for automating responses in your DevOps pipeline, not just for CI/CD triggers but also for auto-remediation (such as automatically rebooting a server when a health check alarm fires).
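EventBridge rules select events using JSON event patterns. A simplified matcher shows the core idea for a rule that reacts to EC2 instances stopping or terminating. It supports only exact-value lists and nested objects (real patterns also offer prefix, numeric, and `anything-but` filters), and the instance ID in the sample event is made up.

```python
from typing import Any


def matches(pattern: dict[str, Any], event: dict[str, Any]) -> bool:
    """Simplified EventBridge-style matching: exact-value lists and nested objects only."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the corresponding sub-object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # Leaf pattern is a list of allowed values; an array field matches if any element does.
            candidates = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in candidates):
                return False
    return True


# Rule pattern: react when an EC2 instance stops or terminates.
PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped", "terminated"]},
}

event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "stopped"},
}
print(matches(PATTERN, event))
```

Fields that appear in the event but not in the pattern (like `instance-id` here) are ignored, which is why patterns stay short even for large events.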

Using CloudWatch, teams achieve observability into their systems, which closes the feedback loop of DevOps. Deployments done via CodeDeploy can be monitored via CloudWatch to ensure everything is operating smoothly. If something goes wrong, CloudWatch alarms can quickly alert the team or even trigger rollback scripts. This constant monitoring means issues can be detected and resolved faster, contributing to higher reliability of applications even as release frequency increases.

(For DevOps teams, CloudWatch is like the nervous system of your AWS environment – it senses and reports the state of all parts of the system. By leveraging CloudWatch dashboards and alarms, both startups and enterprises can gain actionable insights and ensure that the AWS services DevOps engineers have put in place are delivering the desired outcomes in production.)

Containerized Deployments with Amazon ECS and EKS

Modern application architectures often rely on containers and microservices. AWS offers two powerful container orchestration services – Amazon ECS (Elastic Container Service) and Amazon EKS (Elastic Kubernetes Service) – which make it easier to deploy and manage containerized applications at scale.

Amazon ECS is AWS’s native container orchestration service. It’s a fully managed service that helps you deploy, manage, and scale Docker containers in the cloud. ECS abstracts away the complexity of setting up cluster management infrastructure. You can run ECS in two modes: one that uses EC2 instances as the underlying hosts for containers, or AWS Fargate, which is a serverless mode where AWS manages the compute for you. Amazon ECS is deeply integrated with other AWS services (like IAM for security, ELB for load balancing, ECR for container images, CloudWatch for logging) to provide an easy-to-use solution for running containers. In short, ECS allows you to run containerized applications without having to install your own container orchestrator – AWS handles the heavy lifting of cluster management.
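An ECS service runs copies of a task definition, which describes the containers, their images, and the CPU and memory they receive. Below is a minimal Fargate-oriented sketch of that structure mirrored as a Python dict, plus a hypothetical `fargate_problems` helper that checks two Fargate requirements (the `awsvpc` network mode and task-level CPU and memory). The family, container name, image URI, and sizes are placeholders.

```python
# Shape of an ECS task definition targeting Fargate (values are placeholders).
# A real Fargate task pulling from private ECR also needs an executionRoleArn (omitted here).
TASK_DEFINITION = {
    "family": "web-app",
    "requiresCompatibilities": ["FARGATE"],
    "networkMode": "awsvpc",
    "cpu": "256",     # 0.25 vCPU
    "memory": "512",  # MiB
    "containerDefinitions": [
        {
            "name": "web",
            "image": "<account-id>.dkr.ecr.<region>.amazonaws.com/web-app:1.0.0",
            "essential": True,
            "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
        }
    ],
}


def fargate_problems(task_def: dict) -> list[str]:
    """Flag a few common mistakes that stop a task definition from running on Fargate."""
    problems: list[str] = []
    if "FARGATE" in task_def.get("requiresCompatibilities", []):
        if task_def.get("networkMode") != "awsvpc":
            problems.append("Fargate tasks must use the awsvpc network mode")
        if "cpu" not in task_def or "memory" not in task_def:
            problems.append("Fargate tasks need task-level cpu and memory")
    if not task_def.get("containerDefinitions"):
        problems.append("at least one container definition is required")
    return problems
```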

Amazon EKS, on the other hand, is AWS’s managed Kubernetes service. Kubernetes is an open-source container orchestration system used by many companies. With Amazon EKS, you get a fully managed Kubernetes control plane, so you can run Kubernetes clusters on AWS without managing the control plane nodes yourself. Beyond AWS Regions, EKS Anywhere lets you run EKS-compatible clusters on your own on-premises infrastructure. AWS takes care of control plane management, automatically handling tasks like patching, ensuring high availability, and scaling the control plane components. You can then deploy your containerized applications to EKS using standard Kubernetes tools (kubectl, Helm, etc.), and EKS uses AWS resources under the hood (EC2 or Fargate for running pods, IAM for security, and so on).

So when should you use ECS vs EKS? It often comes down to familiarity and requirements. ECS is simple and opinionated – great if you want a no-frills, AWS-integrated way to run containers. EKS is Kubernetes-compatible – ideal if you need portability or are already invested in the Kubernetes ecosystem and want to migrate workloads to AWS without re-architecting. Both services are widely used in AWS DevOps scenarios. In fact, many AWS DevOps pipelines include steps to build container images and deploy them on ECS or EKS:

- For ECS, AWS CodePipeline can directly trigger a deployment to Amazon ECS. You might use CodeBuild to build a Docker image and push it to Amazon ECR (Elastic Container Registry), then have CodeDeploy (with an ECS blue/green deployment configuration) or the ECS service scheduler pick up the new image and deploy it. AWS CodeDeploy integrates with Amazon ECS to support blue/green deployments for ECS services behind a load balancer, making rolling out a new container version quite straightforward (CodeDeploy handles shifting traffic to the new task set and verifying health before terminating the old task set).

- For EKS, because it is Kubernetes, you might incorporate a step in CodePipeline that uses a containerized tool (like a CodeBuild job running kubectl or a Helm chart) to apply changes to your cluster. For example, after building a new Docker image and pushing to ECR, a pipeline can update a Kubernetes Deployment to use the new image. While AWS doesn’t (at this time) have a native “CodeDeploy for EKS”, the flexibility of CodePipeline means you can integrate Kubernetes deployment commands or even use additional tools like Argo CD or Flux in concert with EKS for GitOps style continuous delivery.
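In both flows, after pushing to ECR the build step mostly needs to tell the deploy step which image to run. The sketch below produces the two hand-off formats: for the CodePipeline ECS standard deploy action, an `imagedefinitions.json` file listing container name and image URI; for EKS, an updated Deployment manifest that a CodeBuild step could then apply with `kubectl apply -f`. The container name, manifest, and image URIs are hypothetical.

```python
import copy
import json


def image_definitions(container_name: str, image_uri: str) -> str:
    """Contents of imagedefinitions.json, read by the CodePipeline ECS (standard) deploy action."""
    return json.dumps([{"name": container_name, "imageUri": image_uri}])


def with_new_image(deployment: dict, container_name: str, image_uri: str) -> dict:
    """Return a copy of a Kubernetes Deployment manifest with one container's image replaced.

    Roughly what `kubectl set image deployment/<name> <container>=<image>` does to the live object.
    """
    updated = copy.deepcopy(deployment)  # leave the caller's manifest untouched
    for container in updated["spec"]["template"]["spec"]["containers"]:
        if container["name"] == container_name:
            container["image"] = image_uri
            return updated
    raise KeyError(f"no container named {container_name!r}")


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web-app"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web-app"}},
        "template": {
            "metadata": {"labels": {"app": "web-app"}},
            "spec": {"containers": [{"name": "web", "image": "web-app:1.0.0"}]},
        },
    },
}

new_uri = "<account-id>.dkr.ecr.<region>.amazonaws.com/web-app:1.1.0"
print(image_definitions("web", new_uri))
print(with_new_image(deployment, "web", new_uri)["spec"]["template"]["spec"]["containers"][0]["image"])
```

Changing the pod template's image is what prompts Kubernetes to start a rolling update of the Deployment once the manifest is applied.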

In both cases, AWS provides a robust platform for running containers in production with minimal hassle. These services handle tasks like scheduling containers onto servers, scaling them based on load, and recovering from failures, all essential for keeping modern microservices applications running smoothly. From a DevOps perspective, ECS and EKS allow teams to package their applications into containers (ensuring consistency across environments) and then deploy and manage them with ease. Combined with the earlier tools (CodeBuild, CodePipeline, etc.), you can create a fully automated pipeline that goes from code commit to container deployment on ECS/EKS, enabling rapid and reliable delivery for containerized apps.

(By leveraging Amazon ECS or EKS, organizations can adopt containers as part of their DevOps strategy without needing to stand up complex orchestration systems themselves. This is particularly helpful for startups looking to innovate quickly with microservices, as well as enterprises aiming to modernize legacy apps by shifting to containers. AWS provides the container management, and you plug it into your DevOps pipeline – achieving scalability and consistency at the infrastructure level to complement your continuous integration and delivery processes.)

Where AWS DevOps Services Stop—and Cloudchipr Picks Up

While AWS supplies the building blocks, many organizations still need hands-on guidance to architect, optimize, and operate their pipelines. That’s where Cloudchipr comes in.

Cloudchipr delivers comprehensive DevOps services through a certified team, removing the complexities of hiring and onboarding. Our approach includes:

- Day 1: Setup and Automation

  - Implementation of Infrastructure as Code and automation.

  - Provisioning and deployment automation.

  - Establishment of monitoring, metrics collection, and log aggregation.

  - Performance tuning to ensure resource utilization efficiency.

- Day 2: Maintenance and Operation

  - Ensuring reliability and scalability of systems.

  - Providing 24/7 on-call rotation with aggressive SLA support.

  - Automating backup and restore processes.

  - Handling troubleshooting, debugging, and operational incident responses.

With a proven track record of assisting over 100 companies, Cloudchipr’s team of 50+ certified DevOps professionals offers end-to-end support, including 24/7 emergency assistance and a vast knowledge base. Cloudchipr’s customized DevOps strategies are tailored to meet the unique needs of each client, ensuring optimal performance and efficiency.

Conclusion: Bringing It All Together

AWS offers a comprehensive suite of services to support DevOps practices end-to-end. From writing code to building, testing, deploying, and monitoring, the AWS DevOps services we’ve covered are designed to snap together like LEGO bricks. You can start small—say, a single CodePipeline that pushes to one EC2 instance—and later grow into a multi-stage pipeline that provisions infrastructure with CloudFormation, ships containers to ECS or EKS, and keeps an eye on everything through CloudWatch.

One of the biggest advantages of AWS’s DevOps toolkit is flexibility. Use as many (or as few) services as make sense for your team. If your source lives on GitHub, CodePipeline can pull from it. If you already rely on Jenkins, CodePipeline can call it as a build or test action, and CodeBuild can take on build work that Jenkins hands off. AWS fills the gaps and removes undifferentiated heavy lifting without locking you into an all-or-nothing approach, making these tools suitable for organizations at any DevOps maturity level.

For teams new to AWS—or seasoned crews looking to tighten their pipelines—investing time in these services pays dividends: fewer deployment headaches, faster feedback loops, and more time spent building features instead of fixing brittle scripts. Plus, because every component is managed by AWS, you inherit out-of-the-box scalability, security, and high availability—vital for enterprises with mission-critical workloads and equally helpful for lean startups that need to move fast.

Of course, DevOps success isn’t just about tooling; culture and process matter just as much. If you’d like expert guidance on that journey, Cloudchipr’s DevOps as a Service offering can help. Our 50-plus certified engineers design, implement, and operate AWS-native pipelines—handling Day 1 setup (IaC, CI/CD, observability) and Day 2 operations (24/7 on-call, performance tuning, automated backups). Think of Cloudchipr as your hands-on partner in applying AWS best practices so your team can focus on innovation.

In short, AWS supplies the building blocks, and partners like Cloudchipr help you assemble them into a high-velocity delivery engine. Embrace the tools, cultivate the culture, and watch your release velocity—and confidence—soar.
