Getting Started with AWS EMR: Architecture, Pricing, and Best Practices


Introduction

Amazon EMR (Elastic MapReduce) is AWS’s managed big data platform for running Apache Hadoop, Spark, Flink, and other distributed frameworks at scale. It automates cluster provisioning, scaling, and tuning so data teams can focus on analytics instead of infrastructure. With performance-optimized runtimes and tight integration with other AWS services, EMR supports petabyte-scale analytics at a reasonable cost. In this post we cover what AWS EMR is and why it matters, its architecture and deployment options (EC2, EKS, Serverless), tools and features, a comparison to AWS Glue, pricing and cost controls, monitoring and best practices, and real-world use cases.

What Is AWS EMR?

Amazon EMR (formerly Elastic MapReduce) is a managed cluster platform for big data processing. It simplifies running open-source analytics frameworks like Apache Spark, Hadoop, Hive, HBase, Presto/Trino, Flink, and more on AWS to process and analyze large data sets. EMR clusters can leverage YARN to coordinate distributed tasks, and support libraries like Spark SQL, MLlib, and GraphX for machine learning and graph processing. You can interact with EMR using high-level languages (Java, Python with PySpark, HiveQL, etc.) to build data pipelines, data warehouses, or stream processing jobs. In short, AWS EMR provides a managed, scalable environment to run big data frameworks without having to set up or maintain a Hadoop cluster yourself. (It also integrates with AWS Glue’s Data Catalog, Athena, and Redshift Spectrum for unified metadata.)

AWS EMR Architecture and Deployment Models

An EMR cluster typically consists of a master node (coordinator) and one or more core and task nodes running compute frameworks. Core nodes run tasks and host HDFS; task nodes only run tasks. The storage layer can be HDFS on the cluster nodes (ephemeral) or EMRFS on S3 (persistent). EMRFS lets Hadoop read and write files directly in Amazon S3 as if it were a file system. (HDFS on instance store or EBS volumes is also available and is typically faster for intermediate data, but that data is lost when the cluster is terminated.) YARN's ResourceManager runs on the master node and schedules work across the cluster. EMR can use YARN node labels to keep application masters on core nodes, so that losing a Spot task node does not take down a running application.

Deployment Options: EMR supports three main deployment modes:

- EMR on EC2: This is the traditional model where you launch a cluster of EC2 instances. You have full control over instance types, custom AMIs, and software configuration. EMR on EC2 is ideal for long-running clusters and continuous processing tasks that need specific hardware or custom libraries. You can mix On-Demand and Spot Instances in instance fleets for cost-efficiency, and Reserved Instance pricing applies to matching On-Demand usage. For example, EMR on EC2 lets you install custom applications alongside Spark/Trino, and use Spot nodes to greatly cut costs. This mode offers the finest-grained control and is cost-effective for sustained workloads.

- EMR on EKS: EMR on Kubernetes (EKS) runs Spark and other frameworks in containers on your Amazon EKS clusters. Instead of managing separate EMR clusters, you submit EMR jobs to pods in an existing EKS cluster. EMR builds and manages the container images for Spark/Hadoop automatically. This approach improves resource utilization and simplifies operations, since you can run EMR analytics workloads alongside other containerized apps on the same EKS cluster. It’s well-suited for organizations already using Kubernetes. You can use EMR Studio or AWS CLI/Airflow to submit jobs to EMR on EKS, and benefit from shared Kubernetes tooling.

- EMR Serverless: EMR Serverless provides a fully serverless Spark/Hive environment. You create an EMR Serverless application (specifying the EMR release and framework) and simply submit jobs; you do not provision any EC2 cluster. EMR Serverless automatically scales compute up or down for each job and shuts down resources when the job finishes. This avoids over-/under-provisioning. It is ideal for ad-hoc analysis or interactive notebooks where you need fast startup and no cluster management. You still get EMR benefits (optimized runtimes, concurrency), but pay only for CPU, memory, and storage consumed by your jobs. (For example, Glue-like ETL use cases often use EMR Serverless to run Spark jobs without any cluster setup overhead.)

Each deployment mode has its place. EMR on EC2 offers full cluster control (good for heavy custom workloads), EMR on EKS provides Kubernetes-native analytics, and EMR Serverless gives infrastructure-free processing.
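To make the difference between the job-based modes more concrete, here is a minimal sketch of the request payloads you might build for EMR Serverless and EMR on EKS. It only constructs dictionaries; the helper names, ARNs, S3 paths, and Spark settings are illustrative assumptions, and the release label is just an example. Sending the requests would additionally need boto3 and AWS credentials.

```python
# Sketch only: builds request payloads, does not call AWS.
# Helper names, ARNs, bucket paths and Spark settings are hypothetical.

def build_serverless_job_run(application_id, execution_role_arn,
                             script_uri, log_uri, args=()):
    """Keyword arguments for the EMR Serverless StartJobRun API."""
    return {
        "applicationId": application_id,
        "executionRoleArn": execution_role_arn,
        "jobDriver": {
            "sparkSubmit": {
                "entryPoint": script_uri,
                "entryPointArguments": list(args),
                # Example tuning values, not recommendations.
                "sparkSubmitParameters": "--conf spark.executor.memory=4g",
            }
        },
        "configurationOverrides": {
            "monitoringConfiguration": {
                "s3MonitoringConfiguration": {"logUri": log_uri}
            }
        },
    }


def build_eks_job_run(virtual_cluster_id, execution_role_arn,
                      script_uri, release_label="emr-6.15.0-latest", args=()):
    """Keyword arguments for the EMR on EKS (emr-containers) StartJobRun API."""
    return {
        "name": "example-spark-job",
        "virtualClusterId": virtual_cluster_id,
        "executionRoleArn": execution_role_arn,
        "releaseLabel": release_label,
        "jobDriver": {
            "sparkSubmitJobDriver": {
                "entryPoint": script_uri,
                "entryPointArguments": list(args),
            }
        },
    }


# With boto3 installed and credentials configured, the payloads could be sent as:
#   boto3.client("emr-serverless").start_job_run(**serverless_request)
#   boto3.client("emr-containers").start_job_run(**eks_request)
```

Neither request mentions instance types or node counts, which is the practical difference from EMR on EC2: Serverless sizes compute per job, and on EKS capacity comes from the Kubernetes cluster behind the virtual cluster.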

AWS EMR Tools and Features

Amazon EMR comes with a rich set of tools and features to help build, manage, and monitor big data workloads:

- Wide Framework Support: EMR runs Apache Hadoop, Spark, Tez, Flink, HBase, Hive, Pig, Presto/Trino, and more. It supports popular libraries (Spark MLlib, GraphX, Hive streaming, etc.) and storage formats. Notably, EMR supports modern table formats like Apache Iceberg, Apache Hudi, and Delta Lake to speed up analytics. You can write jobs in Java, Scala, Python, R, or SQL on these frameworks.

- EMRFS (S3 Integration): EMR includes EMRFS, a Hadoop-compatible file system client for Amazon S3. This lets you treat S3 as the main data lake, reducing the need for expensive HDFS storage. Because Amazon S3 itself now provides strong read-after-write consistency, jobs reading through EMRFS see newly written objects without extra consistency tooling.

- EMR Studio & Notebooks: Amazon EMR Studio is a managed JupyterLab IDE for EMR. It provides an interactive environment to develop, visualize, and debug Spark applications (supporting R, Python, Scala, PySpark) running on EMR clusters. You can launch clusters on-demand from Studio, browse data catalog tables with the SQL editor, and share notebooks in real-time among team members. EMR also has EMR Notebooks (Jupyter notebooks attached to clusters) for ad-hoc exploration.

- Steps and Workflow Integration: EMR lets you submit jobs as steps (e.g. spark-submit tasks, Hive scripts) either at cluster creation or to running clusters. Steps run sequentially by default (newer releases can also run several steps concurrently), and EMR records their status. You can orchestrate EMR via AWS Step Functions, Amazon Managed Workflows for Apache Airflow (MWAA), or other schedulers. EMR also integrates with the AWS Glue Data Catalog for schema management.

- Managed Scaling and Auto-termination: EMR can automatically resize clusters with Managed Scaling. It continuously monitors workload metrics and adds/removes core/task instances to optimize cost and performance. You can define min/max limits and policies. EMR can also auto-terminate idle clusters (using the IsIdle CloudWatch metric or console setting) to save costs.

- Security and Access: EMR integrates with IAM for authentication and authorization. You can launch clusters in VPCs, use instance IAM roles, and encrypt data in S3, HDFS, or EBS volumes. EMR Serverless runs each application in its own secure VPC environment.

- Observability and Debugging: EMR provides rich monitoring via CloudWatch (see next section) and application UIs. For Spark jobs, you get a Spark History Server UI; for Hadoop, you get YARN/HDFS web UIs on the master node. EMR also allows you to archive logs to S3 (per step and Hadoop component logs). Since EMR 6.9.0, clusters automatically archive logs to S3 on scale-down so logs persist after nodes terminate.

- Data Tools Integration: EMR works with other AWS big data services. You can use the AWS Glue Data Catalog as the Hive metastore for EMR. You can push processed data to Amazon S3 data lakes, Amazon Redshift, or DynamoDB, and query it with services like Amazon Athena. Kinesis Data Streams can feed streaming jobs in EMR. EMR can also run on AWS Outposts for on-premises extensions.

In summary, EMR’s features (from file system to IDE) are designed to simplify large-scale data processing: you get managed Hadoop/Spark infrastructure plus development and monitoring tools, without the manual setup.
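As a rough illustration of the steps mechanism mentioned above, the sketch below builds the definition of a Spark step for an EMR on EC2 cluster. The helper name, step name, and S3 path are hypothetical; the dictionary follows the step structure accepted by the EMR AddJobFlowSteps API, where `command-runner.jar` is used to run `spark-submit` on the cluster.

```python
# Sketch only: builds a step definition, does not call AWS.
# The helper name and the example S3 path are hypothetical.

ALLOWED_FAILURE_ACTIONS = {"TERMINATE_CLUSTER", "CANCEL_AND_WAIT", "CONTINUE"}


def build_spark_step(name, script_uri, script_args=(), on_failure="CONTINUE"):
    """Return one step definition that runs spark-submit via command-runner.jar."""
    if on_failure not in ALLOWED_FAILURE_ACTIONS:
        raise ValueError(f"unsupported ActionOnFailure: {on_failure}")
    return {
        "Name": name,
        "ActionOnFailure": on_failure,
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": [
                "spark-submit",
                "--deploy-mode", "cluster",
                script_uri,
                *script_args,
            ],
        },
    }


step = build_spark_step(
    "nightly-aggregation",
    "s3://example-bucket/jobs/aggregate.py",
    ["--date", "2024-01-01"],
)

# With boto3 installed and credentials configured, the step could be submitted as:
#   boto3.client("emr").add_job_flow_steps(JobFlowId=cluster_id, Steps=[step])
```

Choosing `CONTINUE` lets later steps run even if this one fails; `CANCEL_AND_WAIT` or `TERMINATE_CLUSTER` may fit better when downstream steps depend on its output.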

AWS EMR vs AWS Glue


People often confuse AWS EMR and AWS Glue because both can run Apache Spark, both promise “serverless” simplicity somewhere in their story, and both sit in the big-data corner of the AWS toolbox. Under the hood, though, they tackle different stages of the data lifecycle and expose very different operating models:

AWS Glue is a serverless data-integration and ETL service that comes with its own Data Catalog. It crawls and classifies data sources, offers a drag-and-drop (or code-based) ETL designer in Glue Studio, and executes Spark jobs on demand using Data Processing Units (DPUs). You never touch infrastructure; you pay per second for each job’s runtime plus small catalog storage fees. Glue excels when you need a low-ops, pay-as-you-go ETL engine with built-in schema management.

AWS EMR, by contrast, is a managed big-data computing platform. You spin up clusters on EC2, run Spark on EKS, or skip servers entirely with EMR Serverless—but you still decide the software stack, instance types, and scaling policies. EMR shines when you require fine-grained control (custom libraries, tuned JVM flags, mixed Spot/On-Demand fleets) or need to support a diverse slate of workloads beyond ETL: large-scale batch analytics, interactive SQL, streaming pipelines, machine learning, graph processing, and more. Billing is tied to cluster resources (EMR surcharge + EC2/EBS) or, in serverless mode, to vCPU/memory-seconds consumed.

Rule of thumb:

- Choose AWS Glue when you want a simple, fully serverless ETL service with automatic metadata cataloging.

- Choose AWS EMR when you need a flexible, customizable analytics environment, whether that means long-running clusters, Kubernetes-native Spark jobs, or on-demand serverless applications.

Remember, Glue’s Data Catalog can double as EMR’s Hive metastore, giving you unified schemas no matter which engine you run on. In short: Glue is the automated, opinionated ETL powerhouse; EMR is the heavyweight, do-it-all big-data workhorse.

AWS EMR Pricing and Cost Management

Pricing: Amazon EMR pricing is straightforward: you pay per-second for EMR instances (minimum 1 minute), plus the underlying Amazon EC2 instance and EBS storage costs. For example, a 10-node cluster running 10 hours costs the same as a 100-node cluster running 1 hour (all else equal). EMR charges differ by deployment: EMR on EC2 adds an EMR service fee on top of EC2/EBS pricing, while EMR Serverless bills only for compute resources consumed (vCPU, memory, storage per second). Standard AWS data transfer and IPv4 charges may apply.
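The node-hours arithmetic behind that example can be sketched in a few lines. The hourly rates below are made-up placeholders rather than real AWS prices, and the function ignores EBS, data transfer, and Spot discounts; it only models per-second billing with a one-minute minimum per instance.

```python
# Sketch only: the rates are hypothetical placeholders, not AWS list prices.

def cluster_cost(nodes, runtime_seconds, ec2_rate_per_hour, emr_rate_per_hour):
    """Estimate EMR on EC2 compute cost with per-second billing.

    Each instance is billed for at least 60 seconds, and the EMR service
    fee is added on top of the EC2 instance price.
    """
    billed_seconds = max(runtime_seconds, 60)
    billed_hours = billed_seconds / 3600
    return nodes * billed_hours * (ec2_rate_per_hour + emr_rate_per_hour)


EC2_RATE = 0.20  # hypothetical USD per instance-hour
EMR_RATE = 0.05  # hypothetical USD per instance-hour

small_and_slow = cluster_cost(10, 10 * 3600, EC2_RATE, EMR_RATE)
large_and_fast = cluster_cost(100, 1 * 3600, EC2_RATE, EMR_RATE)

print(f"10 nodes for 10 hours: ${small_and_slow:.2f}")
print(f"100 nodes for 1 hour:  ${large_and_fast:.2f}")
```

Both configurations consume 100 instance-hours, so the estimate comes out the same. In practice the larger cluster only finishes in a tenth of the time if the job scales close to linearly.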

Cost Management Strategies:

- Spot and Reserved Instances: Use EC2 Spot Instances for task nodes to cut compute costs (AWS advertises savings of up to 90% off On-Demand prices). When requesting Spot capacity you can optionally set a maximum price; Spot no longer uses a bidding model, and you pay the current Spot price up to that cap. For core nodes where interruption isn’t acceptable, use On-Demand Instances, optionally covered by Reserved Instances. You can also use instance fleets to mix instance types and purchase options for flexibility.

- Managed Scaling: Enable EMR Managed Scaling so the cluster automatically adds or removes instances as workload changes, optimizing cost and performance. This avoids over-provisioning during idle times.

- S3 as Data Store: Keep persistent data in Amazon S3 via EMRFS rather than HDFS. S3 storage (≈$0.023/GB-month) is much cheaper than replicated EBS/HDFS storage. EMRFS allows independent scaling of compute and storage. Store data in compressed, partitioned columnar formats (e.g. Apache Parquet or ORC) to reduce I/O and S3 requests.

- Cluster Auto-Termination: Configure clusters to auto-terminate when idle, or launch clusters only for the duration of a batch workload. Avoid leaving idle clusters running. Use an auto-termination policy or a CloudWatch alarm on the IsIdle metric to catch idle clusters.

- Tagging and Cost Visibility: Tag EMR clusters with project or team tags, and use AWS Cost Explorer or AWS Cost and Usage Reports to track spend. AWS provides guidance on generating detailed EMR cost reports with tags.

- Right-sizing: Choose appropriate instance families (compute-optimized for CPU-heavy jobs, memory-optimized for in-memory Spark, etc.) and instance counts for your workload. Newer instance types (e.g. AWS Graviton/Arm) may offer better price/performance.

- Serverless for Ad-hoc: For sporadic or unpredictable workloads, use EMR Serverless so you only pay per job. It eliminates the need to run a cluster 24/7.

By combining these strategies (Spot, autoscaling, data-lake storage, tagging), organizations keep their EMR costs under control while still scaling to large workloads.
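Several of these controls can be declared together when the cluster is created. The following sketch builds a cluster request that combines an On-Demand core fleet, a Spot task fleet, managed scaling limits, an idle auto-termination policy, and a cost-allocation tag. The helper name, instance types, capacities, tag, and release label are illustrative assumptions, and the role names are the EMR default roles, which may differ in your account.

```python
# Sketch only: builds a request payload, does not call AWS.
# Instance types, capacities, tag values and the release label are examples.

def build_cost_aware_cluster(name, log_uri, subnet_id, idle_timeout_seconds=3600):
    """Keyword arguments for the EMR RunJobFlow API using instance fleets."""
    return {
        "Name": name,
        "ReleaseLabel": "emr-6.15.0",
        "Applications": [{"Name": "Spark"}],
        "LogUri": log_uri,
        "ServiceRole": "EMR_DefaultRole",
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "Tags": [{"Key": "team", "Value": "analytics"}],
        "Instances": {
            "Ec2SubnetIds": [subnet_id],
            "KeepJobFlowAliveWhenNoSteps": True,
            "InstanceFleets": [
                {   # Coordinator node, kept On-Demand.
                    "Name": "primary",
                    "InstanceFleetType": "MASTER",
                    "TargetOnDemandCapacity": 1,
                    "InstanceTypeConfigs": [{"InstanceType": "m5.xlarge"}],
                },
                {   # Core nodes hold HDFS, so keep them On-Demand.
                    "Name": "core",
                    "InstanceFleetType": "CORE",
                    "TargetOnDemandCapacity": 2,
                    "InstanceTypeConfigs": [
                        {"InstanceType": "m5.xlarge", "WeightedCapacity": 1},
                    ],
                },
                {   # Task nodes are stateless, so Spot is a reasonable fit.
                    "Name": "task",
                    "InstanceFleetType": "TASK",
                    "TargetSpotCapacity": 4,
                    "InstanceTypeConfigs": [
                        {"InstanceType": "m5.xlarge", "WeightedCapacity": 1},
                        {"InstanceType": "m5a.xlarge", "WeightedCapacity": 1},
                    ],
                    "LaunchSpecifications": {
                        "SpotSpecification": {
                            "TimeoutDurationMinutes": 10,
                            "TimeoutAction": "SWITCH_TO_ON_DEMAND",
                            "AllocationStrategy": "capacity-optimized",
                        }
                    },
                },
            ],
        },
        "ManagedScalingPolicy": {
            "ComputeLimits": {
                "UnitType": "InstanceFleetUnits",
                "MinimumCapacityUnits": 2,
                "MaximumCapacityUnits": 10,
                "MaximumOnDemandCapacityUnits": 2,
                "MaximumCoreCapacityUnits": 2,
            }
        },
        # Terminate the cluster after this many idle seconds.
        "AutoTerminationPolicy": {"IdleTimeout": idle_timeout_seconds},
    }


# With boto3 installed and credentials configured:
#   boto3.client("emr").run_job_flow(**build_cost_aware_cluster(...))
```

Capping `MaximumOnDemandCapacityUnits` and `MaximumCoreCapacityUnits` at the core fleet size means any scale-out beyond the baseline lands on Spot task capacity, which is where interruptions are cheapest to absorb.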

AWS EMR Monitoring and Best Practices

Monitoring and observability are critical for EMR reliability and performance. Key practices include:

- CloudWatch Metrics: EMR automatically publishes cluster metrics (e.g. RunningMapTasks, HDFSUtilization, IsIdle) to CloudWatch every five minutes. These metrics incur no extra charge and are retained for 63 days. Use CloudWatch dashboards and alarms to watch for anomalies: for example, alarm on IsIdle to shut down idle clusters, or on HDFSUtilization to trigger adding nodes.

- Step and Application Monitoring: Track the status of steps using the EMR console, CLI or SDK. Steps typically move through PENDING → RUNNING → COMPLETED, or end in FAILED or CANCELLED if something goes wrong. You can describe steps (via aws emr describe-step) to see progress. For Spark jobs, review the Spark History Server UI (accessible via the EMR console). YARN and Hadoop web UIs on the master node show detailed logs for tasks and components.

- Logging to S3 or CloudWatch: Archive logs for debugging. Configure EMR to archive logs to S3 – this persists logs beyond the cluster life cycle. For EMR Serverless, enable sending driver and application logs to CloudWatch Logs; you can live-tail logs or use CloudWatch Logs Insights for analysis. (Just note that enabling real-time CloudWatch logging can add a small overhead on job runtime.) Always secure and rotate logs.

- Collect Comprehensive Logs (Serverless): For EMR Serverless apps, AWS advises collecting logs from all components (driver, application, AWS Glue data catalog, etc.) to debug multi-step failures. Use EventBridge to trigger notifications on job state changes.

- Resource Tracking: Use cluster metrics (such as CPU and memory usage), Ganglia where the release includes it, or Spark event logs for performance tuning. Tune Spark settings (executor memory, shuffle partitions, etc.) to match the workload.

- Best Practices: Use the latest EMR release (security patches and performance improvements). Pre-install libraries in bootstrap actions if needed. Use placement groups or EBS-optimized instances for I/O-intensive jobs. Follow AWS’s EMR best-practice guides for Spark/Hive tuning. (For example, compress intermediate data, cache hot data in memory, and avoid small files.)

- Security and Compliance: Apply IAM policies and security groups. Use SSL for client connections, encrypt data at rest, and isolate networks as needed. EMR supports HIPAA, FedRAMP, and other compliance regimes.
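As one possible way to act on the IsIdle metric mentioned in the list above, this sketch builds the parameters for a CloudWatch alarm that fires when a cluster has reported itself idle for a given number of minutes. The helper name, alarm name, and SNS topic are hypothetical. The alarm itself only notifies the topic; shutting the cluster down would need a subscriber (for example a small function that calls the EMR API) or an auto-termination policy.

```python
# Sketch only: builds alarm parameters, does not call AWS.
# The helper name, alarm name and SNS topic ARN are hypothetical.

METRIC_PERIOD_SECONDS = 300  # EMR publishes cluster metrics every five minutes


def build_idle_alarm(cluster_id, sns_topic_arn, idle_minutes=30):
    """Keyword arguments for the CloudWatch PutMetricAlarm API."""
    if idle_minutes < 5 or idle_minutes % 5 != 0:
        raise ValueError("idle_minutes must be a positive multiple of 5")
    return {
        "AlarmName": f"emr-{cluster_id}-idle",
        "Namespace": "AWS/ElasticMapReduce",
        "MetricName": "IsIdle",
        "Dimensions": [{"Name": "JobFlowId", "Value": cluster_id}],
        "Statistic": "Average",
        "Period": METRIC_PERIOD_SECONDS,
        # Require every five-minute sample in the window to report idle.
        "EvaluationPeriods": idle_minutes // 5,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [sns_topic_arn],
    }


# With boto3 installed and credentials configured:
#   boto3.client("cloudwatch").put_metric_alarm(**build_idle_alarm(...))
```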

By leveraging CloudWatch for metrics and alarms, archiving logs for post-mortems, and following AWS’s published best practices, teams can keep EMR clusters healthy and performant.
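For step monitoring from code rather than the console, a simple polling loop is often enough. The sketch below assumes an EMR client object that exposes `describe_step` the way the boto3 EMR client does. The function name, poll interval, and timeout behaviour are illustrative choices, and boto3 also ships built-in waiters that may suit you better.

```python
import time

# States after which a step will not change any further.
TERMINAL_STATES = {"COMPLETED", "CANCELLED", "FAILED", "INTERRUPTED"}


def wait_for_step(emr_client, cluster_id, step_id,
                  poll_seconds=30, max_polls=120, sleep=time.sleep):
    """Poll a step until it reaches a terminal state and return that state.

    `emr_client` is assumed to behave like boto3.client("emr"); `sleep`
    is injectable so the loop can be exercised without real delays.
    """
    for _ in range(max_polls):
        response = emr_client.describe_step(ClusterId=cluster_id, StepId=step_id)
        state = response["Step"]["Status"]["State"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
    raise TimeoutError(f"step {step_id} still running after {max_polls} polls")


# With boto3 installed and credentials configured:
#   final_state = wait_for_step(boto3.client("emr"), cluster_id, step_id)
#   if final_state != "COMPLETED": inspect the step logs archived in S3
```

Returning the final state instead of raising on failure leaves it to the caller to decide whether a FAILED step should stop a pipeline or just be logged.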

Real-World Use Cases and Customer Stories

AWS EMR is used by many enterprises for analytics at scale. For example:

- Yahoo Advertising: Yahoo migrated its ad analytics pipelines to EMR to handle hundreds of millions of users’ data globally. By running petabyte-scale Spark and Flink jobs on EMR, Yahoo Advertising accelerated its modernization and derived insights faster. The team found EMR cost-effective and scalable for their analytics needs.

- Thomson Reuters: The financial data leader transitioned 3,000 Hadoop/Spark workflows to EMR. They built elastic workflows on EMR, reducing cluster runtimes by 48% and cutting update cycles from 24 hours to 1 hour. EMR helped Thomson Reuters simplify big data processing and innovate faster.

- Foursquare: The location-data company switched to Amazon EMR Serverless. This let Foursquare run analytics without managing clusters, which reduced costs and overhead. In their migration, Foursquare achieved a 45% reduction in operational costs and boosted developer productivity by eliminating cluster setup.

- Mobiuspace: A media-tech company running recommendation algorithms moved to EMR on EKS with Arm-based instances (Graviton). They reported up to 40% better price-performance for Spark workloads. EMR on EKS enabled efficient use of shared Kubernetes infrastructure for their big data.

- Autodesk, McDonald’s, Ancestry, Zillow and many others also use EMR for scalable analytics and cost savings.

These customer stories (from AWS case studies) highlight that EMR can accelerate analytics, improve performance, and lower costs at petabyte scale. (See the AWS Customer Success Stories for more examples.)

Conclusion

AWS EMR is a versatile cloud platform for big data that balances control and convenience. It lets you run familiar frameworks (Spark, Hadoop, Hive, Presto, etc.) on fully managed clusters or serverless environments. EMR supports flexible deployment (EC2, EKS, or Serverless) and integrates deeply with the AWS ecosystem for storage (S3), cataloging (Glue), security (IAM), and monitoring (CloudWatch). The result is petabyte-scale analytics with minimal operational overhead. Key takeaways: EMR simplifies cluster management, offers powerful tools (EMR Studio, managed scaling, diverse frameworks) and a clear pricing model (per-second EMR + EC2/EBS, or pay-per-job Serverless). Customers like Yahoo, Foursquare, Thomson Reuters, and others have used EMR to speed up analytics and cut costs by tens of percent.

To learn more, explore the Amazon EMR Management Guide and AWS tutorials—AWS provides comprehensive EMR documentation to deepen your expertise.

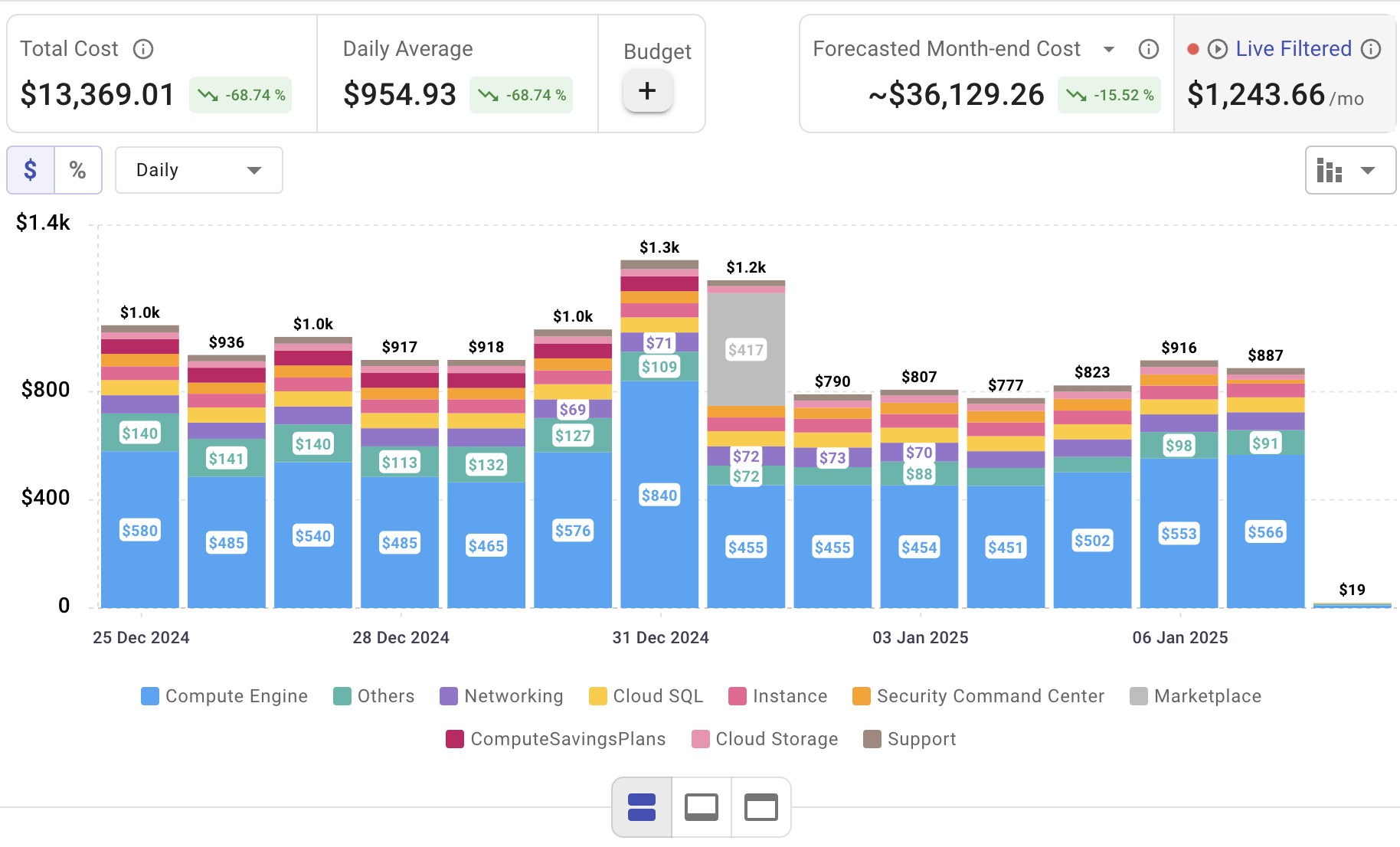

Monitor Your AWS EMR Spend with Cloudchipr

Setting up AWS EMR is only the beginning—actively managing cloud spend is vital to maintaining budget control. Cloudchipr offers an intuitive platform that delivers multi‑cloud cost visibility, helping you eliminate waste and optimize resources across AWS, Azure, and GCP.

Key Features of Cloudchipr

Automated Resource Management:

Easily identify and eliminate idle or underused resources with no-code automation workflows. This ensures you minimize unnecessary spending while keeping your cloud environment efficient.

Receive actionable, data-backed advice on the best instance sizes, storage setups, and compute resources. This enables you to achieve optimal performance without exceeding your budget.

Keep track of your Reserved Instances and Savings Plans to maximize their use.

Monitor real-time usage and performance metrics across AWS, Azure, and GCP. Quickly identify inefficiencies and make proactive adjustments, enhancing your infrastructure.

Take advantage of Cloudchipr’s on-demand, certified DevOps team that eliminates the hiring hassles and off-boarding worries. This service provides accelerated Day 1 setup through infrastructure as code, automated deployment pipelines, and robust monitoring. On Day 2, it ensures continuous operation with 24/7 support, proactive incident management, and tailored solutions to suit your organization’s unique needs. Integrating this service means you get the expertise needed to optimize not only your cloud costs but also your overall operational agility and resilience.

Experience the advantages of integrated multi-cloud management and proactive cost optimization by signing up for a 14-day free trial today: no hidden charges, no commitments.
