The Complete Guide to Amazon EFS: Features, Pricing, and Use Cases


Introduction

In today’s cloud landscape, choosing the right storage solution is critical for performance and scalability. As organizations migrate more workloads to AWS, the need for storage systems that are both flexible and resilient has never been greater. Applications such as web services, containerized microservices, and machine learning pipelines require a storage layer that can adapt to dynamic demand. Amazon Elastic File System (Amazon EFS) – often referred to as AWS EFS – has emerged as a versatile, fully managed file storage service designed for cloud-native applications that need shared file access.

In this guide, we’ll explore what Amazon EFS is, how it works, its key features (such as performance, security, and lifecycle management), AWS EFS pricing, and common use cases. We will also compare AWS EFS vs EBS, EFS vs S3, and EFS vs FSx to help clarify when Amazon EFS is the best choice among AWS storage options.

What is Amazon EFS?

Amazon Elastic File System (EFS) is a fully managed, cloud-native file storage service provided by Amazon Web Services. It offers simple, scalable, and elastic file storage for use with AWS compute resources and on-premises servers. Amazon EFS is designed for workloads that need to share data across multiple instances or containers simultaneously – such as web applications, microservices, content management systems, and analytics pipelines. As a serverless solution, it eliminates the need to provision or manage storage capacity manually. EFS automatically adapts to changing storage demands, growing and shrinking as you add or remove files, and you pay only for what you use. It can seamlessly handle both high-performance needs and long-lived, infrequently accessed data by adjusting to unpredictable access patterns.

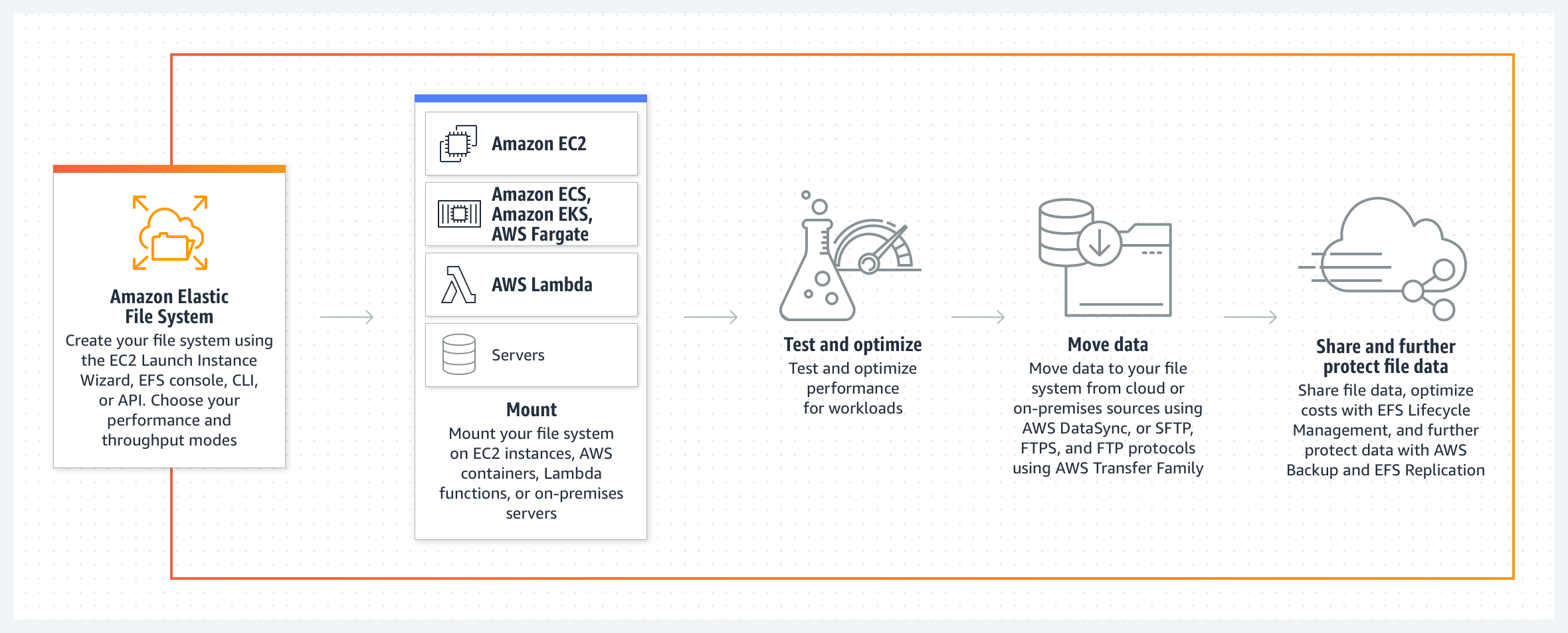

How Amazon EFS Works

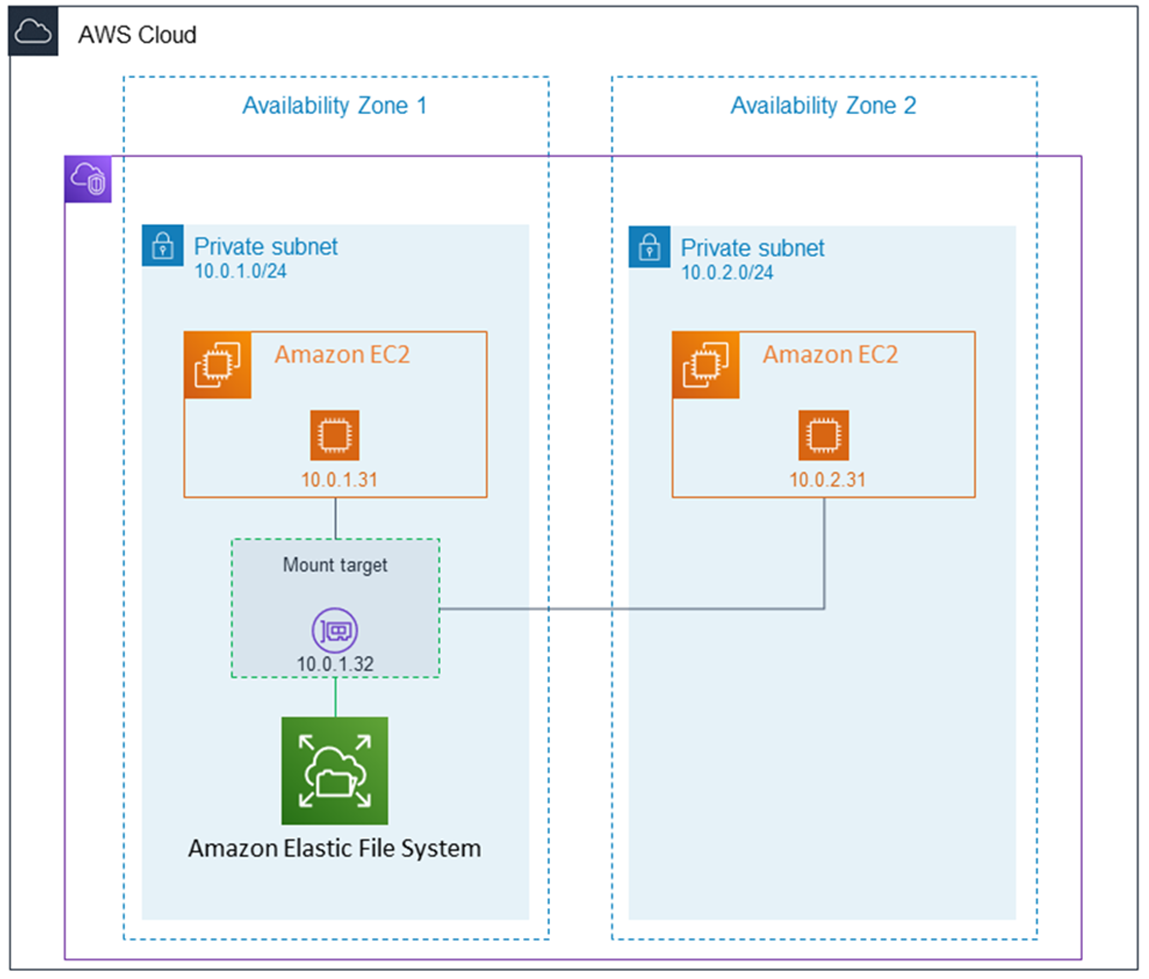

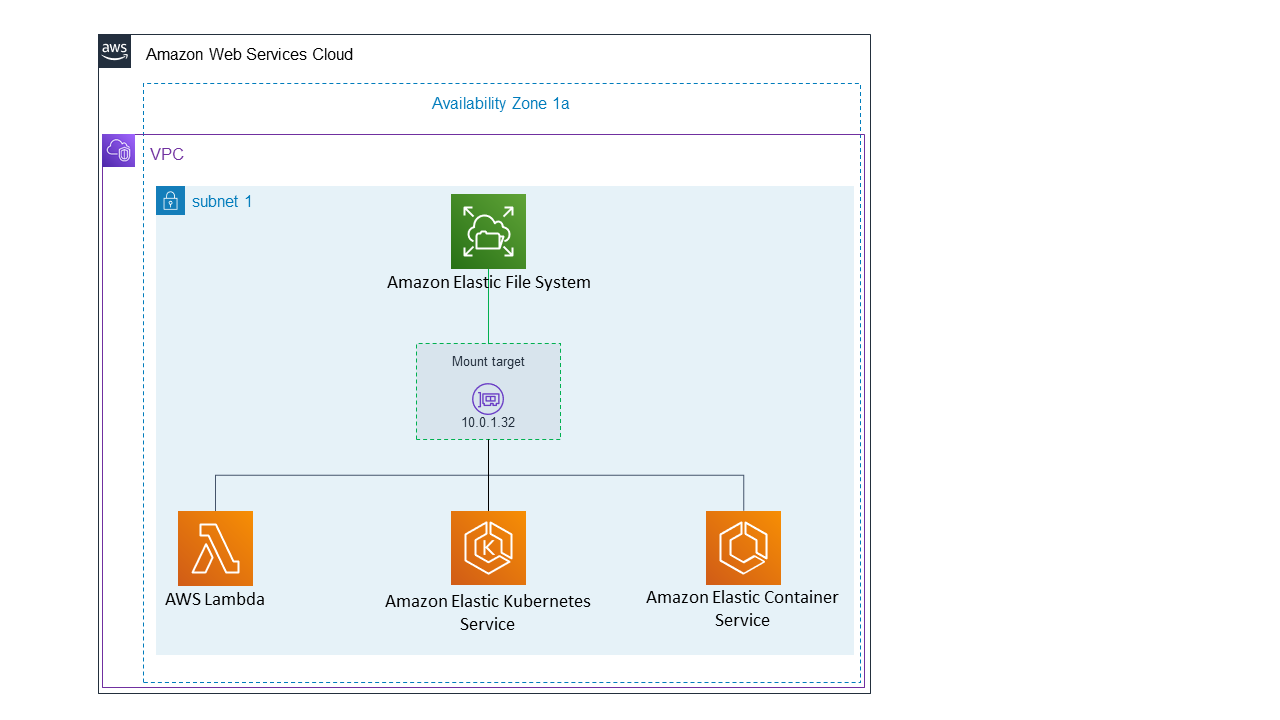

AWS EFS works by exposing a network file system endpoint (using the NFS protocol) that multiple compute services can mount concurrently. Once you create an EFS file system, it can be mounted by Amazon EC2 instances, containers running on Amazon ECS or EKS, and even AWS Lambda functions (via EFS Access Points). This allows many instances and services to read and write to a centralized, shared file system as if it were a local drive. EFS dynamically adjusts its capacity as files are added or removed, requiring no manual scaling. This elasticity ensures performance remains consistent as your storage needs grow.

Because of its broad integration with other AWS services and its ability to support hybrid cloud setups, EFS serves as a foundational component in many modern cloud architectures. In practice, you can mount EFS file systems on multiple EC2 instances (even across Availability Zones) to provide a common data source. The service’s scalability and managed nature mean you don’t have to worry about provisioning storage or performing capacity planning – Amazon EFS handles growth automatically behind the scenes.
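To make the mounting step concrete, here is a minimal Python sketch that assembles the two mount commands commonly used on Linux clients: a plain NFS mount against the file system's DNS name, and a mount through the amazon-efs-utils helper. The file system ID, region, and mount point are placeholders, the helper functions are hypothetical names for this illustration, and the `mount -t efs` form assumes amazon-efs-utils is installed on the client.

```python
import shlex

# NFS mount options commonly recommended for EFS clients.
NFS_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_dns_name(file_system_id: str, region: str) -> str:
    """Return the regional DNS name of an EFS file system."""
    return f"{file_system_id}.efs.{region}.amazonaws.com"


def nfs_mount_command(file_system_id: str, region: str, mount_point: str) -> str:
    """Build a plain NFSv4.1 mount command (no encryption in transit)."""
    source = f"{efs_dns_name(file_system_id, region)}:/"
    return shlex.join(["sudo", "mount", "-t", "nfs4", "-o", NFS_OPTIONS, source, mount_point])


def efs_helper_mount_command(file_system_id: str, mount_point: str, tls: bool = True) -> str:
    """Build a mount command for the amazon-efs-utils mount helper."""
    args = ["sudo", "mount", "-t", "efs"]
    if tls:
        args += ["-o", "tls"]  # ask the helper to encrypt NFS traffic with TLS
    args += [f"{file_system_id}:/", mount_point]
    return shlex.join(args)


if __name__ == "__main__":
    # Placeholder identifiers; substitute your own file system ID and region.
    print(nfs_mount_command("fs-0123456789abcdef0", "us-east-1", "/mnt/efs"))
    print(efs_helper_mount_command("fs-0123456789abcdef0", "/mnt/efs"))
```

Running the same command on every instance that needs the shared data is what gives each of them the same view of the file system.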

Key Features of Amazon EFS

- Elastic and Scalable: Automatically expands and shrinks to meet demand without any manual intervention. EFS can scale from gigabytes to petabytes of data on the fly without disrupting applications.

- High Performance: Offers two performance modes for file systems:

- General Purpose – the default mode, optimized for low latency and ideal for latency-sensitive use cases like web or application servers.

- Max I/O – a mode that supports higher aggregate throughput and operations per second, designed for highly parallel, large-scale workloads (with a slight trade-off in per-operation latency).

- Secure: Provides multiple layers of security. AWS EFS encryption can be enabled to protect data at rest and in transit. Integration with AWS Identity and Access Management (IAM) allows fine-grained control over which clients can access the file system and with what permissions (enforcing AWS EFS permissions at the resource level). Network traffic to EFS can be restricted using VPC security groups and network ACLs. These AWS EFS security features ensure your data is protected both in storage and during transfer.

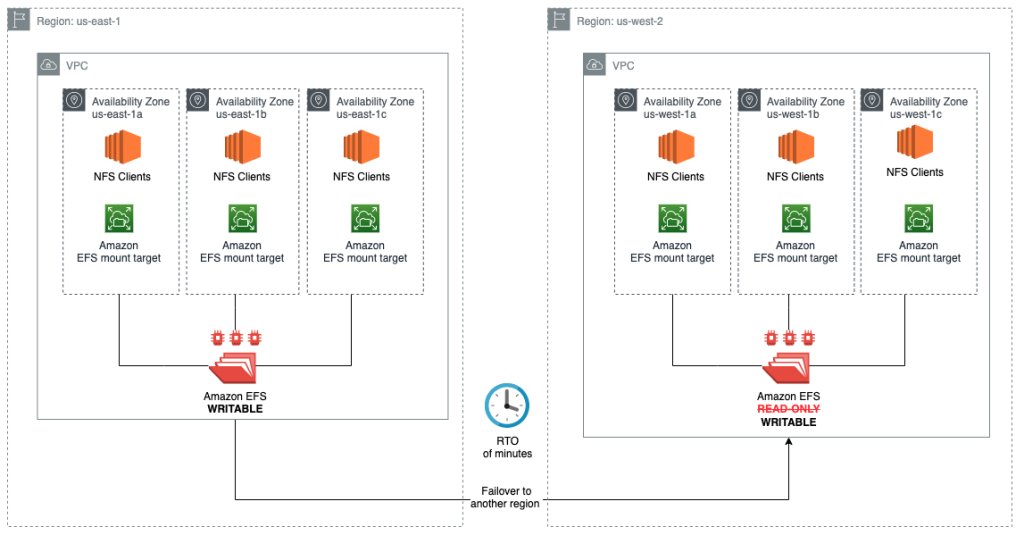

- Durability and Availability: Stores data redundantly across multiple Availability Zones within an AWS region for multi-AZ resilience. A Regional EFS file system is designed for 99.999999999% (11 nines) durability over a given year and can sustain the loss of an entire AZ without data loss. For additional disaster-recovery protection, you can enable AWS EFS replication to automatically keep a copy of your file system in a different AWS Region (with a typical recovery point objective of 15 minutes). One Zone file systems (explained below) trade away this redundancy for cost savings.

- Cost-Effective: Offers multiple storage classes with lifecycle management to optimize cost. For example, data can start in the Standard storage class (frequently accessed) and automatically transition to Infrequent Access (IA) or Archive classes as it becomes less active, using AWS EFS lifecycle management policies. This tiering happens behind the scenes to minimize storage costs while keeping data available when needed. (Standard is highest performance; at the example rates quoted later in this guide, IA costs ~95% less per GB for infrequently accessed files and Archive costs ~97% less for rarely accessed data, at the expense of higher access latencies.)

- Fully Managed: As a fully managed service, EFS handles provisioning, patching, and maintenance of the underlying infrastructure. You do not need to manage file servers or worry about software updates – AWS takes care of all upkeep.

- Backup and Restore Integration: Seamless integration with AWS Backup allows you to configure automatic backups of your EFS file systems for data protection and compliance. This provides point-in-time recovery of files and complements EFS’s multi-AZ durability with an additional layer of protection (including cross-region backups if needed).

- Monitoring and Logging: Built-in integration with monitoring and logging services. You can track file system metrics (like throughput, IOPS, and capacity) via Amazon CloudWatch, and audit EFS API calls via AWS CloudTrail. This makes it easier to observe usage patterns, set alarms for anomalous activity, and ensure compliance by reviewing access logs.
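As an illustration of the monitoring point, the sketch below builds the arguments one might pass to the boto3 CloudWatch client's `put_metric_alarm` call to watch the `PercentIOLimit` metric in the `AWS/EFS` namespace. The alarm name, threshold, and evaluation window are assumptions chosen for the example, and `build_io_limit_alarm_args` is a hypothetical helper, not part of any AWS SDK.

```python
from typing import Any, Dict


def build_io_limit_alarm_args(file_system_id: str, threshold_percent: float = 80.0) -> Dict[str, Any]:
    """Return keyword arguments for a CloudWatch alarm on EFS I/O limit usage."""
    if not 0 < threshold_percent <= 100:
        raise ValueError("threshold_percent must be in (0, 100]")
    return {
        "AlarmName": f"efs-{file_system_id}-percent-io-limit",  # hypothetical naming scheme
        "Namespace": "AWS/EFS",
        "MetricName": "PercentIOLimit",
        "Dimensions": [{"Name": "FileSystemId", "Value": file_system_id}],
        "Statistic": "Maximum",
        "Period": 300,            # seconds per datapoint (assumed)
        "EvaluationPeriods": 3,   # alarm after three consecutive breaches (assumed)
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }
```

With boto3 installed and credentials configured, the result could be passed as `boto3.client("cloudwatch").put_metric_alarm(**args)`.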

File System Types

Amazon EFS offers two types of file systems, allowing you to balance durability and cost requirements:

- Regional File Systems: (Recommended) Your data is stored across multiple Availability Zones in the region, offering the highest durability and availability. This multi-AZ replication means that even if one AZ goes down, your file system remains accessible from the others. Most production workloads use Regional EFS for resilience.

- One Zone File Systems: Data is stored within a single Availability Zone, which lowers costs (~47% cheaper storage than Regional for Standard class) but comes with the trade-off of reduced durability. If that AZ experiences an outage, the file system will be unavailable (and data could be lost in a catastrophic AZ failure). One Zone EFS is suitable for non-critical and dev/test workloads that do not require multi-AZ resilience.

In summary, use Regional EFS for critical applications where high availability is a must, and consider One Zone EFS for cost-sensitive, fault-tolerant workloads (or where you have other backups) that can tolerate AZ-level risks.

Performance and Throughput

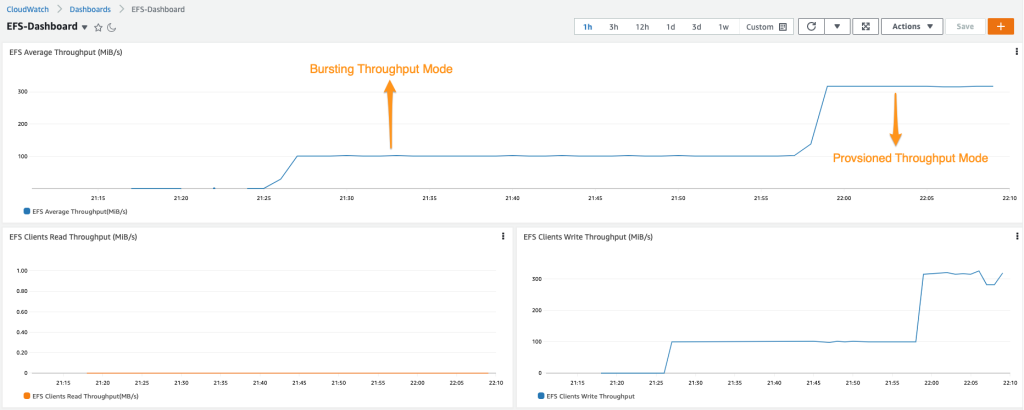

For optimal AWS EFS performance, the service provides two types of performance modes and flexible throughput settings to suit different workload needs:

Performance Modes: Amazon EFS offers two performance modes that determine how it optimizes for latency vs. throughput:

- General Purpose mode – This mode has the lowest per-operation latency and is the default for all EFS file systems. It’s ideal for the majority of workloads, especially those that are latency-sensitive (e.g. web servers, home directories, small file operations). One Zone file systems always use General Purpose mode.

- Max I/O mode – This mode is designed for highly parallelized workloads that need higher aggregate throughput and can tolerate slightly higher latencies. It can scale to higher levels of total operations per second across the file system. Max I/O is typically used for big data, analytics, or log processing jobs with many concurrent accesses. (Note that EFS file systems using Elastic Throughput or One Zone storage do not support Max I/O mode.)

Throughput Modes: In terms of AWS EFS throughput, there are three options for how you pay for and achieve throughput to the file system: Elastic, Bursting, and Provisioned.

- Elastic Throughput – This is the default and recommended throughput mode. In Elastic mode, EFS automatically scales the throughput up or down based on your workload’s demand. You do not pre-provision any throughput; you simply pay for the amount of data read and written each month. This mode is ideal for spiky or unpredictable workloads because performance adjusts dynamically and you aren’t paying for idle capacity.

- Bursting Throughput – In this mode, throughput grows with storage: the more data stored, the higher the baseline throughput. The file system accrues burst credits while it runs below its baseline and spends them to burst to higher throughput for short periods. It suits workloads whose throughput needs scale with the amount of data stored.

- Provisioned Throughput – This mode lets you explicitly purchase a specific throughput capacity (in MiB/s) for your file system, independent of how much data is stored. Provisioned mode is useful for workloads that require consistently high throughput above what burst credits would normally sustain (for example, if your application needs a very high constant throughput but doesn’t store a lot of data, which would otherwise limit bursting). You will pay a fixed monthly rate for whatever throughput level you configure (on top of storage costs). (For example, provisioning 1 MiB/s of throughput costs about $6 per month in the us-east-1 region.) When using Provisioned Throughput, EFS still allows bursting above the provisioned level if you have accumulated burst credits, but you are ensured the provisioned baseline performance at all times.

Most users stick with Elastic Throughput because it simplifies management and typically costs less for variable workloads. You can switch between throughput modes as needed, though AWS enforces a 24-hour waiting period between certain changes, such as switching away from Provisioned mode. Whichever mode you choose, Amazon EFS is designed to deliver consistent high performance for both latency-sensitive applications and high-throughput batch jobs.
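The mode combinations described above can be captured in a small validation helper. The sketch below builds the arguments one might pass to the boto3 EFS client's `create_file_system` call; the parameter names in the returned dictionary (`PerformanceMode`, `ThroughputMode`, `ProvisionedThroughputInMibps`, `AvailabilityZoneName`) follow that API, which accepts `elastic`, `provisioned`, and `bursting` as throughput modes. The function itself, its defaults, and the choice to always enable encryption are assumptions made for illustration.

```python
from typing import Any, Dict, Optional


def build_create_file_system_args(
    creation_token: str,
    performance_mode: str = "generalPurpose",
    throughput_mode: str = "elastic",
    provisioned_mibps: Optional[float] = None,
    one_zone_az: Optional[str] = None,
) -> Dict[str, Any]:
    """Validate a mode combination and return create_file_system keyword arguments."""
    if performance_mode not in ("generalPurpose", "maxIO"):
        raise ValueError(f"unknown performance mode: {performance_mode}")
    if throughput_mode not in ("elastic", "provisioned", "bursting"):
        raise ValueError(f"unknown throughput mode: {throughput_mode}")
    # Max I/O is not available with Elastic Throughput or One Zone file systems.
    if performance_mode == "maxIO" and (throughput_mode == "elastic" or one_zone_az):
        raise ValueError("Max I/O cannot be combined with Elastic Throughput or One Zone")
    # A throughput figure is required for, and only meaningful in, Provisioned mode.
    if (throughput_mode == "provisioned") != (provisioned_mibps is not None):
        raise ValueError("provisioned_mibps must be set if and only if mode is 'provisioned'")

    args: Dict[str, Any] = {
        "CreationToken": creation_token,  # idempotency token chosen by the caller
        "PerformanceMode": performance_mode,
        "ThroughputMode": throughput_mode,
        "Encrypted": True,  # assumption: encrypt at rest by default in this sketch
    }
    if provisioned_mibps is not None:
        args["ProvisionedThroughputInMibps"] = provisioned_mibps
    if one_zone_az:
        args["AvailabilityZoneName"] = one_zone_az  # makes this a One Zone file system
    return args
```

For example, `build_create_file_system_args("demo-token", throughput_mode="provisioned", provisioned_mibps=10)` describes a Regional, General Purpose file system with 10 MiB/s provisioned, while requesting `maxIO` together with `elastic` raises a `ValueError` before any API call is made.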

Storage Classes and Lifecycle Management

Amazon EFS provides multiple storage classes to optimize cost for different data access patterns. AWS EFS lifecycle management automatically transitions files between these classes based on their age or last-accessed time, helping minimize costs without manual intervention. The storage classes are:

- EFS Standard: Backed by SSD storage for frequently accessed data. This tier offers the lowest latency (sub-millisecond to a few milliseconds) for file operations. It is the default class for new files and provides the highest performance, but at the highest cost per GB.

- EFS Infrequent Access (IA): A lower-cost storage class for files that are not accessed often (e.g., historical data or backups). EFS IA storage costs significantly less per GB (around $0.016/GB-month, roughly 95% less than the $0.30 Standard rate in the US East region). When a file in Standard hasn’t been accessed for a set period (e.g. 30 days), EFS can move it to IA automatically. Reading a file from IA incurs a small additional fee per GB (since it’s optimized for infrequent access), but you save on storage costs for data you rarely touch.

- EFS Archive: An even lower-cost tier for rarely accessed data, ideal for long-term retention of cold data. Archive storage is priced about $0.008/GB-month (about 97% less than Standard), making it very cost-effective for archival files. When enabled, lifecycle rules can move files from IA to Archive after they haven’t been accessed for an extended period (e.g. 90 days of no access). Retrieval from Archive is slower (tens of milliseconds first-byte latency), and there are fees to access data in Archive. Archive also has a minimum 90-day retention (deletion before that still incurs 90 days of charges).

Lifecycle management policies tie these classes together: for example, you can set a policy that moves files to IA after 30 days of inactivity, and to Archive after 90 days of inactivity, automatically. When a file in IA or Archive is read, EFS can automatically bring it back to Standard if you configure a policy that transitions files back on access. All of this tiering is transparent to applications – your applications just see a single file system, and EFS handles moving the data behind the scenes. By using lifecycle management, AWS EFS can dramatically lower your storage bills for data that you don’t access frequently, while still keeping the data accessible when needed.

(It’s important to note that EFS IA and Archive have a minimum object size charge of 128 KB – very small files won’t save much by moving to IA or Archive due to this overhead. Also, as best practice, enable lifecycle policies only if the cost saved outweighs any potential access fees when data is retrieved.)
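The 30-day and 90-day example above maps onto the lifecycle configuration roughly as follows. This sketch builds the `LifecyclePolicies` list accepted by the boto3 EFS client's `put_lifecycle_configuration` call, where each entry holds a single transition and day counts are written as values such as `AFTER_30_DAYS`. The helper name and the sanity check that Archive comes later than IA are assumptions of this example.

```python
from typing import Dict, List, Optional

# Day counts the lifecycle API accepts for transitions to IA or Archive.
ALLOWED_DAYS = (1, 7, 14, 30, 60, 90, 180, 270, 365)


def _after_days(days: int) -> str:
    """Translate a day count into the API's enum-style value."""
    if days not in ALLOWED_DAYS:
        raise ValueError(f"days must be one of {ALLOWED_DAYS}")
    return "AFTER_1_DAY" if days == 1 else f"AFTER_{days}_DAYS"


def build_lifecycle_policies(
    ia_after_days: int = 30,
    archive_after_days: Optional[int] = 90,
    return_on_access: bool = True,
) -> List[Dict[str, str]]:
    """Return a LifecyclePolicies list: Standard -> IA -> Archive, optionally back on access."""
    policies = [{"TransitionToIA": _after_days(ia_after_days)}]
    if archive_after_days is not None:
        # Sketch-level sanity check: files should reach IA before Archive.
        if archive_after_days <= ia_after_days:
            raise ValueError("archive_after_days must be greater than ia_after_days")
        policies.append({"TransitionToArchive": _after_days(archive_after_days)})
    if return_on_access:
        # Move a file back to Standard the first time it is read again.
        policies.append({"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"})
    return policies
```

With boto3 available, the list could be applied with `put_lifecycle_configuration(FileSystemId=..., LifecyclePolicies=build_lifecycle_policies())`.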

Security and Access Control

Encryption: Amazon EFS offers robust encryption capabilities to protect your data. You can enable encryption at rest when creating a file system, which uses AWS Key Management Service (KMS) keys to transparently encrypt data on disk. This means that all files are encrypted using either an AWS-managed key or a customer-managed key, and are automatically decrypted when accessed by authorized clients – no application changes needed. Data encryption in transit is also supported: when mounting EFS, you can use the EFS mount helper or stunnel to ensure all NFS traffic is encrypted with TLS 1.2. To enforce encryption in transit, you can attach a file system policy that denies any client connecting without TLS. These encryption features can be used together or separately, and they have minimal impact on performance (encrypting data adds only a small amount of latency overhead).

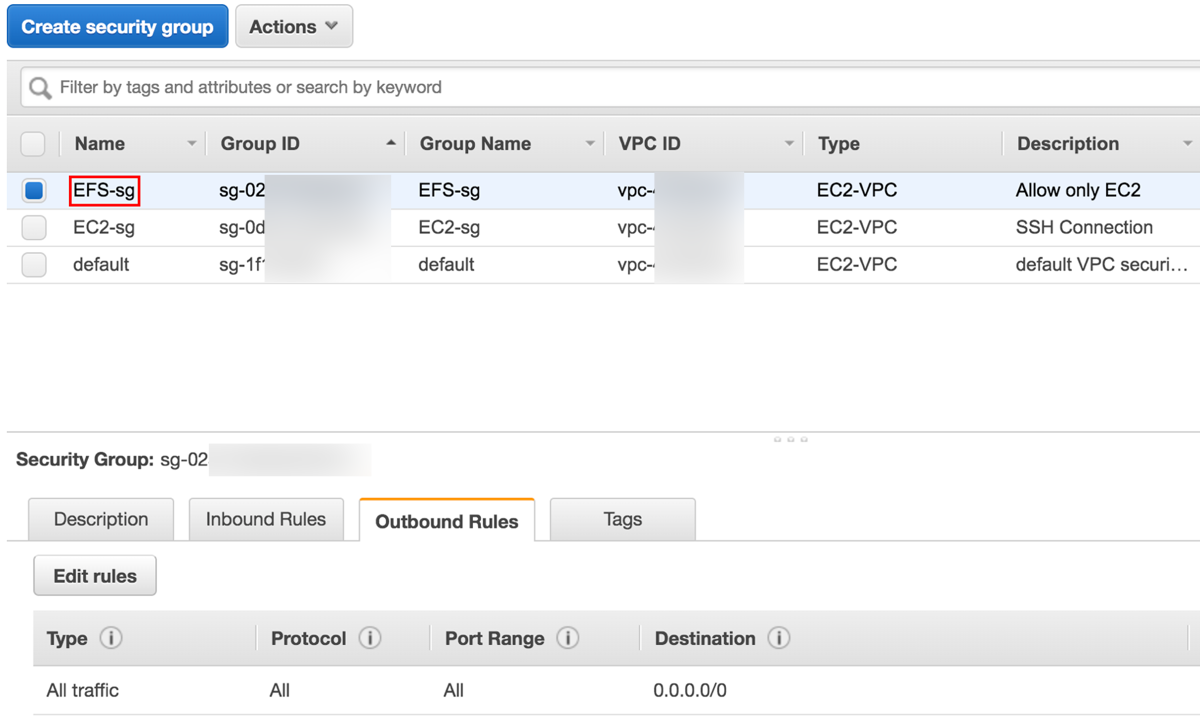

Access Control: Access to an EFS file system can be restricted at multiple levels, integrating with AWS’s security framework for fine-grained control:

- Network-level access: Amazon EFS lives within an AWS VPC, so you use VPC security groups to control inbound and outbound traffic to the EFS mount targets. For example, you can configure the security group to allow NFS connections (TCP port 2049) only from your EC2 instances or from specific IP ranges. This ensures that only authorized clients at the network level can even attempt to mount your file system.

- IAM policies: You can attach IAM policies to the EFS resource to control which AWS principals (users, roles) are allowed to perform actions on the file system (such as mounting it via the NFS client or using EFS Access Points). For instance, you might require that an EC2 instance has a certain IAM role in order to be able to mount the EFS. IAM policies can enforce read-only access or disable root access via EFS, adding an identity-based permission layer on top of network controls. This is useful in multi-tenant scenarios or to ensure compliance (e.g., only specific applications or services can use the file system).

- EFS Access Points: An Access Point is an EFS feature that provides a customizable entry point into your file system. Each access point can enforce a specific POSIX user/group identity and directory for the connecting client. This is powerful for multi-user environments: for example, each application or container can mount the same EFS file system via different access points, and each access point can expose a different directory with certain permissions. Access Points let you easily share a file system between multiple clients while isolating them at the file system level. They also integrate with IAM – you can require that a connection uses a specific IAM role and Access Point to mount the file system. In essence, Access Points help implement AWS EFS permissions in a simplified way for cloud-native apps, avoiding the need to coordinate Linux user IDs across instances.

- POSIX permissions: Within the file system itself, Amazon EFS supports standard POSIX file permissions and ownership, just like a typical Linux file server. This means you can use Unix user/group IDs and permission bits (rwx for owner, group, others) on files and directories to control access at the content level. When using EFS with EC2 or containers, the client’s Linux user ID is respected by EFS for file access (unless overridden by an Access Point). This allows existing applications to use familiar chmod/chown and file ACL practices for controlling access to files.
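To show how an Access Point pins clients to one identity and directory, the following sketch builds the arguments one might pass to the boto3 EFS client's `create_access_point` call. The `PosixUser` and `RootDirectory` structures follow that API; the helper name, the UID/GID values, the directory path, and the default `750` permissions are placeholders chosen for illustration.

```python
from typing import Any, Dict


def build_access_point_args(
    file_system_id: str, uid: int, gid: int, path: str, permissions: str = "750"
) -> Dict[str, Any]:
    """Return create_access_point arguments that pin clients to one identity and directory."""
    if not path.startswith("/"):
        raise ValueError("path must be absolute, e.g. /apps/billing")
    return {
        "FileSystemId": file_system_id,
        # Every request through this access point is performed as this user and group.
        "PosixUser": {"Uid": uid, "Gid": gid},
        "RootDirectory": {
            "Path": path,  # clients see this directory as the file system root
            # Applied if the directory does not exist yet and EFS has to create it.
            "CreationInfo": {"OwnerUid": uid, "OwnerGid": gid, "Permissions": permissions},
        },
    }
```

Creating one access point per application (for instance `/apps/billing` for UID 1001 and `/apps/reports` for UID 1002) lets several services share a file system without seeing each other's directories.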

All of these layers work together to make EFS highly secure. For example, you might restrict EFS network access to only your application servers, enforce that those servers use an IAM role that is allowed to mount the file system (and require encryption in transit), and use an Access Point to constrain each server to a specific directory with certain permissions. By leveraging these features, AWS EFS security can meet stringent enterprise requirements for data protection and access governance. (Additionally, EFS is integrated with AWS CloudTrail, so any API calls or changes to file system settings can be logged and audited as part of your security compliance program.)
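The layered example in the paragraph above (a specific role, an access point, and mandatory encryption in transit) could be expressed as a file system policy along these lines. This is a sketch: the ARNs are placeholders, `build_file_system_policy` is a hypothetical helper, and the policy should be reviewed against your own requirements. The action names (`elasticfilesystem:ClientMount`, `elasticfilesystem:ClientWrite`) and the condition keys (`aws:SecureTransport`, `elasticfilesystem:AccessPointArn`) are the ones EFS uses for client authorization.

```python
import json
from typing import Any, Dict, Optional


def build_file_system_policy(
    file_system_arn: str, role_arn: str, access_point_arn: Optional[str] = None
) -> Dict[str, Any]:
    """Allow one role to mount and write, and deny all non-TLS connections."""
    allow: Dict[str, Any] = {
        "Sid": "AllowMountAndWrite",
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        # ClientRootAccess is deliberately omitted, so clients do not get root access.
        "Action": ["elasticfilesystem:ClientMount", "elasticfilesystem:ClientWrite"],
        "Resource": file_system_arn,
    }
    if access_point_arn:
        # Only honor the allow when the client connects through this access point.
        allow["Condition"] = {
            "StringEquals": {"elasticfilesystem:AccessPointArn": access_point_arn}
        }
    deny_plaintext = {
        "Sid": "DenyUnencryptedTransport",
        "Effect": "Deny",
        "Principal": {"AWS": "*"},
        "Action": "*",
        "Resource": file_system_arn,
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
    }
    return {"Version": "2012-10-17", "Statement": [allow, deny_plaintext]}


if __name__ == "__main__":
    policy = build_file_system_policy(
        "arn:aws:elasticfilesystem:us-east-1:111122223333:file-system/fs-0123456789abcdef0",
        "arn:aws:iam::111122223333:role/app-server",  # placeholder role
    )
    print(json.dumps(policy, indent=2))
```

The JSON string produced by `json.dumps` is the form the policy takes when attached to the file system.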

Integration and Hybrid Access

Amazon EFS is built on open standards, which makes it easy to integrate into various environments:

- Protocol support: EFS uses the NFSv4.0 and NFSv4.1 protocols for file access. This means NFSv4-compatible clients (most Linux/Unix systems) can mount an EFS file system using standard tools; Windows instances are not supported. The use of NFS provides a familiar interface – applications see a normal file system that supports typical file operations (read, write, append, directories, symlinks, etc.). Because it’s standard NFS, EFS can be accessed by a wide range of Linux-based operating systems and applications without modification. AWS provides an optimized mount helper (amazon-efs-utils) for Linux, which simplifies mounting (automating TLS setup and recommended NFS mount options).

- AWS service integration: EFS integrates seamlessly with many AWS compute and container services. You can mount EFS on EC2 instances (including auto-scaling groups), attach it to ECS tasks or Fargate containers, and use it with EKS (Kubernetes) pods via the EFS CSI driver. AWS Lambda functions can also use EFS for persistent storage by configuring an Access Point, enabling serverless applications to share state or large reference data. This broad integration means you can use one EFS file system as a common data source across a variety of services. For example, a web server fleet on EC2, a set of Lambda functions, and a batch job on ECS could all concurrently access the same files on EFS (perhaps a set of user uploads or a library of media content).

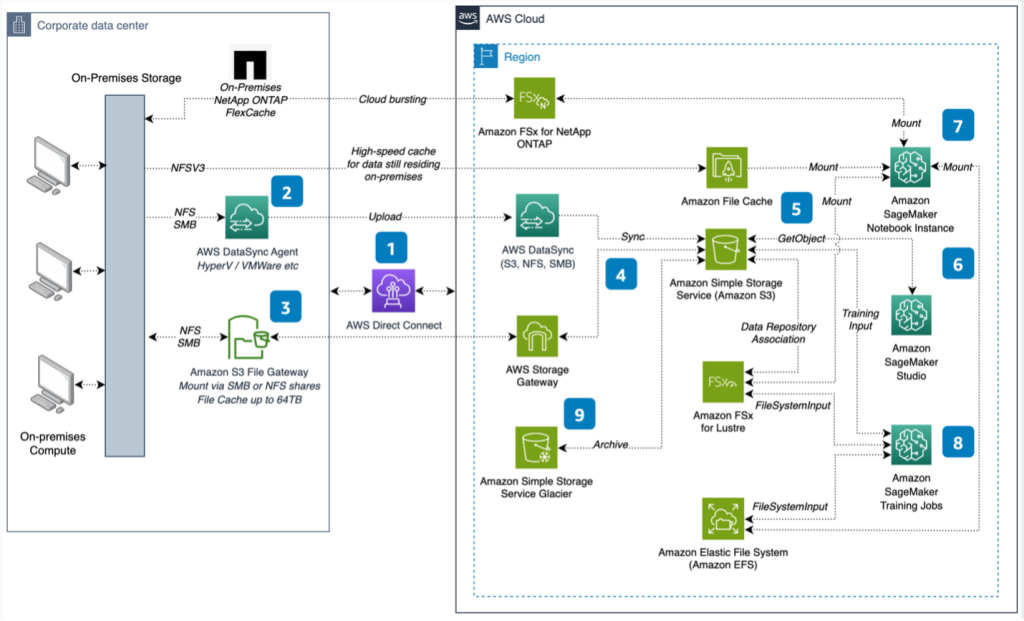

- Hybrid access: Amazon EFS can be accessed from on-premises data centers or other cloud environments as well. If you have AWS Direct Connect or a VPN connection into your VPC, your on-premises servers can mount EFS just like EC2 instances do. This is great for hybrid cloud workflows – for instance, you could migrate an on-prem application to AWS gradually, or use EFS as a central repository accessible by both cloud and on-prem resources. Many organizations use AWS DataSync to simplify moving data into EFS from existing on-prem NAS systems. DataSync can replicate large datasets over the network efficiently (with encryption and incremental sync) so you can populate your EFS file system with minimal downtime. Once in AWS, your data is accessible to all your cloud services via EFS.

- Consistency and locking: Since EFS uses NFSv4, it supports file locking and consistency semantics that traditional applications expect. All EC2 instances or clients mounting an EFS see a coherent view of the file system. This means multiple clients can safely read and write to the same files (with advisory locking if needed to coordinate). This is a major advantage over solutions like S3 for certain workloads, because applications can use file-system semantics (rename operations, append writes, file locks, etc.) which aren’t available in object storage.

Overall, Amazon EFS’s broad compatibility and integration capabilities allow it to act as a “file server in the cloud” for many different scenarios. Whether you’re lifting and shifting an enterprise application that expects a shared file system, or building a cloud-native solution that needs a persistent volume accessible by many microservices, EFS provides the glue to store and share files easily. Its ability to span multiple AZs and even extend on-premises makes it a flexible choice for hybrid architectures as well.
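The file-system semantics mentioned above (append writes and advisory locks) look the same on an EFS mount as on a local disk. The sketch below appends a line to a shared file while holding an exclusive advisory lock via Python's standard `fcntl` module, so that writers on different clients do not interleave. It assumes a Linux or other Unix client; the function name is hypothetical, and the path would be a file under your EFS mount point (for example `/mnt/efs/shared.log`).

```python
import fcntl
import os


def append_with_lock(path: str, line: str) -> None:
    """Append one line to a shared file while holding an exclusive advisory lock."""
    with open(path, "a", encoding="utf-8") as handle:
        # Blocks until any other process holding the lock releases it.
        fcntl.lockf(handle, fcntl.LOCK_EX)
        try:
            handle.write(line.rstrip("\n") + "\n")
            handle.flush()
            os.fsync(handle.fileno())  # push the data to the server before unlocking
        finally:
            fcntl.lockf(handle, fcntl.LOCK_UN)
```

Because the lock is advisory, it only coordinates writers that also take the lock; a process that writes without locking is not blocked. Object storage such as S3 has no equivalent of this open, lock, append sequence.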

Use Cases

Amazon EFS is suitable for a variety of use cases that require shared, scalable storage:

- Web and Content Management – Ideal for websites, content management systems (CMS), and media hosting platforms that run on multiple servers. With EFS, all web servers can serve the same content files and upload new files to a common repository. For example, if you have a fleet of EC2 instances behind a load balancer serving a PHP application (like WordPress or Drupal), an EFS file system can store user uploads, plugins, and media so that each instance has access to the same files. This ensures consistency across a web farm without building a complex sync or proxy layer.

- Enterprise Applications – Many enterprise apps (ERP systems, home directories, internal tools) require a shared file system for storing reports, assets, or user data. EFS provides the scalable, durable storage needed for these applications with zero administration. Use cases include shared directories for business teams, central repositories for documents or code, and replacing traditional network attached storage (NAS) in the cloud. Because EFS supports standard file protocols and permissions, it can often be used by legacy applications with minimal changes.

- Database Backups & Disaster Recovery – EFS is a convenient target for database backup files, snapshots, and archives. For instance, you can dump backups from an RDS or Oracle database to an EFS mount, enabling multiple servers (or even on-prem systems) to access those backup files for restore or analysis. Its high durability and multi-AZ design make it safer than keeping backups on a single EC2 instance’s disk. Additionally, EFS with One Zone or Archive storage can serve as a cost-effective archive for periodic backups. In DR scenarios, an EFS file system can be replicated to another region, or mounted in read-only mode by analytics servers to verify data integrity. Because it’s accessible from anywhere with network connectivity (and proper permissions), EFS can facilitate disaster recovery workflows where you need data stored in a central, resilient location.

- Container Storage – EFS is a perfect solution for stateful containerized applications running in Amazon ECS, EKS (Kubernetes), or AWS Fargate. Containers are typically ephemeral, but with EFS, you can give them a persistent volume that lives beyond the container’s lifecycle. This is useful for workloads like content management, build systems, or any microservice that needs to retain files (user uploads, caches, etc.). In Kubernetes, for example, you might use an EFS-based PersistentVolume for shared storage between pods in a Deployment or for a single pod that might get rescheduled across nodes. EFS eliminates the need to tether container storage to a particular host (since any pod on any node can mount the shared file system). It’s also handy in cluster environments for shared configuration, libraries, or logging. Furthermore, EFS’s scalability means your storage can grow as your containerized application grows, without intervention.

- Analytics and Machine Learning – Big data analytics frameworks and ML training jobs often need access to large datasets across many compute nodes. EFS can serve as a centralized data source for HPC clusters, Apache Spark jobs, or ML training scripts running on multiple instances. All nodes can read from the same file set concurrently. While EFS may not reach the extreme IOPS of FSx for Lustre (which is tailored for HPC), it provides a simpler setup for moderate-scale analytics that need shared files. For example, a genome sequencing pipeline or a financial analytics batch job could use EFS to store input data and intermediate results accessible to all worker nodes.

Pricing and Costs

AWS EFS pricing is straightforward and consumption-based, with no upfront fees. You pay only for the storage space you use (measured in GB-months), plus any data transfer operations (when reading or writing data, or moving data between storage classes). There are no minimum commitments or provisioning charges for standard usage – the service is truly pay-as-you-go.

Prices vary slightly by region. As a reference, here are the storage costs in the US East (N. Virginia) region (as of this writing):

- Standard storage: Approximately $0.30 per GB-month. This is the cost for data stored in the Standard class (frequently accessed, multi-AZ). This rate is higher because it provides the highest performance and redundancy.

- Infrequent Access storage (IA): About $0.016 per GB-month in the same region. This lower rate applies to data in the EFS IA class (infrequently accessed data). You are charged this rate only for the portion of data that has transitioned to IA. (Note: When data in Standard is moved to IA, you also incur a one-time transition fee, noted below.)

- Archive storage: About $0.008 per GB-month. This ultra-low rate applies to data in the EFS Archive class (rarely accessed cold storage). It’s roughly 97% cheaper than Standard. However, keep in mind the 90-day minimum retention: if you delete or move data out of Archive sooner, you’ll still be billed as if it was stored for 90 days.

In addition to storage capacity, AWS charges for throughput and data access operations:

- Read and write operations: When data is read from or written to EFS (any storage class), a small fee applies per GB of data transferred. In N. Virginia, reads cost about $0.03 per GB and writes cost $0.06 per GB. These charges apply whether the data is in Standard, IA, or Archive. (They reflect the cost of servicing the data transfer and are in addition to the storage cost of the data.)

- Lifecycle transition (tiering) charges: When EFS automatically moves data between classes (Standard ↔ IA, or IA ↔ Archive), there is a nominal fee per GB transferred during the transition. This is roughly $0.01 per GB when moving data between Standard and IA, and $0.03 per GB when moving between IA and Archive. For example, if 100 GB of data transitions from Standard to IA in a month, you’ll incur about $1 in tiering fees. These fees encourage efficient use of lifecycle policies (to avoid constantly shuffling data). The one-time transition cost is usually far outweighed by the storage savings if data truly becomes infrequently accessed.

- Provisioned throughput (if used): If you opt for Provisioned Throughput mode, you will pay for the provisioned amount regardless of actual usage. The rate in US East is about $6 per 1 MB/s of throughput per month (so 10 MB/s provisioned would be ~$60/month). This charge is in addition to the storage and operation costs above. (Elastic throughput has no fixed throughput charge – you just pay the read/write per-GB fees as noted.)

Note: All the above prices are region-specific examples (US East N. Virginia). AWS pricing can vary by region, so costs in other regions (e.g., Europe or APAC) may differ slightly. Always check the latest pricing for your region on the official AWS pricing page. Also, AWS EFS offers a Free Tier for new accounts – typically 5 GB of EFS Standard storage is free for 12 months, which can be useful for testing or small projects.

To illustrate costs, consider a simple example: Suppose you store 100 GB of files on EFS Standard and 500 GB on EFS IA. In a given month, you perform 10 GB of reads and 5 GB of writes, and 20 GB of your data transitions from Standard to IA due to infrequent access. Your approximate charges would be:

- Storage: 100 GB × $0.30 + 500 GB × $0.016 = $38.00

- Data access: 10 GB reads × $0.03 + 5 GB writes × $0.06 = $0.60

- Transition: 20 GB Standard→IA × $0.01 = $0.20

Total = $38.80 for that month in storage and usage charges. This example shows how using IA (and transitioning data to it) drastically cuts the storage cost (500 GB at Standard would have been $150, versus $8 on IA + minor fees). By leveraging lifecycle management, EFS can be very cost-efficient for the right workloads.
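The arithmetic in this example can be reproduced with a short Python sketch. The rates are the US East example figures quoted in this guide, not live pricing, and `estimate_monthly_cost` is a hypothetical helper; `Decimal` is used so the cents come out exact.

```python
from decimal import Decimal
from typing import Dict, Union

Number = Union[int, float, str]

# Example US East (N. Virginia) rates quoted in this article, in USD.
RATES = {
    "standard_gb_month": Decimal("0.30"),
    "ia_gb_month": Decimal("0.016"),
    "read_per_gb": Decimal("0.03"),
    "write_per_gb": Decimal("0.06"),
    "standard_to_ia_per_gb": Decimal("0.01"),
}

CENT = Decimal("0.01")


def estimate_monthly_cost(
    standard_gb: Number, ia_gb: Number, read_gb: Number, write_gb: Number, tiered_gb: Number
) -> Dict[str, Decimal]:
    """Return a storage / access / transition / total breakdown rounded to cents."""
    dec = lambda value: Decimal(str(value))
    storage = dec(standard_gb) * RATES["standard_gb_month"] + dec(ia_gb) * RATES["ia_gb_month"]
    access = dec(read_gb) * RATES["read_per_gb"] + dec(write_gb) * RATES["write_per_gb"]
    transition = dec(tiered_gb) * RATES["standard_to_ia_per_gb"]
    return {
        "storage": storage.quantize(CENT),
        "access": access.quantize(CENT),
        "transition": transition.quantize(CENT),
        "total": (storage + access + transition).quantize(CENT),
    }


if __name__ == "__main__":
    # The scenario from the text: 100 GB Standard, 500 GB IA, 10 GB read, 5 GB written, 20 GB tiered.
    for item, amount in estimate_monthly_cost(100, 500, 10, 5, 20).items():
        print(f"{item}: ${amount}")
```

For the scenario in the text, the breakdown is $38.00 storage, $0.60 data access, and $0.20 transition, for a total of $38.80. Changing the inputs shows how quickly the bill moves when more data sits in Standard instead of IA.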

Comparison with Other AWS Storage Services

While Amazon EFS is a great solution for shared file storage, AWS offers other storage services optimized for different scenarios. It’s important to understand how EFS compares to these alternatives: Amazon EBS (block storage), Amazon S3 (object storage), and Amazon FSx (managed third-party file systems).

AWS EFS vs Amazon EBS

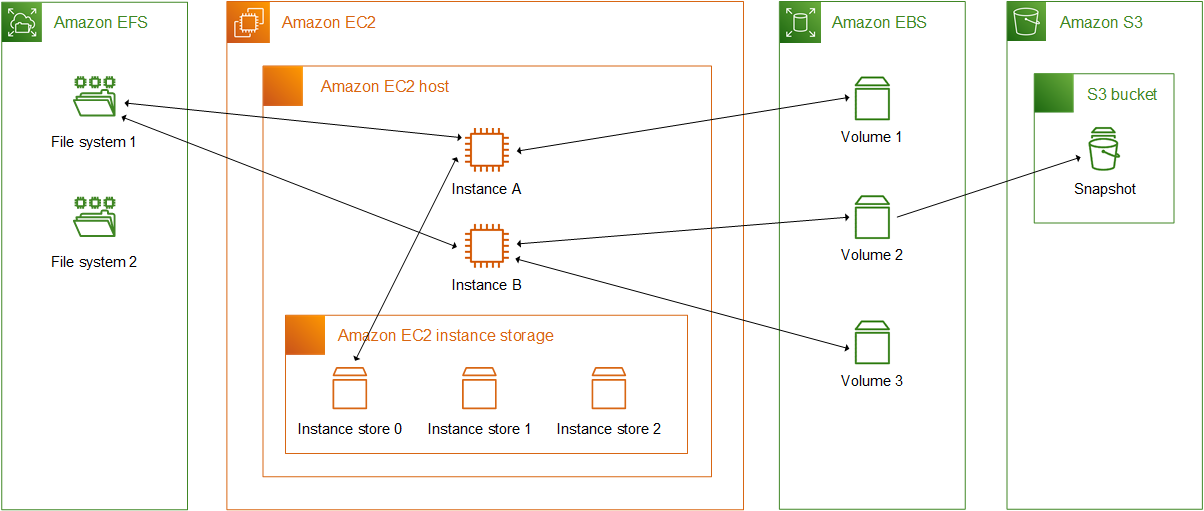

Amazon EFS and Amazon EBS serve different purposes in the storage hierarchy. Amazon EFS is a network file system that provides shared file storage accessible by multiple compute resources at the same time. It is ideal when you need a common data source that many EC2 instances, containers, or Lambda functions can all read/write concurrently. EFS storage grows and shrinks automatically, and it persists independently of any single compute instance.

Amazon EBS (Elastic Block Store), on the other hand, is direct-attached block storage for EC2 instances. An EBS volume is essentially like a virtual hard disk that you attach to one EC2 server at a time (Multi-Attach can connect certain Provisioned IOPS volumes to several instances in the same Availability Zone for clustered applications, but it’s not common for most workloads). EBS is optimized for single-instance performance – it offers very low latency and high IOPS to the attached EC2, making it ideal for use cases like databases, transactional workloads, or an EC2 instance’s primary filesystem (e.g., C: or /dev/xvda). Applications that require persistent, dedicated storage on one server (for example, a MySQL database on EC2) typically use EBS. EBS volumes are confined to a single Availability Zone (for durability beyond that AZ, you can take point-in-time snapshots of EBS volumes, which are stored in S3). In contrast, EFS is regional (spanning multiple AZs by default), so it’s inherently more fault-tolerant within a region.

To put it simply: EFS behaves like a NAS (network-attached storage) that multiple machines can share, whereas EBS is like a local disk attached to one machine. If you have a workload where multiple instances need to read/write the same files, EFS is the go-to choice (EBS cannot easily do this). But if you need high-performance storage for a single server (e.g., a high-end database on one EC2), EBS usually offers better performance characteristics. In fact, EBS can achieve very high IOPS (especially with Provisioned IOPS volumes) and lower latency per operation than EFS, because it’s not network-mounted in the same way and it doesn’t have to handle synchronization across instances.

Another difference is cost and throughput: EBS volumes charge by provisioned size (whether you use the space or not) and have performance tied to volume size/type, whereas EFS charges by usage and performance scales with usage (or provisioned throughput). For large sequential throughput workloads, EFS with Max I/O can handle high throughput, but EBS (with striping or larger io2 volumes) might reach higher IOPS for random access patterns on a single instance. Also, keep in mind EBS is usually cheaper per GB (for example, general-purpose SSD EBS gp3 is ~$0.08/GB-month in us-east-1, much lower than EFS’s $0.30 for Standard). However, that cost doesn’t include replication unless you snapshot it, whereas EFS’s $0.30 includes multi-AZ durability.

In summary, use Amazon EFS when you need a distributed, shared file system accessible by many instances or services. Use Amazon EBS when you need fast, block-level storage for one instance (like a disk drive in the cloud). Often, these services complement each other: for example, an EC2-based application might use an EBS volume for its database and an EFS file system for shared application data or logs.

AWS EFS vs Amazon S3

Amazon EFS and Amazon S3 are both scalable storage services, but they have very different interfaces and use cases. Amazon EFS is a file system: it provides a hierarchical directory structure, file-based access, and works with standard file system semantics (through NFS). Amazon S3 is an object storage service: it stores data as objects in buckets and is accessed via a RESTful API or SDK calls, not as a traditional file system.

Key differences:

- Access method: EFS is mounted like a network drive. Applications can open files, write to them, list directories, and so on, using normal file I/O calls. S3 requires using the API/SDK (e.g., PUT, GET requests) to upload/download whole objects; you can’t mount S3 in the same native way (though there are third-party gateways). S3 is excellent for storing and retrieving whole objects (like images, videos, backups, etc.) using HTTP requests, but it’s not meant for byte-level random writes or executing programs directly off of storage.

- Concurrency and sharing: EFS allows multiple concurrent clients to read and write the same files with locking if needed, suitable for sharing data in real time. S3 allows concurrent access too, but because it’s object storage, if two clients PUT to the same object simultaneously, the last write wins (or you implement object-versioning). There’s no concept of file locking or append writes in S3 – clients typically don’t simultaneously modify the same object; they might upload different objects or use S3 versioning for concurrency. So, S3 is not a file-sharing solution in the way EFS is. If you need a common filesystem for application servers, S3 alone is not a replacement (though apps can be rearchitected to use S3 for shared data in some cases, usually with significant changes).

- Performance characteristics: EFS offers low latency access (operations in milliseconds), especially for cached metadata and small I/O, whereas S3 operations have higher latency (tens of milliseconds to retrieve even the first byte of an object) because it’s an HTTP-based service. However, S3 can massively scale throughput for large streams of data and handle very high request rates; it’s designed for “infinitely” scalable storage. S3 originally offered only eventual consistency for overwrites and deletes, but since December 2020 it provides strong read-after-write consistency for all PUT and DELETE operations. S3 can serve thousands of parallel requests without performance degradation and is optimized for delivering objects over the internet. EFS, being in a VPC, is typically used by EC2 instances in the same Region and provides high throughput within that environment.

- Durability and availability: Both are highly durable. S3 is designed for 11 9’s durability as well and can even be configured for cross-region replication. In fact, S3’s standard storage replicates data across at least three AZs by default (similar to EFS Regional) and offers additional features like Versioning, which can protect against accidental deletes. EFS Regional also spans multiple AZs, so durability is comparable for stored data. S3 has an edge in global accessibility – you can easily share data via presigned URLs or cross-region replication, whereas EFS is tied to a VPC unless you do VPC peering or use AWS DataSync for copying data to another region. Availability-wise, S3 is an HTTP service accessible from anywhere (with permissions), and EFS is within a VPC (accessible to your instances or via VPN). For public-facing content (like images for a website), S3 (often with CloudFront CDN) is the go-to solution, not EFS.

- Use cases: Amazon S3 excels for object storage use cases: static website hosting, data lakes, backups, log archives, media storage, etc. It’s fantastic for write-once/read-many patterns or streaming large files. Many big data and analytics workflows use S3 as the source of truth due to its durability and cheap cost per GB. Amazon EFS is used for scenarios where applications need file system semantics and shared access: content management, shared home directories, lift-and-shift enterprise apps, etc. For example, a legacy application expecting a POSIX file system can be moved to AWS and use EFS, but moving it to use S3 would require significant refactoring.
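The file system semantics described in the list above (appending to a shared file, advisory locking, and in-place byte updates) can be sketched with ordinary Python file I/O. This is a minimal illustration, assuming a Linux client; the temporary directory stands in for a hypothetical EFS mount point such as `/mnt/efs`, and the helper names are made up for the example.

```python
import fcntl
import os
import tempfile


def append_with_lock(path: str, line: str) -> None:
    """Append one line to a shared file while holding an exclusive advisory lock."""
    with open(path, "a") as f:
        fcntl.lockf(f, fcntl.LOCK_EX)  # blocks until other lock holders release
        try:
            f.write(line + "\n")
            f.flush()
            os.fsync(f.fileno())  # push the data to storage before unlocking
        finally:
            fcntl.lockf(f, fcntl.LOCK_UN)


def overwrite_bytes(path: str, offset: int, data: bytes) -> None:
    """Update bytes in place at a given offset, without rewriting the whole file."""
    with open(path, "r+b") as f:
        f.seek(offset)
        f.write(data)


if __name__ == "__main__":
    shared_dir = tempfile.mkdtemp()  # stand-in for a hypothetical EFS mount
    log_path = os.path.join(shared_dir, "events.log")
    append_with_lock(log_path, "worker-1 started")
    append_with_lock(log_path, "worker-2 started")
    overwrite_bytes(log_path, 0, b"WORKER")
    with open(log_path) as f:
        print(f.read())
```

Neither helper has a direct equivalent in the classic S3 object model described above, where a client would typically download the object, modify it locally, and upload a full replacement.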

In practice, you might use both in a single solution: e.g., ingest data via an application to EFS (for immediate processing with file system semantics), then periodically copy results to S3 for long-term storage or distribution. Or use S3 as an origin store and have an EC2 fleet cache or process objects by pulling them from S3 and writing results to EFS for sharing with other components.
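The "process on EFS, then archive to S3" pattern could look roughly like the sketch below. It assumes results live under a directory on a mounted EFS file system and that the caller passes in an S3 client exposing `upload_file(Filename, Bucket, Key)`, as the boto3 S3 client does. The function name, directory, bucket, and prefix are hypothetical.

```python
import os
from typing import List, Protocol


class S3Uploader(Protocol):
    """Anything with a boto3-style upload_file method (e.g. boto3.client("s3"))."""

    def upload_file(self, Filename: str, Bucket: str, Key: str) -> None: ...


def archive_results(results_dir: str, bucket: str, prefix: str, s3: S3Uploader) -> List[str]:
    """Copy every file under results_dir to S3 and return the object keys written."""
    uploaded = []
    for root, _dirs, files in os.walk(results_dir):
        for name in sorted(files):
            local_path = os.path.join(root, name)
            relative = os.path.relpath(local_path, results_dir)
            key = f"{prefix}/{relative}".replace(os.sep, "/")
            s3.upload_file(local_path, bucket, key)
            uploaded.append(key)
    return uploaded


# Example call (requires boto3, AWS credentials, and an existing bucket):
#   import boto3
#   archive_results("/mnt/efs/results", "example-archive-bucket", "results", boto3.client("s3"))
```

Passing the client in as a parameter keeps the function easy to test with a stub. For large or recurring transfers, a managed service such as AWS DataSync may be a better fit than a hand-written loop.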

One more thing: cost. S3 is much cheaper per GB than EFS for large volumes of data (S3 Standard is ~$0.023/GB-month in us-east-1, vs EFS Standard $0.30). So for very large data sets that don’t need a file system, S3 is far more economical. S3 also has a whole suite of storage classes (like Intelligent-Tiering, Glacier, etc.) for cost optimization. However, S3 charges for requests (per 1,000 PUTs/GETs) and for data transfer out, whereas EFS has no per-request fee and instead bills for throughput (per GB of data read or written with Elastic Throughput, or for the throughput you provision). So, the cost model differs.
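The two cost models can be compared with a small calculation. The sketch below uses the per-GB storage prices quoted above. The request and transfer prices are passed in as parameters because they are not stated in this guide, and the values in the example run are placeholder assumptions to be replaced with figures from the current AWS pricing pages.

```python
# Storage prices quoted in the text (us-east-1, per GB-month).
S3_STANDARD_PER_GB = 0.023
EFS_STANDARD_PER_GB = 0.30


def s3_monthly_cost(stored_gb: float, put_requests: int, get_requests: int,
                    put_price_per_1k: float, get_price_per_1k: float) -> float:
    """S3: storage plus per-request charges (data transfer out is not modeled)."""
    storage = stored_gb * S3_STANDARD_PER_GB
    requests = (put_requests / 1000) * put_price_per_1k \
        + (get_requests / 1000) * get_price_per_1k
    return storage + requests


def efs_cost_with_transfer(stored_gb: float, transferred_gb: float,
                           price_per_gb_transferred: float) -> float:
    """EFS: storage plus a per-GB charge on data read or written."""
    return stored_gb * EFS_STANDARD_PER_GB + transferred_gb * price_per_gb_transferred


if __name__ == "__main__":
    # Hypothetical workload; the request and transfer prices are placeholders.
    s3 = s3_monthly_cost(10_000, 1_000_000, 10_000_000,
                         put_price_per_1k=0.005, get_price_per_1k=0.0004)
    efs = efs_cost_with_transfer(10_000, 500, price_per_gb_transferred=0.03)
    print(f"S3 Standard:  ${s3:,.2f}/month")
    print(f"EFS Standard: ${efs:,.2f}/month")
```

For a large data set, storage dominates both bills, which is why the per-GB gap matters more than request fees in most archive-style workloads.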

In summary: Use Amazon EFS when your application needs a shared file system interface or quick, concurrent file access by multiple clients. Use Amazon S3 for object storage, especially for static assets, backups, or streaming large objects to users, where a file system interface isn’t required. Each service is great in its domain: EFS for POSIX-compliant storage in a VPC, S3 for REST-based storage accessible everywhere.

AWS EFS vs Amazon FSx

Amazon FSx is a family of managed file system services that offer fully featured third-party file systems on AWS. Currently, Amazon FSx lets you choose among four file system types: FSx for Windows File Server, FSx for Lustre, FSx for NetApp ONTAP, and FSx for OpenZFS. These services are purpose-built for specific use cases and come with the features of their respective file system technologies. By contrast, Amazon EFS provides a general-purpose, Linux-compatible NFS file system that is simple and scalable, but not as feature-rich as some of the specialized file systems in FSx.

Let’s compare in simpler terms:

- Amazon EFS – Think of this as an elastic NFS share for Linux-based applications. It’s extremely easy to use (no complex settings), scalable, and serverless. It doesn’t support Windows SMB protocol or advanced filesystem features beyond NFS standards. Max throughput and IOPS scale with usage, but there are not many knobs to tune performance except the throughput mode and performance mode. EFS is great for most use cases where you need a basic shared file store in AWS, and you value simplicity and elasticity.

- Amazon FSx for Windows File Server – This service provides a fully managed Windows Server running the Windows-native file system (NTFS) and serving files over the SMB protocol. It’s the best choice if you have Windows applications or users that need a shared Windows file share, Active Directory integration, Windows ACLs/permissions, and features like user quotas, DFS namespaces, etc. EFS cannot do any of that – EFS is not accessible via SMB and doesn’t integrate with AD. So FSx for Windows is essentially “Windows NAS in the cloud.” For example, if you’re migrating a Windows-based file server or a SharePoint system’s file storage, you’d use FSx for Windows. It is single-AZ by default (with multi-AZ optional for HA) and requires specifying capacity and throughput levels. In contrast, EFS is more Linux-centric and simpler but lacks those Windows-specific capabilities.

- Amazon FSx for Lustre – This service provides a high-performance parallel file system (Lustre) often used in HPC (High Performance Computing) and ML training. Lustre is designed for speed, not durability – FSx for Lustre can synchronize with S3 for durability, but the file system itself is meant for fast scratch space, with throughput of hundreds of Gbps and millions of IOPS possible. If you have analytics or ML workloads that need very fast access to a shared dataset (e.g., processing large data sets in memory across a cluster of instances), FSx for Lustre might be ideal. Amazon EFS, while scalable, cannot reach the same single-stream throughput or IOPS as Lustre in these scenarios. However, FSx for Lustre lacks some features like multi-AZ durability (unless you back up to S3) and is more complex to manage (you provision size and throughput). EFS is simpler and multi-AZ, but slower for HPC-style workloads.

- Amazon FSx for NetApp ONTAP – This service brings the NetApp ONTAP filesystem (commonly used in enterprise data centers) to AWS. It supports both NFS and SMB, and includes advanced features like point-in-time snapshots, cloning, data deduplication, compression, tiering to S3, and multi-protocol access. Essentially, if you are a NetApp shop or need enterprise NAS features (and are willing to manage those features), FSx for ONTAP is the choice. It’s great for lift-and-shift of enterprise workloads that rely on NetApp’s ecosystem. Compared to EFS, FSx for ONTAP is more complex and offers many more features and tuning options. EFS has no concept of snapshots (outside AWS Backup) or compression, etc., whereas ONTAP does. However, with that power comes complexity – and cost (ONTAP on FSx is priced differently, with capacity and throughput charges). If you don’t need those features, EFS might be easier and cheaper for a simple use case.

- Amazon FSx for OpenZFS – Similar in concept to ONTAP, but using the open-source OpenZFS filesystem (popular in some on-prem NAS and storage systems). This provides features like snapshots, cloning, compression, and strong consistency using the ZFS technology. It’s again a specialized service for those who need ZFS features. If you specifically need something like consistent snapshots or to integrate with existing ZFS workflows, you might consider this. Otherwise, EFS suffices for general needs.

In summary, Amazon FSx services are chosen when you have a specific requirement: “I need a Windows file server”, “I need Lustre for HPC”, or “I need NetApp/ZFS features”. They give you the full capabilities of those file systems in a managed AWS service. Amazon EFS is the general, go-to solution for shared storage in AWS when you don’t have those specific needs. It’s simpler to operate (virtually zero maintenance or configuration) and automatically scalable, but it doesn’t offer the fine-grained control or some advanced features that FSx file systems might.

One way to decide: if your workload is already using NFS and just needs a scalable home in the cloud, EFS is likely the best fit. If your workload expects SMB (Windows) or needs something like a high-performance Lustre file system or NetApp’s data management, then FSx is the way to go for that scenario. Cost-wise, EFS Standard storage is a bit pricey per GB, but the FSx solutions can also be expensive especially when you provision high performance. So it really comes down to matching the storage to your application’s requirements.
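The decision logic from these comparisons can be summarized as a small rule-of-thumb function. This is only a sketch of the guidance in this guide: the `Workload` fields and the ordering of the checks are simplifying assumptions, and real decisions should also weigh cost, performance testing, and operational requirements.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a workload's storage needs."""
    needs_file_semantics: bool = True        # POSIX-style files and directories
    shared_across_instances: bool = False    # many clients read/write the same files
    protocol: str = "nfs"                    # "nfs" or "smb"
    needs_hpc_throughput: bool = False       # parallel file system for HPC/ML
    needs_enterprise_nas_features: bool = False  # snapshots, cloning, compression


def suggest_storage(w: Workload) -> str:
    """Return a first-pass suggestion based on the comparisons in this guide."""
    if not w.needs_file_semantics:
        return "Amazon S3"
    if w.needs_hpc_throughput:
        return "Amazon FSx for Lustre"
    if w.needs_enterprise_nas_features:
        if w.protocol == "smb":
            return "Amazon FSx for NetApp ONTAP"
        return "Amazon FSx for NetApp ONTAP or OpenZFS"
    if w.protocol == "smb":
        return "Amazon FSx for Windows File Server"
    if w.shared_across_instances:
        return "Amazon EFS"
    return "Amazon EBS"


if __name__ == "__main__":
    print(suggest_storage(Workload(shared_across_instances=True)))
    print(suggest_storage(Workload(protocol="smb")))
    print(suggest_storage(Workload(needs_file_semantics=False)))
```

The checks run from most specialized to most general, mirroring the idea that FSx is chosen for a specific requirement and EFS is the default for shared NFS storage.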

Conclusion

Amazon EFS is a fully managed, scalable, and secure file storage option for AWS workloads that need shared, POSIX-compliant access. It automatically adjusts capacity and integrates crucial features—such as multiple performance modes, lifecycle management, and robust security—to balance cost and performance. While EFS may not suit every use case (for instance, if dedicated block storage or object-based approaches are better), it excels in Linux-based environments that require straightforward, multi-instance file sharing. Thanks to its serverless architecture and easy setup, EFS helps build resilient, cost-efficient cloud applications without the complexity of provisioning or maintaining file servers.

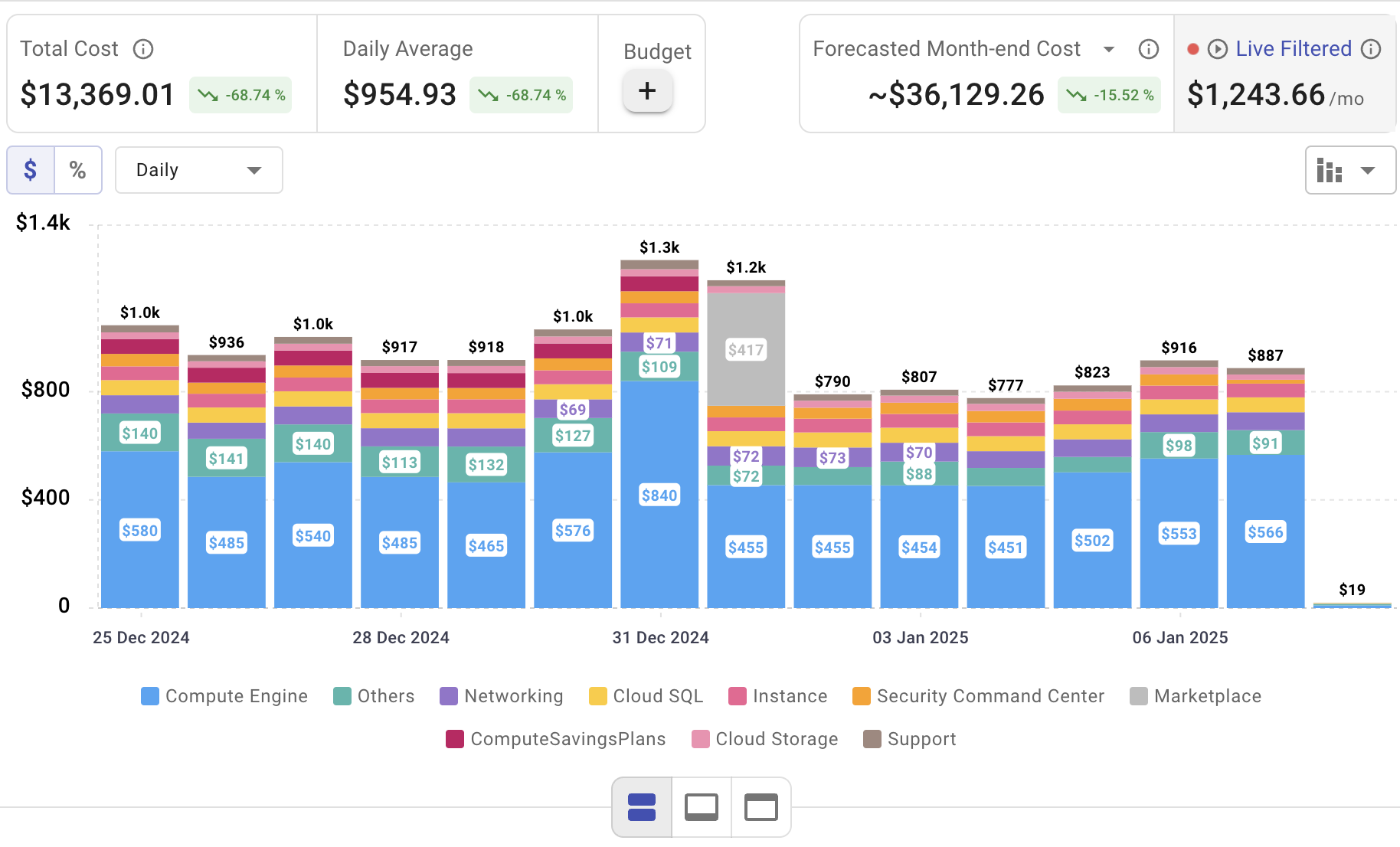

Optimize Cloud Storage with Cloudchipr

Choosing the right file storage solution is only part of the equation—tracking the cost and performance of your cloud file systems is equally critical. That’s where Cloudchipr steps in. It provides clear insights into how AWS EFS impacts your cloud spend, empowering you to optimize performance, scalability, and cost across your AWS environment.

Key Features of Cloudchipr

Automated Resource Management:

Easily identify and eliminate idle or underused resources with no-code automation workflows. This ensures you minimize unnecessary spending while keeping your cloud environment efficient.

Receive actionable, data-backed advice on the best instance sizes, storage setups, and compute resources. This enables you to achieve optimal performance without exceeding your budget.

Keep track of your Reserved Instances and Savings Plans to maximize their use.

Monitor real-time usage and performance metrics across AWS, Azure, and GCP. Quickly identify inefficiencies and make proactive adjustments, enhancing your infrastructure.

Take advantage of Cloudchipr’s on-demand, certified DevOps team that eliminates the hiring hassles and off-boarding worries. This service provides accelerated Day 1 setup through infrastructure as code, automated deployment pipelines, and robust monitoring. On Day 2, it ensures continuous operation with 24/7 support, proactive incident management, and tailored solutions to suit your organization’s unique needs. Integrating this service means you get the expertise needed to optimize not only your cloud costs but also your overall operational agility and resilience.

Experience the advantages of integrated multi-cloud management and proactive cost optimization by signing up for a 14-day free trial today, no hidden charges, no commitments.
