AWS OpenSearch Deep Dive: Architecture, Pricing, and Best Practices

What is AWS OpenSearch?

Amazon OpenSearch Service (often referred to as AWS OpenSearch) is a fully managed search and analytics engine service on AWS. It allows you to deploy and scale open-source OpenSearch clusters in the cloud without the operational overhead of managing the underlying infrastructure. AWS OpenSearch Service was formerly known as Amazon Elasticsearch Service. It was renamed in September 2021, when AWS added support for OpenSearch 1.0, the successor to Elasticsearch 7.10. In simple terms, OpenSearch is an Apache 2.0 licensed fork of the Elasticsearch project, created after Elastic's licensing change, and AWS OpenSearch Service makes this technology available as an easy-to-use cloud service. It's designed for a variety of use cases like interactive log analytics, real-time application monitoring, enterprise search, and more.

In this comprehensive guide, we'll explore the evolution of AWS OpenSearch from Elasticsearch, its architecture and key components, core features and tools, the available instance types and the new serverless architecture, a comparison of AWS OpenSearch vs. Elasticsearch, common use cases, pricing models (including AWS OpenSearch Serverless pricing), and best practices for deployment, security, scalability, and cost optimization. All information is based on official AWS OpenSearch documentation to ensure accuracy and currency.

Key Components and AWS OpenSearch Architecture

At its core, an AWS OpenSearch domain is an OpenSearch cluster – the terms are synonymous in AWS parlance. When you create an OpenSearch Service domain (whether via the console, CLI, or API), AWS provisions the necessary infrastructure (Amazon EC2 instances, storage, networking) to form an OpenSearch cluster according to your specifications. Each EC2 instance in the cluster runs as an OpenSearch node. You can choose how many nodes to allocate, what instance types they use, how much storage to attach, and other settings to tailor performance and capacity. AWS handles the heavy lifting of installing and configuring the OpenSearch software on these nodes, monitoring their health, and replacing nodes if they fail, so you don't have to manage the servers yourself.

Cluster Architecture

A typical OpenSearch cluster consists of data nodes, which store indexed data and handle search and indexing requests, and optionally dedicated master nodes (also called cluster manager nodes) that offload cluster coordination tasks such as managing the cluster state, elections, and metadata. In Amazon OpenSearch Service, dedicated master nodes do not hold data or serve queries; they only manage the cluster, which reduces the load on data nodes and improves stability. AWS recommends three dedicated master nodes for every production domain, and they become especially important as node counts and ingestion rates grow. You can also add UltraWarm nodes for cost-efficient storage of older, less-frequently accessed data (more on this in the features section).

Under the hood, each index in OpenSearch is partitioned into shards (primary shards and replicas). The service distributes these shards across the data nodes in the cluster, which allows it to scale horizontally and handle large data volumes. A replica is never placed on the same node as its primary (and if Multi-AZ is enabled, replicas are spread across Availability Zones) to ensure high availability – if one node fails, a replica on another node can serve the data. As a best practice, you should have at least one replica of each shard so that you have two copies of your data for fault tolerance.
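To make the shard arithmetic concrete, here is a minimal Python sketch that builds the settings body for a create-index request (`PUT /<index>`) and counts the shard copies the cluster has to place. `number_of_shards` and `number_of_replicas` are the standard OpenSearch index settings; the shard counts themselves are illustrative assumptions, not sizing advice.

```python
import json


def index_settings(primary_shards: int, replicas: int) -> dict:
    """Body for a create-index request (PUT /<index>) with explicit shard settings."""
    return {
        "settings": {
            "index": {
                "number_of_shards": primary_shards,
                "number_of_replicas": replicas,
            }
        }
    }


def total_shard_copies(primary_shards: int, replicas: int) -> int:
    """Each primary shard is stored once, plus `replicas` additional copies."""
    return primary_shards * (1 + replicas)


def min_nodes_to_allocate_all_replicas(replicas: int) -> int:
    """A replica never shares a node with another copy of the same shard,
    so every copy of a shard needs its own data node."""
    return 1 + replicas


# Illustrative values: 3 primaries with 1 replica each.
body = index_settings(primary_shards=3, replicas=1)
print(json.dumps(body))
print(total_shard_copies(3, 1))                # 6 shard copies in total
print(min_nodes_to_allocate_all_replicas(1))   # at least 2 data nodes
```

On a single data node the replicas in this example would stay unassigned (yellow cluster health), which is why the one-replica recommendation goes hand in hand with running at least two data nodes.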

Networking and Isolation

When setting up an OpenSearch domain, you also decide how it’s networked. You can deploy the domain within a VPC, meaning the cluster’s endpoints are only accessible within your AWS Virtual Private Cloud (and optionally via your corporate network if you use VPN/Direct Connect). This is the recommended approach for sensitive or production workloads, because it keeps traffic to and from the cluster off the public internet and adds an extra layer of isolation. Alternatively, you can make a domain publicly accessible (with proper access policies), which might be suitable for test or low-security scenarios, but generally VPC deployment is a best practice for security.
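As a minimal sketch of the VPC-plus-policy setup, the Python below assembles the `VPCOptions` and `AccessPolicies` arguments that boto3's `opensearch` client accepts in `create_domain`. Every account, Region, domain, role, subnet, and security-group identifier is a hypothetical placeholder, and the code only builds and prints the request rather than calling AWS.

```python
import json

# Hypothetical identifiers: replace with your own account, Region, domain, and network IDs.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
DOMAIN_NAME = "my-domain"
APP_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/app-role"


def domain_access_policy(principal_arn: str) -> str:
    """Resource-based policy (as a JSON string) that lets one IAM principal
    call the domain's HTTP APIs."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "es:ESHttp*",
                "Resource": f"arn:aws:es:{REGION}:{ACCOUNT_ID}:domain/{DOMAIN_NAME}/*",
            }
        ],
    }
    return json.dumps(policy)


# Networking and access settings for a VPC-only domain.
network_params = {
    "DomainName": DOMAIN_NAME,
    "VPCOptions": {
        "SubnetIds": ["subnet-0aaa1111", "subnet-0bbb2222"],  # hypothetical subnets
        "SecurityGroupIds": ["sg-0ccc3333"],                  # hypothetical security group
    },
    "AccessPolicies": domain_access_policy(APP_ROLE_ARN),
}

# With boto3 installed and credentials configured, these keyword arguments could be
# merged with cluster and storage settings and passed to
# boto3.client("opensearch").create_domain(**params).
print(json.dumps(network_params, indent=2))
```

Security groups decide which network paths can reach the endpoint, while the access policy decides which signed principals may call the APIs; a VPC domain still benefits from a narrow policy like this one.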

High Availability

AWS OpenSearch Service supports deployment across multiple AWS Availability Zones for high availability. In a Multi-AZ configuration, the service places nodes across two or three AZs in the Region, so if one AZ goes down or has issues, the cluster can continue operating from the other AZs. Multi-AZ, combined with shard replication, provides both data and node redundancy. The service also automatically replaces unhealthy nodes and rebalances shards as needed, which provides a self-healing capability. Additionally, the service takes automated snapshots of your domain and stores them in a service-managed Amazon S3 location: for OpenSearch and Elasticsearch 5.3 and later, snapshots are taken hourly and up to 336 of them are retained for 14 days. You can also take manual snapshots on demand into your own S3 bucket after registering it as a snapshot repository. Snapshots enable you to restore your cluster or recover data if needed (for example, to clone a cluster or roll back to a point in time).
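The Multi-AZ settings above map onto the `ClusterConfig` structure used by boto3's `create_domain` and `update_domain_config`. The sketch below builds one for three AZs with three dedicated master nodes. The instance types are illustrative defaults, and the "equal nodes per AZ" check is this sketch's own guard reflecting the advice to balance data nodes across zones, not a service API.

```python
from typing import Optional


def az_balance_error(data_nodes: int, az_count: int) -> Optional[str]:
    """Return a reason the layout is unbalanced, or None if it looks fine."""
    if az_count not in (2, 3):
        return "Multi-AZ domains span two or three Availability Zones"
    if data_nodes % az_count != 0:
        return f"use a multiple of {az_count} data nodes so each AZ holds the same number"
    return None


def multi_az_cluster_config(data_nodes: int, az_count: int = 3,
                            data_instance: str = "r6g.large.search",
                            master_instance: str = "m6g.large.search") -> dict:
    """ClusterConfig for a Multi-AZ domain with three dedicated master nodes."""
    error = az_balance_error(data_nodes, az_count)
    if error:
        raise ValueError(error)
    return {
        "InstanceType": data_instance,
        "InstanceCount": data_nodes,
        "DedicatedMasterEnabled": True,
        "DedicatedMasterType": master_instance,
        "DedicatedMasterCount": 3,
        "ZoneAwarenessEnabled": True,
        "ZoneAwarenessConfig": {"AvailabilityZoneCount": az_count},
    }


config = multi_az_cluster_config(data_nodes=6)
print(config["ZoneAwarenessConfig"])  # {'AvailabilityZoneCount': 3}
print(az_balance_error(4, 3))         # 4 nodes cannot be split evenly over 3 AZs
```

Pairing this layout with at least one replica per shard means losing an entire AZ still leaves a full copy of the data on the surviving nodes.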

Data Ingestion and Access

Architecturally, an OpenSearch cluster typically sits between data ingest sources and client applications that query the data. You have flexible options to ingest data into Amazon OpenSearch Service. Commonly, log data or streaming data is shipped to OpenSearch using services like Amazon Kinesis Data Firehose (now named Amazon Data Firehose), which can batch, transform, and load data into OpenSearch in near real time. You can also use AWS Database Migration Service (DMS) or custom AWS Lambda functions to move data from other data stores. Amazon OpenSearch Service has built-in integrations for Amazon S3 (to bulk import data or snapshots), Amazon Kinesis Data Streams and Firehose, and Amazon DynamoDB (via the DynamoDB streaming data connector), making it easier to load streaming or bulk data. For example, you might stream application logs from CloudWatch Logs or Firehose directly into OpenSearch for analysis.

On the consumption side, users and applications can search and analyze data in OpenSearch using its RESTful APIs and query DSL, SQL or Piped Processing Language (PPL) queries, or more user-friendly tools like OpenSearch Dashboards (the visualization UI). The cluster's endpoint is the URL that clients use to send search or indexing requests; appending /_dashboards to it opens OpenSearch Dashboards in a browser. AWS sets up the endpoint for you and provides a domain endpoint (e.g., https://search-mydomainID.region.es.amazonaws.com) that you use for all API calls.
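For custom ingestion (for example, from a Lambda function), documents are usually sent in batches through the `_bulk` API. The sketch below only builds the newline-delimited JSON body; the `app-logs` index and the log events are hypothetical. In practice the body would be POSTed to `<domain-endpoint>/_bulk` with `Content-Type: application/x-ndjson`, and on IAM-protected domains the request must also be SigV4-signed, which is not shown here.

```python
import json
from typing import Iterable


def bulk_body(index: str, docs: Iterable[dict]) -> str:
    """NDJSON body for the _bulk API: one action line, then one source line, per document."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))  # action and metadata
        lines.append(json.dumps(doc))                             # document source
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


# Hypothetical log events.
events = [
    {"@timestamp": "2024-05-01T12:00:00Z", "level": "ERROR", "message": "upstream timeout"},
    {"@timestamp": "2024-05-01T12:00:01Z", "level": "INFO", "message": "retry succeeded"},
]
payload = bulk_body("app-logs", events)
print(payload, end="")
```

Batching many documents per request is what services like Firehose do for you; a hand-rolled pipeline should likewise send moderately sized batches rather than one request per document.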

High-level data flow in Amazon OpenSearch Service: Data from various sources (logs, metrics, application events, etc.) is captured and ingested into an OpenSearch domain, where it is indexed and analyzed. Users can then query and visualize this data for different purposes – from application performance monitoring and security analytics (SIEM) to powering search features in applications. The managed service streamlines this pipeline by handling cluster provisioning, scaling, and maintenance, so you can focus on deriving insights from your data rather than running servers.
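To illustrate the query side of this flow, here are three roughly equivalent ways a client might count server errors per host: the query DSL, the SQL endpoint, and the PPL endpoint. The `weblogs` index and its numeric `status` and keyword `host` fields are hypothetical, and the sketch only prints the requests; in practice each body is sent to the domain endpoint over HTTPS (signed with SigV4 when IAM authentication is used).

```python
import json

# 1) Query DSL: POST /weblogs/_search
dsl_body = {
    "size": 0,  # return only aggregation results, no individual hits
    "query": {"range": {"status": {"gte": 500}}},
    "aggs": {"errors_by_host": {"terms": {"field": "host"}}},
}

# 2) SQL: POST /_plugins/_sql
sql_body = {"query": "SELECT host, COUNT(*) AS errors FROM weblogs WHERE status >= 500 GROUP BY host"}

# 3) Piped Processing Language: POST /_plugins/_ppl
ppl_body = {"query": "source=weblogs | where status >= 500 | stats count() by host"}

requests_to_send = [
    ("POST", "/weblogs/_search", dsl_body),
    ("POST", "/_plugins/_sql", sql_body),
    ("POST", "/_plugins/_ppl", ppl_body),
]
for method, path, body in requests_to_send:
    print(method, path, json.dumps(body))
```

One difference to keep in mind: a `terms` aggregation returns only the top 10 buckets unless you raise its `size`, whereas the SQL and PPL forms return all groups up to their own result limits.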

AWS OpenSearch Service’s architecture abstracts the underlying cluster components (instances, storage, networking) and provides a managed, scalable environment. Key components include domains (clusters), nodes (EC2 instances) with various roles (data, master, UltraWarm), indexes and shards for data organization, and a suite of integrated services for ingestion and monitoring. Now that we have an idea of the architecture, let’s look at the feature set that AWS OpenSearch offers out of the box.

Core Features and Tools in AWS OpenSearch Service

AWS OpenSearch Service inherits the rich feature set of Elasticsearch/OpenSearch and adds a range of capabilities and integrations that make it enterprise-ready. Here are some of the core features and tools provided:

- Scalability and Performance: OpenSearch Service can handle large-scale workloads by scaling out horizontally and up vertically. You can choose from numerous EC2 instance types offering different mixes of CPU, memory, and storage, including the latest AWS Graviton-powered instances for better price-performance. The service supports clusters with up to 1,000 data nodes and petabytes of attached storage, so you can index and search massive data sets. For storage, beyond the standard hot storage on EBS or instance store, OpenSearch Service offers cost-effective tiers like UltraWarm and cold storage for older, read-only data. UltraWarm nodes let you retain huge volumes of logs or historical data at a much lower cost by storing data on Amazon S3 and caching it on the warm nodes for queries – you can attach up to 25 PB of storage using UltraWarm. Cold storage goes even further: it detaches infrequently accessed indices and keeps them only in S3, so they consume no warm-node compute and you pay only for the S3 storage used (the domain still needs UltraWarm enabled). These tiered storage options enable a hot-warm-cold architecture, where recent data is kept on fast local storage for rapid queries while older data moves to cheaper storage (warm indices stay searchable; cold indices are reattached to UltraWarm when you need to query them).

- Search and Analytics Capabilities: As a search engine, OpenSearch provides full-text search with support for various text analyzers, keyword matching, and relevance scoring. You can perform complex queries, aggregations (faceted analytics, metrics like max/min/avg), and geospatial queries. Additionally, OpenSearch Service supports SQL and Piped Processing Language (PPL), which let you write SQL-like queries or Unix-pipe-style queries to retrieve and aggregate data. This is great for users who are more comfortable with SQL or want to integrate OpenSearch with BI tools. The service also supports custom packages and optional plugins so you can extend search functionality – for example, you might enable the phonetic analysis plugin to improve search relevance for names. OpenSearch also has built-in vector search through its k-NN plugin (available since the Open Distro era and significantly expanded in OpenSearch 2.x), which lets you perform semantic searches on vector embeddings – useful for AI/ML applications like similar document or image search and recommendations.

- OpenSearch Dashboards (Visualization and Analytics): Every OpenSearch domain can optionally have an OpenSearch Dashboards endpoint. OpenSearch Dashboards is the visualization UI (forked from Kibana 7.10.2) that lets you explore your data and build interactive dashboards, charts, and graphs. It's included with the service, and AWS manages the deployment of Dashboards alongside your cluster. You can use it for everything from writing queries and visualizing log data to setting up advanced analytics and anomaly detection. The service also supports multi-tenancy in OpenSearch Dashboards (part of fine-grained access control), meaning you can partition Dashboards so that different users or groups see different saved objects and data – useful for multi-user environments where each team should only access its own indices. Moreover, you can integrate Dashboards with authentication methods (Amazon Cognito, SAML single sign-on, or internal users with username/password) to control access. For legacy Elasticsearch versions, AWS OpenSearch Service provides Kibana, but OpenSearch Dashboards is the primary tool for OpenSearch domains (it is the open-source continuation of Kibana).

- Security and Access Control: Security is a major feature set of AWS OpenSearch. At the cluster level, you can control network access using VPC security groups (if in a VPC) and resource-based policies (which act like IAM policies attached to the domain) to decide who or what can call the OpenSearch APIs. For fine-grained data protection, OpenSearch Service offers Fine-Grained Access Control (FGAC), which lets you define permissions at the index, document, and field level. This means you could allow one role to read and write to a certain index, while another role can only read specific fields in that index. FGAC can be tied into your corporate directory via Amazon Cognito or SAML for single sign-on, or you can use internal users and roles. The service integrates with AWS Identity and Access Management (IAM) for authentication as well, so you can sign requests with AWS credentials and manage access through IAM policies. On the encryption side, all data stored in OpenSearch domains can be encrypted at rest using AWS KMS keys (AES-256). Data in transit is encrypted when clients connect over HTTPS, and you can require HTTPS for all traffic to the domain; you can also enable node-to-node encryption (TLS) for traffic between nodes within the cluster. Combined, these features ensure that your data is secure both on disk and on the wire. Audit logging is another feature: with FGAC enabled, you can turn on audit logs to capture detailed records of access to the cluster, which is important for compliance and security monitoring. The audit logs (as well as slow logs and error logs) can be sent to Amazon CloudWatch Logs for storage and analysis.

- Integration with AWS Ecosystem: A big advantage of AWS OpenSearch Service is how it connects with other AWS services. For monitoring, OpenSearch metrics (like cluster health, CPU/Memory usage, indexing rate, search latency, etc.) are published to Amazon CloudWatch, so you can set up dashboards and alarms on cluster performance. AWS CloudTrail integrates as well, logging all configuration API calls made to your OpenSearch domains (e.g., creating or modifying a domain), which is useful for auditing changes. You can set up Amazon SNS notifications to get alerts (for example, if your cluster is approaching a storage threshold or if an alarm triggers). For data ingestion, as mentioned, services like Amazon Kinesis Firehose, AWS IoT, CloudWatch Logs, or AWS Glue can be used to continuously feed data into OpenSearch. OpenSearch also has features like Cross-Cluster Search and Cross-Cluster Replication, which allow searching data across multiple domains or replicating data from one domain to another (even across regions) – these are particularly useful for federated search use cases or disaster recovery setups, and are fully supported by the service. In summary, AWS OpenSearch doesn’t exist in a vacuum – it’s well-integrated into AWS’s ecosystem, making it easier to incorporate into larger architectures for data analytics and search.

- Managed Administration Features: Because this is a managed service, tasks that would normally require manual effort are automated. The service automatically replaces failed nodes, patches the OpenSearch software for bug fixes and security updates, and performs blue/green deployments during version upgrades to avoid downtime. It provides a one-click (or single API call) way to scale out or scale up your cluster when needed. There's also an Auto-Tune feature that can optimize some internal parameters of OpenSearch to better handle your workload (for example, adjusting JVM memory settings, queue sizes, or cache sizes based on usage patterns). Additionally, Index State Management (ISM) is available – this is a policy-driven automation that can, for example, roll over indices when they grow too large or too old, move them to UltraWarm or cold storage, and eventually delete them. ISM is great for log management use cases where you want the system to curate indices over time (hot-warm-cold lifecycle management).
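As a sketch of the ISM lifecycle described above, the Python below builds a policy that keeps log indices hot for 7 days, migrates them to UltraWarm with the AWS-specific `warm_migration` action, and deletes them at 90 days. The index pattern, policy name, and thresholds are hypothetical, and rollover additionally assumes each index has its `plugins.index_state_management.rollover_alias` setting configured.

```python
import json

# Hypothetical index pattern and thresholds; tune them for your own retention needs.
ism_policy = {
    "policy": {
        "description": "Hot for 7 days, UltraWarm until day 90, then delete",
        "default_state": "hot",
        "states": [
            {
                "name": "hot",
                # Roll over when the index reaches 50 GB or is one day old.
                "actions": [{"rollover": {"min_size": "50gb", "min_index_age": "1d"}}],
                "transitions": [{"state_name": "warm", "conditions": {"min_index_age": "7d"}}],
            },
            {
                "name": "warm",
                "actions": [{"warm_migration": {}}],  # move the index to UltraWarm
                "transitions": [{"state_name": "delete", "conditions": {"min_index_age": "90d"}}],
            },
            {
                "name": "delete",
                "actions": [{"delete": {}}],
                "transitions": [],
            },
        ],
        # Attach the policy automatically to new indices matching this pattern.
        "ism_template": {"index_patterns": ["app-logs-*"], "priority": 100},
    }
}


def undefined_transition_targets(policy: dict) -> list:
    """Transition targets that do not name a state in the policy (should be empty)."""
    states = policy["policy"]["states"]
    names = {s["name"] for s in states}
    return [t["state_name"] for s in states for t in s["transitions"] if t["state_name"] not in names]


# Body for: PUT _plugins/_ism/policies/app-logs-lifecycle
print(json.dumps(ism_policy, indent=2))
print(undefined_transition_targets(ism_policy))  # []
```

The local check catches a common typo (a transition pointing at a state that does not exist) before the policy is submitted.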

In essence, Amazon OpenSearch Service provides not just the raw search engine, but an ecosystem of features and tools to store data efficiently, search and analyze it powerfully, secure it, and keep the cluster running smoothly. The capabilities described here are drawn from the official AWS OpenSearch documentation and reflect the service's focus on being production-ready for enterprise search and analytics needs.
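The fine-grained access control example from the security bullet (one role that writes to an index, another that reads only certain fields) might look like the role definitions below. The role names, index names, field list, and DLS filter are hypothetical; the bodies follow the Security plugin's role format and would each be sent as `PUT _plugins/_security/api/roles/<role-name>` on an FGAC-enabled domain.

```python
import json

# Two hypothetical roles illustrating fine-grained access control.
roles = {
    "app_logs_writer": {
        "cluster_permissions": ["cluster_composite_ops"],  # needed for bulk requests
        "index_permissions": [
            {"index_patterns": ["app-logs-*"], "allowed_actions": ["crud", "create_index"]}
        ],
    },
    "support_reader": {
        "index_permissions": [
            {
                "index_patterns": ["customers"],
                "allowed_actions": ["read"],
                # Field-level security: this role only sees the listed fields.
                "fls": ["customer_id", "country", "plan"],
                # Document-level security: only documents matching this query are visible.
                "dls": json.dumps({"term": {"region": "eu"}}),
            }
        ],
    },
}

for name, body in roles.items():
    print(f"PUT _plugins/_security/api/roles/{name}")
    print(json.dumps(body, indent=2))
```

Roles alone grant nothing; they take effect once mapped to users, backend roles, or IAM ARNs through role mappings.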

AWS OpenSearch Instance Types and Serverless Architecture

When deploying Amazon OpenSearch Service, you now have two primary deployment models to choose from: the traditional provisioned (instance-based) deployments and the newer Amazon OpenSearch Serverless option. Let’s break down both:

Provisioned Clusters and Instance Types

In the provisioned model, you specify the instance types and the number of instances for your OpenSearch domain. AWS offers a wide range of EC2-based instance types for OpenSearch, tailored to different needs: general-purpose (M family, such as m6g.large.search), compute-optimized (C family), memory-optimized (R family), and storage-optimized instances (I family, for high I/O), including the latest generations. AWS regularly updates the service with new instance types that offer better performance or value, and recommends the latest generation instances (like the Graviton2- and Graviton3-based families) for better price-performance. Graviton-powered instances (e.g., the c7g, m7g, and r7g families) can often deliver the same performance at a lower cost than previous-generation Intel/AMD-based instances. For example, instead of an older m5.large.elasticsearch, you might use m7g.large.search, which is more cost-effective. The instance types come in various sizes (large, xlarge, 2xlarge, etc.), scaling up CPU and RAM. On some instance families you can also choose between instance storage and EBS storage for your data nodes. Families like I3 and I4i/I4g have local NVMe SSDs for very high-speed storage (great for log analytics workloads), whereas others use attached EBS volumes (gp3 SSDs are recommended for their high throughput and lower cost). When using EBS, you can configure the volume size, and for gp3 volumes also the provisioned IOPS and throughput.
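To size EBS volumes, AWS's storage guidance multiplies source data by (1 + replicas) and by roughly 1.45 to cover indexing overhead and space reserved by the operating system and the service. The sketch below applies that rule and builds an `EBSOptions` dict in the shape boto3's `create_domain` expects. The 100 GiB of source data, three data nodes, and the IOPS/throughput defaults (EBS gp3's general baseline of 3,000 IOPS and 125 MiB/s) are illustrative assumptions.

```python
import math


def min_storage_gib(source_gib: float, replicas: int, overhead: float = 1.45) -> float:
    """Minimum total storage for primaries plus replicas, including overhead."""
    return source_gib * (1 + replicas) * overhead


def ebs_options(total_gib: float, data_nodes: int,
                iops: int = 3000, throughput_mibps: int = 125) -> dict:
    """EBSOptions for create_domain; VolumeSize is per data node, rounded up."""
    return {
        "EBSEnabled": True,
        "VolumeType": "gp3",
        "VolumeSize": math.ceil(total_gib / data_nodes),
        "Iops": iops,
        "Throughput": throughput_mibps,
    }


total = min_storage_gib(source_gib=100, replicas=1)  # about 290 GiB across the cluster
opts = ebs_options(total, data_nodes=3)
print(opts["VolumeSize"])  # 97 GiB per node
```

This is a floor, not a target: leaving headroom for growth and for shard relocation during node failures is part of normal capacity planning.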

Within a cluster, you might have multiple instance types serving different roles: for example, hot data nodes on powerful compute instances, and UltraWarm nodes on storage-heavy, lower-cost instances. UltraWarm nodes have their own instance types (such as ultrawarm1.medium.search and ultrawarm1.large.search) specifically optimized to cache warm data from S3. Additionally, small t2/t3 instances exist for dev/test or very low-throughput use cases (and are covered by the free tier, which provides 750 hours of t2.small or t3.small usage per month). However, AWS cautions against using these burstable instance types for production, as they can become unstable under sustained load. It's often better to start with at least r6g.large or similar for small production clusters.

The provisioned architecture gives you full control over capacity – you decide how many nodes and of what type. If you anticipate more load, you scale out by adding more nodes or scale up by choosing a larger instance type. You can do this scaling manually, or use AWS tools/scripts to adjust capacity in response to metrics. While OpenSearch Service doesn’t (at the time of writing) have an auto-scaling feature that automatically adds/removes nodes based on load, you can integrate CloudWatch alarms with Lambda or use the SDK to script scaling policies. The trade-off with the instance-based model is that you typically provision for peak capacity (to handle your highest query or indexing loads), which might mean during off-peak times your cluster has idle capacity. This is where the Serverless option comes in to offer more elasticity.
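A scripted scaling policy of the kind mentioned above could be a Lambda function triggered by a CloudWatch alarm on `CPUUtilization`. The sketch below shows only the decision logic plus the arguments for boto3's `update_domain_config`; the CPU thresholds, node limits, and step size (one node per AZ) are hypothetical choices, not AWS recommendations.

```python
def next_instance_count(current: int, avg_cpu_pct: float, az_count: int = 3,
                        scale_out_at: float = 80.0, scale_in_at: float = 30.0,
                        min_nodes: int = 3, max_nodes: int = 30) -> int:
    """Hypothetical step-scaling rule: add or remove one node per AZ at a time,
    staying within [min_nodes, max_nodes]."""
    if avg_cpu_pct >= scale_out_at:
        target = current + az_count
    elif avg_cpu_pct <= scale_in_at:
        target = current - az_count
    else:
        target = current
    return max(min_nodes, min(max_nodes, target))


def update_params(domain_name: str, instance_count: int) -> dict:
    """Keyword arguments for boto3.client("opensearch").update_domain_config(...)."""
    return {"DomainName": domain_name, "ClusterConfig": {"InstanceCount": instance_count}}


print(next_instance_count(6, avg_cpu_pct=85.0))  # 9: scale out by one node per AZ
print(next_instance_count(6, avg_cpu_pct=20.0))  # 3: scale in, never below min_nodes
print(next_instance_count(6, avg_cpu_pct=55.0))  # 6: no change
```

Resizing a domain takes time and moves shards, so a production version of this logic would add a cooldown period and verify free storage and JVM memory pressure before removing nodes.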

Amazon OpenSearch Serverless

Announced in late 2022 and made generally available in 2023, OpenSearch Serverless is a deployment option that completely eliminates the need to manage instances. Instead of defining instance types and counts, you simply create a Serverless collection and the service allocates resources on-demand to handle your workload. A collection in OpenSearch Serverless is analogous to a cluster or a group of indexes that serve a specific use case (for example, a “logs” collection or a “search” collection). Under the hood, the architecture of OpenSearch Serverless is quite different from the traditional cluster model. It uses a cloud-native, decoupled architecture where the indexing and search functions are separated and backed by a highly scalable storage tier on Amazon S3.

In OpenSearch Serverless, when you ingest data, it’s routed to indexing compute units that parse and index the documents and then write the index files to Amazon S3. When you run a search query, it’s handled by search compute units that fetch the relevant index shards from S3 (caching them locally for speed) and execute the query. This separation means each part can scale independently – if you have a spike in indexing (data incoming) but not in search queries, the service can scale out more indexing nodes without adding search nodes, and vice versa. It also provides better workload isolation (heavy ingestion won’t necessarily slow down search queries because they use different resources).

From a user perspective, using OpenSearch Serverless means you don't choose instance types or manage scaling. You simply select a collection type based on your use case, such as a time-series collection for log analytics or a search collection for text search workloads. (There's also a vector search collection type for semantic search and machine learning use cases.) OpenSearch Serverless will ensure that there's enough compute capacity to handle your data ingest and query volume. It even handles software updates automatically – currently, Serverless runs OpenSearch 2.x, and as new versions come out, it will auto-upgrade in a backwards-compatible manner, so you always benefit from the latest improvements without manual intervention.
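Creating a collection is mostly a matter of picking its type. The sketch below builds the arguments for boto3's `opensearchserverless` client: an encryption policy (which must cover a collection before it can be created) and a time-series collection with standby replicas disabled, as you might choose for a dev/test collection. The collection and policy names are hypothetical, and a network policy and a data access policy, omitted here, are also needed before clients can reach the collection.

```python
import json

COLLECTION = "app-logs"  # hypothetical collection name

# An encryption policy must match a collection before the collection can be created.
encryption_policy = {
    "Rules": [{"ResourceType": "collection", "Resource": [f"collection/{COLLECTION}"]}],
    "AWSOwnedKey": True,  # use an AWS owned key instead of a customer managed KMS key
}

security_policy_params = {
    "name": f"{COLLECTION}-enc",
    "type": "encryption",
    "policy": json.dumps(encryption_policy),
}

collection_params = {
    "name": COLLECTION,
    "type": "TIMESERIES",           # or "SEARCH" / "VECTORSEARCH"
    "standbyReplicas": "DISABLED",  # dev/test only: drops the standby capacity
}

# With boto3 and credentials configured:
#   aoss = boto3.client("opensearchserverless")
#   aoss.create_security_policy(**security_policy_params)
#   aoss.create_collection(**collection_params)
print(json.dumps(security_policy_params, indent=2))
print(json.dumps(collection_params, indent=2))
```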

There are some differences and limitations with Serverless to be aware of. For example, not every OpenSearch API or plugin is supported in serverless mode. You don't get direct access to the underlying servers (since there are none to manage), so certain cluster-level settings are abstracted away. Also, the manual snapshot APIs don't apply – data is continuously stored in S3 and redundancy is built in, but you can't, say, take a snapshot of a Serverless collection to restore elsewhere. However, the trade-off is that you get instant scalability and simplified operations. If you have unpredictable workloads or want to avoid provisioning for peak, Serverless can scale out and in as needed. AWS manages scaling by using units called OpenSearch Compute Units (OCUs) – each OCU represents a chunk of compute (6 GiB of RAM plus corresponding vCPU and some ephemeral disk) allocated to your collections. The first collection in an account gets a baseline of indexing and search capacity and, by default, a standby copy of each in another AZ for high availability. AWS originally set this baseline at 4 OCUs (2 for indexing, 2 for search); it has since introduced fractional 0.5-OCU units, lowering the high-availability baseline to 2 OCUs. For development or test collections, you can disable the redundant replicas, which cuts the baseline capacity in half. As load increases, OpenSearch Serverless adds OCUs to handle the throughput and scales them back down when load subsides. This elasticity is fully managed; you just pay for what you consume (we'll discuss pricing shortly).
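The baseline arithmetic can be expressed as a small model. This is a sketch under stated assumptions: each pool (indexing and search) starts at `min_ocu_per_node` OCUs, with 0.5 reflecting AWS's more recent fractional minimum, and enabling redundancy doubles each pool. Setting `min_ocu_per_node=1.0` reproduces the original four-OCU baseline. `pool_ocus` additionally models the account-level maximum capacity you can configure for indexing and search; its numbers are hypothetical.

```python
def baseline_ocus(redundancy: bool = True, min_ocu_per_node: float = 0.5) -> float:
    """Minimum OCUs for an account's first collection (indexing + search).

    Assumes one indexing node and one search node of `min_ocu_per_node` OCUs each,
    doubled when redundancy (a standby/replica copy in another AZ) is enabled.
    """
    copies = 2 if redundancy else 1
    indexing = min_ocu_per_node * copies
    search = min_ocu_per_node * copies
    return indexing + search


def pool_ocus(demand: float, floor: float, ceiling: float) -> float:
    """OCUs a pool runs at: never below its baseline, never above the configured maximum."""
    return min(max(demand, floor), ceiling)


print(baseline_ocus())                                # 2.0 with redundancy
print(baseline_ocus(redundancy=False))                # 1.0 for dev/test
print(baseline_ocus(min_ocu_per_node=1.0))            # 4.0, the original baseline
print(pool_ocus(demand=7.5, floor=1.0, ceiling=6.0))  # 6.0: capped by the maximum
```

Setting a maximum capacity keeps a runaway workload from scaling costs without bound, at the price of throttling once the cap is reached.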

AWS OpenSearch vs. Elasticsearch: What’s the Difference?

Because AWS OpenSearch began as a fork of Elasticsearch 7.10, the two engines remain broadly API-compatible up to that version. Elastic's 2024 decision to add the OSI-approved AGPLv3 as a licensing option makes Elasticsearch open source again and narrows the old legal divide, yet key practical differences persist.

Governance & Road-Map

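- OpenSearch – Community-driven under Apache 2.0 and, since 2024, governed by the OpenSearch Software Foundation (part of the Linux Foundation), with AWS as a major contributor. The project road-map centers on open governance, while AWS-native innovations (UltraWarm, cold storage, Serverless OCUs) are layered on top as features of the managed service.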

- Elasticsearch – Led by Elastic N.V. New capabilities (e.g., AI-assisted relevance) debut in Elasticsearch first; OpenSearch may later implement its own equivalents or offer alternatives.

Managed Cloud Experience

- Amazon OpenSearch Service / OpenSearch Serverless – Runs inside AWS with IAM, VPC, CloudWatch, KMS, tiered storage, and pay-as-you-go scaling.

- Elastic Cloud (or self-hosted) – Operates outside AWS's native management plane (even when Elastic Cloud runs on AWS infrastructure); bundles Elastic APM, Enterprise Search, Maps, and Elastic's own access controls.

Version Availability on AWS

- OpenSearch Service supports current OpenSearch releases plus legacy Elasticsearch versions 1.5 through 7.10, easing migration from older clusters.

- To run Elasticsearch 8.x+ on AWS, you need Elastic Cloud or to self-manage on EC2/Kubernetes.

Feature Set Snapshot

- OpenSearch – SQL/PPL, alerting, index state management, fine-grained security, anomaly detection, and vector search, all delivered as open-source plugins.

- Elasticsearch – Similar core plus Elastic-only extras (some ML jobs, enterprise connectors) that may remain proprietary.

Client & API Compatibility

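- OpenSearch retains REST compatibility with Elasticsearch 7.10, and Elastic's language clients up to 7.13 generally work against it; from 7.14 onward those clients add a product check that rejects non-Elastic servers, so OpenSearch publishes its own clients (such as opensearch-py and opensearch-java).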

- Elasticsearch clients track upstream 8.x; newer APIs may diverge from OpenSearch.

Key Takeaways

- Both engines are now available under OSI-approved open-source licenses, but their innovation paths differ.

- Managed experience is the main separator: AWS-native convenience vs. Elastic-centric tooling.

- Compatibility is largely seamless for workloads on Elasticsearch 7.10 or earlier; beyond that, confirm API parity.

- Choose Amazon OpenSearch Service for deep AWS integration and granular cost controls; select Elastic Cloud or self-hosted Elasticsearch when Elastic-specific features or multi-cloud freedom are top priority.

Use Cases Across Industries and Applications

AWS OpenSearch Service is a general-purpose search and analytics platform, so its use cases span across many industries and domains. Here are some of the most common use cases (and industry examples) for OpenSearch:

- Log Analytics and Operational Monitoring: This is perhaps the number one use case for OpenSearch Service. Organizations collect logs from servers, applications, and devices and use OpenSearch to index and analyze these logs in near real-time. With OpenSearch Dashboards, users can create dashboards to monitor system health, error rates, and performance metrics. For example, in IT operations (DevOps/SRE teams), OpenSearch is used to aggregate application logs, server logs, and container logs so that engineers can search across them to troubleshoot issues or monitor trends. The real-time application monitoring capability means you can detect anomalies or spikes (e.g., sudden increase in error logs) as they happen. Many managed service providers or cloud teams use OpenSearch for centralized logging (the ELK/OpenSearch stack is a staple in the observability toolkit). Industries like telecommunications and gaming also use it to analyze event logs and user telemetry streaming from devices or games to ensure everything is running smoothly.

- Security Analytics (SIEM): Related to log analytics, a specialized use case is security information and event management. OpenSearch can ingest logs from firewalls, intrusion detection systems, authentication systems, and more, and serve as a search and analytics engine to identify security threats. For example, a bank's security team might use OpenSearch to store and query logs of user login attempts, API calls, or network traffic to detect unusual patterns that could indicate breaches. OpenSearch's fast search and aggregation make it possible to sift through millions of events quickly. The alerting feature can be used to trigger notifications when certain conditions are met (e.g., too many failed logins from a single IP within an hour). AWS even provides integrations that let data from AWS CloudTrail, VPC Flow Logs, and Amazon GuardDuty findings be indexed into OpenSearch for custom analysis. In healthcare or the public sector, OpenSearch might be used to ensure compliance and security by tracking access to sensitive data systems in real time.

- Enterprise Search (Internal Document or Site Search): Another classic use case is powering search for websites or applications. OpenSearch is essentially a search engine, so it can index not only logs but any text or structured data. Companies often use Amazon OpenSearch Service to index their website content, product catalogs, or document repositories to provide fast and relevant search results to users. For example, an e-commerce retailer can use OpenSearch to build a product search feature that supports filtering, free-text search, suggestions, etc. Low-latency querying and text analysis capabilities help users find products even with typos or partial matches. Similarly, a media or publishing company might use OpenSearch to index articles and enable website visitors to search the news archives. Internally, enterprises use OpenSearch to index documents, intranet pages, or knowledge base articles so that employees can quickly search through internal data (this is sometimes called "enterprise search"). Government agencies might use it to index public records and data so they are searchable by staff and the public. OpenSearch's ability to scale to millions of documents and its support for multi-language text analysis make it suitable for these applications.

- Analytics on Clickstreams and Event Data: Many businesses track user interactions (clicks, page views, transactions) for analytics. OpenSearch can ingest these clickstream events (often via Kinesis or Kafka pipelines) and then analysts can use aggregations or even SQL to derive insights – for example, creating dashboards of website funnel conversion rates, or most viewed content in real-time. Because OpenSearch can do aggregations at scale, it’s useful for business intelligence on semi-structured data where using a data warehouse might be overkill or too slow for real-time needs. For instance, a streaming platform might log every “play” event and use OpenSearch to show how many people are watching a show at this moment and from which regions. Marketing teams in retail might analyze OpenSearch data to see how users navigate the site or which searches are popular (and perhaps yielding no results, indicating a gap in inventory). These are scenarios where OpenSearch acts as an analytics engine for event data, often complementing more static data warehouses.

- IoT and Time-Series Data Analytics: OpenSearch isn't a time-series database per se, but it is often used for time-stamped data analysis due to its log heritage. For IoT scenarios – think sensors emitting readings, smart devices sending status updates – OpenSearch can store the time-series logs and allow querying and visualization of metrics over time. For example, an energy company might use OpenSearch to monitor data from smart meters or IoT sensors on equipment; operators can search the telemetry for anomalies or visualize trends (like temperature or pressure readings over time). Its integration with OpenSearch Dashboards means those time series can be charted easily. In the automotive industry, connected cars produce a stream of events; OpenSearch could store those for analytics on driving patterns or vehicle health. While specialized time-series databases exist, OpenSearch is often chosen when teams want a unified solution for logs and metrics together, or when they need flexible search on top of the time-series data (e.g., search by device ID, then analyze a metric).

- Vector Search and ML-driven Applications: A more cutting-edge use case for OpenSearch (especially as of 2024/2025) is vector search for machine learning applications. With the rise of AI, companies generate vector embeddings for things like images, text, or products (using ML models) to enable semantic search – finding similar items not by exact text matching but by meaning or likeness in vector space. OpenSearch's k-NN plugin provides vector fields and k-NN search (expanded significantly in OpenSearch 2.x), which means OpenSearch can serve as a vector database in addition to text search. This opens up use cases like image similarity search (e.g., "find products that look similar to this one"), document recommendation, or using OpenSearch as the back-end for chatbot knowledge bases (where user queries are turned into vectors and matched against a vector index of documents). Industries like e-commerce use this for recommendation systems, and media for content discovery. While this is an emerging use case, it demonstrates OpenSearch's versatility beyond traditional text/keyword search. AWS OpenSearch Serverless even has a dedicated "vector search" collection type to make it easier to stand up such solutions.
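To make the vector search use case concrete, the sketch below builds a k-NN index mapping and query body, then ranks a few toy vectors by cosine similarity locally to show what "nearest" means. The `products` index, field names, three-dimensional vectors, and the Lucene/HNSW method settings (which assume OpenSearch 2.x) are illustrative assumptions; real embeddings have hundreds of dimensions, and the plugin uses approximate search rather than the brute-force ranking shown here.

```python
import json
import math

DIM = 3  # tiny dimension for illustration only

# Body for: PUT /products (hypothetical index and field names)
knn_index_body = {
    "settings": {"index": {"knn": True}},
    "mappings": {
        "properties": {
            "title": {"type": "text"},
            "embedding": {
                "type": "knn_vector",
                "dimension": DIM,
                "method": {"name": "hnsw", "engine": "lucene", "space_type": "cosinesimil"},
            },
        }
    },
}


def knn_query(vector: list, k: int) -> dict:
    """Body for: POST /products/_search, returning the k nearest neighbors."""
    return {"size": k, "query": {"knn": {"embedding": {"vector": vector, "k": k}}}}


def cosine(a: list, b: list) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Exact (brute-force) ranking of toy documents by cosine similarity.
docs = {
    "red sneaker": [1.0, 0.0, 0.0],
    "red boot": [0.9, 0.1, 0.0],
    "blue hat": [0.0, 0.0, 1.0],
}
query_vec = [1.0, 0.0, 0.0]
ranking = sorted(docs, key=lambda name: cosine(query_vec, docs[name]), reverse=True)
print(ranking)  # ['red sneaker', 'red boot', 'blue hat']
print(json.dumps(knn_query(query_vec, k=2)))
```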

These examples barely scratch the surface – virtually any scenario that requires searching through large amounts of text or log data, or performing real-time analytics on streaming data, can leverage AWS OpenSearch. The service’s flexibility (supporting structured and unstructured data, free-text queries and aggregations, etc.) means it’s used in domains from finance (e.g., analyzing trade logs or compliance monitoring) to healthcare (e.g., searching through medical records or genomics data), and from small startups needing a search engine for their app to large enterprises offloading big analytics workloads from relational databases. According to AWS, tens of thousands of customers use OpenSearch Service for use cases like “interactive log analytics, real-time application monitoring, website search, and more” – a testament to its broad applicability.

AWS OpenSearch Pricing

Understanding AWS OpenSearch pricing is key to optimizing cost. Amazon OpenSearch Service offers multiple pricing models and options, including on-demand instance pricing, reserved instances, tiered storage pricing, and the serverless usage-based model. Here’s a breakdown:

Core Billing Pillars

AWS groups charges into three broad buckets: compute, storage, and data transfer. You pay for the node or OCU hours you keep alive, the amount and tier of storage you provision, and any traffic that leaves the service. There are no minimum fees or long-term commitments unless you choose to buy them for savings.

Compute Options

Managed Clusters

- On-Demand instances – pay hourly for each data, dedicated master, or UltraWarm node you run. Good for bursty or trial workloads.

- Reserved Instances (RIs) – one- or three-year commitments that cut the hourly rate by up to ~50% (No-Upfront, Partial-Upfront, or All-Upfront plans). Ideal for steady 24/7 clusters.
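To compare RI payment options on equal terms, a common approach is to spread any upfront payment over the term and add the recurring hourly charge. The sketch below does that arithmetic; every price in it is hypothetical, so substitute the on-demand and RI rates for your instance type and Region from the pricing page.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def effective_hourly_rate(upfront: float, recurring_hourly: float, term_years: int) -> float:
    """Spread an RI's upfront payment over its term and add the recurring hourly charge."""
    return upfront / (term_years * HOURS_PER_YEAR) + recurring_hourly


def savings_percent(on_demand_hourly: float, effective_hourly: float) -> float:
    """Percentage saved versus on-demand for the same instance running 24/7."""
    return 100.0 * (1.0 - effective_hourly / on_demand_hourly)


if __name__ == "__main__":
    on_demand = 1.00  # hypothetical on-demand price per instance-hour
    plans = {
        # plan name: (upfront $, recurring $/hour, term in years); all hypothetical
        "1-yr no upfront": (0.0, 0.70, 1),
        "1-yr partial upfront": (3000.0, 0.34, 1),
        "3-yr all upfront": (13140.0, 0.0, 3),
    }
    for name, (upfront, hourly, years) in plans.items():
        rate = effective_hourly_rate(upfront, hourly, years)
        print(f"{name}: ${rate:.3f}/h effective, {savings_percent(on_demand, rate):.1f}% saved")
```

The comparison assumes the instance runs for the whole term; an RI bought for a cluster you later shrink keeps billing, which is why RIs suit steady 24/7 workloads.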

Serverless Collections

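- OpenSearch Compute Units (OCUs) – billed per OCU-hour with per-second granularity, drawn from separate indexing and search pools; each OCU provides 6 GiB of RAM plus corresponding vCPU. The first collection in an account starts with a 2-OCU baseline (indexing plus search) when redundant replicas are enabled for high availability; dev-test mode, with redundancy turned off, can run at half that.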

- Billing is purely usage-based: scale up on spikes, shrink when idle. S3 backs all data, so storage is a separate line item.
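A rough monthly estimate for a serverless deployment multiplies average OCUs by the OCU-hour rate and adds storage. The sketch below assumes a constant average OCU count for the month (real usage is metered as it scales) and uses $0.24 per OCU-hour and $0.024 per GB-month as assumed example rates; confirm both on the pricing page for your Region.

```python
HOURS_PER_MONTH = 730  # monthly-hours convention used by the AWS Pricing Calculator


def serverless_monthly_estimate(avg_indexing_ocus: float, avg_search_ocus: float,
                                ocu_hour_rate: float, stored_gb: float,
                                storage_gb_month_rate: float,
                                hours: float = HOURS_PER_MONTH) -> dict:
    """Rough monthly bill assuming constant average OCU usage (a simplification)."""
    compute = (avg_indexing_ocus + avg_search_ocus) * ocu_hour_rate * hours
    storage = stored_gb * storage_gb_month_rate
    return {"compute": compute, "storage": storage, "total": compute + storage}


if __name__ == "__main__":
    OCU_RATE = 0.24       # assumed $/OCU-hour; check current pricing
    STORAGE_RATE = 0.024  # assumed $/GB-month of S3-backed storage
    scenarios = {
        "baseline (1 indexing + 1 search OCU)": (1, 1),
        "busier month (3 indexing + 2 search OCUs)": (3, 2),
    }
    for name, (idx, srch) in scenarios.items():
        est = serverless_monthly_estimate(idx, srch, OCU_RATE, 100, STORAGE_RATE)
        print(f"{name}: ${est['total']:.2f} per month")
```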

Auxiliary Compute

- OpenSearch Ingestion – stream and transform pipelines priced per OCU (15 GiB RAM, 2 vCPUs) with per-minute granularity; a stopped pipeline incurs no OCU charges, so stopping idle pipelines brings their compute cost to $0.

- Direct Query – run SQL-style queries over external data sources; billed per OCU (8 GiB RAM, 2 vCPUs) only while queries run.

Storage Tiers

Hot storage

This is the fast lane where new data lands first: think dashboards that need sub-second queries. On OpenSearch Service in us-east-1, gp3 storage costs about $0.122 per GB-month versus $0.135 for older gp2 volumes (roughly 10% cheaper), while still delivering SSD-class performance and letting you provision IOPS or throughput separately. Check the pricing page for your Region’s current rates.

UltraWarm

When logs are a few weeks old and query frequency drops, you can shift them to UltraWarm. The data now lives in Amazon S3, while UltraWarm nodes cache frequently accessed data locally so queries remain interactive. Storage costs a flat $0.024 per GB-month, plus an hourly fee for the warm nodes that serve it: for example, ultrawarm1.medium.search is $0.238 per hour and addresses up to about 1.5 TiB, while the larger ultrawarm1.large.search is $2.68 per hour for up to ~20 TiB. AWS guidance says the math favors UltraWarm once you have roughly 3 TiB or more of “warm” data; below that, the node surcharge may outweigh the savings over hot EBS.
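The break-even point depends on how many warm nodes you run and how many replicas your hot indexes carry. A minimal sketch of that comparison follows, under stated simplifications: hot data is stored (1 + replicas) times on EBS, the S3-backed UltraWarm copy is billed once, and any hot-node compute you could retire after moving data is ignored. The hot EBS rate and the two-node warm tier in the demo are assumptions.

```python
HOURS_PER_MONTH = 730


def ultrawarm_breakeven_gb(hot_gb_month: float, warm_gb_month: float,
                           warm_node_hourly: float, warm_nodes: int,
                           hot_replicas: int = 1,
                           hours: float = HOURS_PER_MONTH) -> float:
    """GB of data above which UltraWarm (S3 storage + warm nodes) beats hot EBS storage.

    Assumptions: hot data is stored (1 + hot_replicas) times on EBS, the
    UltraWarm copy is billed once, and hot-node compute savings are ignored.
    """
    saving_per_gb = hot_gb_month * (1 + hot_replicas) - warm_gb_month
    if saving_per_gb <= 0:
        raise ValueError("hot storage must cost more per GB than UltraWarm storage")
    warm_node_cost = warm_node_hourly * warm_nodes * hours  # fixed monthly warm-node fee
    return warm_node_cost / saving_per_gb


if __name__ == "__main__":
    gb = ultrawarm_breakeven_gb(
        hot_gb_month=0.122,      # assumed gp3 rate; substitute your Region's price
        warm_gb_month=0.024,     # UltraWarm managed storage rate quoted in this section
        warm_node_hourly=0.238,  # ultrawarm1.medium.search rate quoted in this section
        warm_nodes=2,            # assumed size of the warm tier
    )
    print(f"Break-even at about {gb:,.0f} GB ({gb / 1024:.1f} TiB) of warm data")
```

Because the warm-node fee is fixed while the per-GB saving is constant, every GB beyond the break-even point is pure savings. That is why UltraWarm pays off for large log archives rather than small ones.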

Cold storage

For compliance archives you rarely touch, cold storage drops the standing compute cost altogether. Data stays in S3 at the same $0.024 per GB-month managed-storage rate, and cold indexes add no node-hour charges of their own. Cold storage does require UltraWarm to be enabled on the domain, and to search a cold index you first migrate it back to UltraWarm. Because compute is detached, cold storage brings total cost closest to native S3 pricing while keeping the data searchable on demand.

Putting it together. A common lifecycle is hot → UltraWarm → cold: keep the last 30 days on gp3 for blazing speed, roll anything older than a month into UltraWarm, and age the data into cold S3 after 90 days. Index State Management automates each hop, so you keep performance where you need it and pay rock-bottom rates for everything else.
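A minimal sketch of that lifecycle as an ISM policy body, modelled on the hot-warm-cold example in the AWS OpenSearch Service documentation; you would PUT it to _plugins/_ism/policies/<policy-name>. The warm_migration, cold_migration, and cold_delete actions are specific to AWS OpenSearch Service, the index pattern and timestamp field are hypothetical, and supported action options vary by version, so verify them against your domain before use.

```python
import json
from typing import Optional


def tiered_ism_policy(index_pattern: str, warm_after: str, cold_after: str,
                      delete_after: Optional[str] = None,
                      timestamp_field: str = "@timestamp") -> dict:
    """ISM policy body: hot -> UltraWarm -> cold, with an optional final delete."""
    cold_state = {
        "name": "cold",
        # move the index to cold storage; timestamp_field records the index's time range
        "actions": [{"cold_migration": {"timestamp_field": timestamp_field}}],
        "transitions": [],
    }
    states = [
        {
            "name": "hot",
            "actions": [],
            "transitions": [{"state_name": "warm", "conditions": {"min_index_age": warm_after}}],
        },
        {
            "name": "warm",
            "actions": [{"warm_migration": {}}],  # move the index to UltraWarm
            "transitions": [{"state_name": "cold", "conditions": {"min_index_age": cold_after}}],
        },
        cold_state,
    ]
    if delete_after is not None:
        cold_state["transitions"].append(
            {"state_name": "delete", "conditions": {"min_index_age": delete_after}}
        )
        # cold indexes are removed with cold_delete rather than the regular delete action
        states.append({"name": "delete", "actions": [{"cold_delete": {}}], "transitions": []})
    return {
        "policy": {
            "description": f"hot -> warm after {warm_after} -> cold after {cold_after}",
            "default_state": "hot",
            "states": states,
            # attach the policy automatically to new indexes that match the pattern
            "ism_template": [{"index_patterns": [index_pattern], "priority": 100}],
        }
    }


if __name__ == "__main__":
    body = tiered_ism_policy("logs-*", warm_after="30d", cold_after="90d")
    print(json.dumps(body, indent=2))
```

The min_index_age conditions count from index creation, so with daily rollover each day’s index moves through the tiers on its own schedule.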

Data Transfer & Misc.

- Within a multi-AZ domain – shard-replication traffic is free.

- Cross-service or internet egress – standard AWS data-transfer rates apply.

- Snapshots – automated snapshots are stored at no extra charge; manual snapshots live in your own S3 bucket and incur standard S3 rates, but the service does not charge for the snapshot operation itself.

- Extended support – running very old Elasticsearch versions incurs a small per-instance surcharge (shown in the pricing page’s “Extended support costs”).

Free Tier & Calculator

- Free Tier – 750 instance-hours of t2/t3.small plus 10 GB of EBS each month for one year—perfect for testing.

- AWS Pricing Calculator – build what-if estimates that mix clusters, serverless, ingestion, and storage in a single view.

You decide which compute model (instances, serverless OCUs, ingestion OCUs) and which storage tier (hot, UltraWarm, cold, S3) fit your workload. Add reserved pricing for steady traffic, lean on serverless for bursts, and tier older data to warm/cold to flatten the bill. Everything else—data transfer, snapshots, optional extended support—shows up only as you use it.

Best Practices for Deployment, Scalability, Security, and Cost Optimization

After understanding the components and features of AWS OpenSearch, it’s important to follow best practices to ensure a successful deployment that is scalable, secure, and cost-effective. Here we’ll outline key best practices (drawn from AWS’s official guidance) in several areas:

Deployment and Scalability Best Practices

- Plan Capacity and Shards Appropriately: Before launching a production cluster, estimate your data size, retention period, and query throughput needs; these drive your choice of instance types and counts. A common mistake is creating too many shards, since every shard consumes heap, CPU, and cluster-state overhead. Instead, right-size the shard count: AWS suggests roughly 10–30 GiB per shard for latency-sensitive search workloads and 30–50 GiB for write-heavy workloads such as logs, and warns against lots of tiny shards. Use at least one replica shard for high availability. For large or growing data sets, split the data into time-based indices (e.g., daily or monthly indices for logs). AWS’s shard strategy advice is to err on the side of fewer, larger shards rather than many small ones, and to use Index State Management to roll indices over automatically when they grow too large or old.

- Use Multiple AZs in Production: As mentioned, deploy your domain in a Multi-AZ configuration for resilience. This will distribute nodes (and thus shard replicas) across AZs so that an AZ outage won’t take down your cluster. True high availability for OpenSearch requires at least 2 (preferably 3) AZs with replica shards enabled. This is essentially a checkbox in AWS OpenSearch settings – make sure to enable it for any critical cluster.

- Enable Dedicated Master Nodes for Production Clusters: Dedicated master nodes manage cluster state and metadata separately from the data nodes, which prevents cluster instability under load. AWS recommends three dedicated master nodes for every production domain; three allow a quorum to survive the loss of one. They can be smaller instance types than your data nodes (e.g., m6g.large.search for a modest cluster) because they only handle metadata, but size them up as the number of data nodes and shards grows. They matter most when your cluster is sizable (e.g., more than ~10 data nodes) or when you anticipate heavy indexing rates.

- Use Auto-Tune and Monitor Cluster Health: AWS OpenSearch Service provides an Auto-Tune capability that adjusts certain internal settings (e.g., JVM heap and young-generation sizing, queue sizes, and cache sizes) based on observed usage. Keep it enabled to get those optimizations (it’s on by default for new domains on supported instance types). Additionally, monitor key metrics: ClusterStatus (should be Green), JVMMemoryPressure (consistently high values usually mean the nodes need more memory or carry too many shards), CPUUtilization, and indexing/search rates. Set up CloudWatch alarms on these metrics; for example, if CPU stays above 80% for a sustained period, you might scale out. Monitoring and alerting are the first line of defense for catching issues early.

- Test and Tune: Every use case is different, so AWS recommends deploying, testing, and tuning in a repeating cycle. For instance, load test your cluster with anticipated query patterns and indexing loads. This can reveal bottlenecks (perhaps you need faster disks, more memory, or a different shard count). Tuning before going live helps you avoid fire-fighting later. Also perform failure testing: for example, remove a node (or simulate an AZ failure) in a test environment to confirm your Multi-AZ setup behaves as expected.

- Leverage Caching and Performance Features: OpenSearch caches frequently used query and filter results at the shard and node level, and you can lengthen an index’s refresh interval to reduce indexing overhead when near-real-time visibility isn’t required. For search-heavy workloads, consider dedicated coordinator nodes: they hold no data but route requests and aggregate results, and AWS OpenSearch Service offers them as a domain-level option on supported OpenSearch versions. Also, if you have time-series data, consider using rollups or transforms to pre-aggregate older data and reduce query load.
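The shard-planning advice above can be turned into a quick calculation. AWS’s sizing guidance estimates primary shards as (source data + room to grow) × (1 + indexing overhead, about 10%) ÷ target shard size, with targets of roughly 10–30 GiB for search and 30–50 GiB for logs. The sketch below implements that rule of thumb; the 500 GiB workload and the 40 GiB target in the demo are hypothetical.

```python
import math


def primary_shard_count(source_gib: float, growth_gib: float = 0.0,
                        target_shard_gib: float = 30.0,
                        indexing_overhead: float = 0.10) -> int:
    """Primary shards needed so each lands near target_shard_gib (AWS rule of thumb)."""
    if target_shard_gib <= 0:
        raise ValueError("target_shard_gib must be positive")
    index_gib = (source_gib + growth_gib) * (1 + indexing_overhead)  # on-disk size estimate
    return max(1, math.ceil(index_gib / target_shard_gib))


def total_shard_count(primaries: int, replicas: int = 1) -> int:
    """Each replica adds a full copy of every primary shard."""
    return primaries * (1 + replicas)


if __name__ == "__main__":
    # Hypothetical log index: 500 GiB of source data, ~40 GiB per shard
    primaries = primary_shard_count(500, target_shard_gib=40)
    print(primaries, "primaries,", total_shard_count(primaries, replicas=1), "shards in total")
```

Rounding up keeps shards at or below the target size; for time-based indices, apply the calculation to one rollover period’s data rather than the whole retention window.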

Security Best Practices

- Enable Fine-Grained Access Control: If multiple applications or teams use the same cluster, use FGAC to isolate data access. For example, you might have logs from two systems in one cluster; FGAC can ensure Team A only sees Index A and Team B only sees Index B, even if they accidentally query the other’s index. This prevents privilege escalation and helps in multi-tenant scenarios. AWS recommends turning on FGAC for your domains (it’s a setting when you create the domain, after which you can set up users, roles, and permissions).

- Run Clusters in a VPC: As noted, always prefer deploying your OpenSearch domain into a VPC rather than using a public endpoint. This limits access to your private network, and you can then use security groups to allow only certain EC2 instances or IP ranges to connect. It vastly reduces the risk of exposure: there have been incidents of unsecured Elasticsearch clusters left open on the internet, and keeping the domain in a VPC avoids that risk. Within a VPC, traffic to the cluster stays within AWS’s network.

- Restrictive Access Policies (Least Privilege): Whether or not you use a VPC, you should attach an access policy to the domain that follows the principle of least privilege. An access policy is a JSON policy that specifies which AWS principals (IAM users/roles) or IP addresses can access the domain’s endpoints. Avoid using a wildcard * principal in these policies (which would allow anyone access), unless it’s absolutely necessary and you’ve layered other security like FGAC. A common best practice is to allow only an IAM role (e.g., an EC2 instance role or a specific user) full access, and require everyone to sign their requests with AWS credentials. This way, even if the endpoint were known, no one can do anything without proper IAM auth. If you use FGAC with an internal user database, you might temporarily open the policy (as AWS notes), but ideally, still restrict by source IP (e.g., allow only your corporate proxy or specific addresses).

- Encryption Everywhere: Enable encryption at rest for your domain; it’s a simple checkbox in the AWS OpenSearch console or a parameter in CloudFormation. It ensures that data on disk (EBS volumes, and S3 for UltraWarm) is encrypted with KMS keys, which matters for compliance regimes (HIPAA, SOC, etc.) and protects data if disks were ever accessed. Also enable node-to-node encryption, which uses TLS to encrypt the internal traffic between OpenSearch nodes; AWS OpenSearch Service supports this with a toggle. It’s especially valuable in Multi-AZ deployments, where data traverses AZ networks, and it is good practice even inside a VPC where traffic sniffing is unlikely. Both features may add minor overhead but are worth the security they provide.

- Audit and Monitor Security: Turn on audit logging in OpenSearch (available when FGAC is enabled). Audit logs record events such as user login attempts, data access, and permission changes. Send these logs to CloudWatch and set up alerts for suspicious patterns (for example, multiple failed login attempts could indicate someone trying to brute-force a password). Additionally, AWS Security Hub provides managed rules to continuously audit your OpenSearch Service configurations. For example, Security Hub can check whether your domains have encryption enabled, whether they’re in a VPC, and whether their policies are wide open, and flag any misconfigurations. Enabling these checks ensures you don’t accidentally leave a security gap.

- Network Isolation and Proxy: For an extra layer, some companies put OpenSearch domains in a private subnet with no internet access and reach them only through a bastion host or an intermediary service. While not required, this adds defense in depth. Also make sure every client connects over HTTPS; the domain’s Require HTTPS setting enforces this. If you expose an OpenSearch Dashboards endpoint, consider restricting it to certain IPs or putting an authentication proxy in front of it (if you aren’t using Cognito or SAML for authentication).
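Several of the security practices above map directly onto parameters of the OpenSearch Service CreateDomain API. The sketch below only assembles those parameters as a Python dict (it makes no AWS calls); you could pass them, together with cluster-sizing settings such as ClusterConfig and EBSOptions, to boto3.client("opensearch").create_domain(**settings). Every account ID, ARN, subnet, and name is a hypothetical placeholder. Note that FGAC requires encryption at rest, node-to-node encryption, and HTTPS, and audit logs still have to be switched on in the security plugin’s settings and need a CloudWatch Logs resource policy that lets the service write to the log group.

```python
import json
from typing import List

# Every identifier below is a hypothetical placeholder.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
DOMAIN = "my-logs-domain"
APP_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/my-app-role"
ADMIN_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/my-opensearch-admin"
AUDIT_LOG_GROUP_ARN = f"arn:aws:logs:{REGION}:{ACCOUNT_ID}:log-group:/aws/opensearch/{DOMAIN}/audit"


def least_privilege_access_policy(role_arn: str) -> str:
    """Domain access policy granting HTTP access to one IAM role, never to '*'."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": ["es:ESHttpGet", "es:ESHttpPost", "es:ESHttpPut"],
                "Resource": f"arn:aws:es:{REGION}:{ACCOUNT_ID}:domain/{DOMAIN}/*",
            }
        ],
    }
    return json.dumps(policy)  # the API expects the policy as a JSON string


def secure_domain_settings(subnet_ids: List[str], security_group_ids: List[str]) -> dict:
    """Security-related create_domain parameters; cluster sizing is omitted."""
    return {
        "DomainName": DOMAIN,
        "VPCOptions": {"SubnetIds": subnet_ids, "SecurityGroupIds": security_group_ids},
        "AccessPolicies": least_privilege_access_policy(APP_ROLE_ARN),
        "EncryptionAtRestOptions": {"Enabled": True},      # KMS encryption of data at rest
        "NodeToNodeEncryptionOptions": {"Enabled": True},  # TLS between nodes
        "DomainEndpointOptions": {
            "EnforceHTTPS": True,
            "TLSSecurityPolicy": "Policy-Min-TLS-1-2-2019-07",
        },
        "AdvancedSecurityOptions": {  # fine-grained access control (FGAC)
            "Enabled": True,
            "InternalUserDatabaseEnabled": False,
            "MasterUserOptions": {"MasterUserARN": ADMIN_ROLE_ARN},
        },
        "LogPublishingOptions": {
            "AUDIT_LOGS": {"CloudWatchLogsLogGroupArn": AUDIT_LOG_GROUP_ARN, "Enabled": True}
        },
    }


if __name__ == "__main__":
    settings = secure_domain_settings(["subnet-0example"], ["sg-0example"])
    print(json.dumps(settings, indent=2))
```

Keeping the admin (master user) role separate from the application role means the application’s credentials cannot change security settings, which is the least-privilege split described above.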

Cost Optimization Best Practices

- Choose Efficient Instance Types and Storage: As discussed, use the latest-gen instances and gp3 volumes for best performance per dollar. Graviton2/3 instances (e.g., R7g, C7g) can reduce costs by up to 30% for the same performance compared to older x86 instances. gp3 EBS volumes are cheaper and faster than gp2 (the older default) – you can migrate volumes to gp3 and provision IOPS as needed, which often allows smaller volumes to achieve higher throughput, saving cost.

- Use Tiered Storage for Logs: Do not keep everything in expensive hot storage if you don’t need instant access. A common pattern is to keep recent data (say the last 30 days) in hot storage, the next few months in UltraWarm, and anything older in cold storage (or even query it directly in S3 with something like Athena if you don’t need OpenSearch for it). By using UltraWarm and cold storage for time-series log data, you can save up to 90% on storage costs for that historical data. Set up Index State Management (ISM) policies to automate this: e.g., an index rolls over daily; when it’s 7 days old, the policy moves it to UltraWarm; when it’s 90 days old, it moves to cold (detached S3); after 1 year, it is deleted. This way your OpenSearch cluster’s expensive nodes only host data that needs fast access.

- Consider Serverless for Variable Workloads: If your usage is highly variable (e.g., spiky traffic or periodic batch indexing jobs), consider using OpenSearch Serverless or moving non-peak workloads to it. Serverless bills only for the capacity used. For example, you might shut off a dev/test cluster at night; with serverless, a collection scales down automatically when idle, though only to its minimum OCU baseline rather than to zero. Or you might run a multi-tenant platform where usage per tenant is unpredictable, and serverless allocates just what each collection needs. This can prevent over-provisioning and thus save cost. Always compare costs: AWS’s pricing examples and calculators can help you decide whether serverless pricing would be lower than an always-on cluster in your scenario.

- Use Reserved Instances for Steady Loads: For clusters that run 24/7 with predictable load, purchase Reserved Instances to cut costs. Even a 1-year commitment can yield about 30% savings over on-demand. If you have the budget and certainty, 3-year all-upfront RIs give the maximum savings (around 50%). It’s essentially prepaying for capacity at a discount. Keep an eye on AWS’s recommendations (AWS Cost Explorer shows RI recommendations for OpenSearch after a couple of weeks of usage data).

- Monitor and Right-Size Continuously: Cost optimization isn’t one-and-done. Regularly review your OpenSearch domains. Are they underutilized? If CPU and memory are low, you may be able to reduce instance size or count. Is the cluster struggling? Then adding one more node may cost less than living with throttled performance. Use CloudWatch metrics and AWS Budgets to track spending. Sometimes enabling a feature like Auto-Tune can improve efficiency by optimizing resource usage internally. Also, delete indices you don’t need: stale data not only incurs storage cost, it may also consume RAM and CPU (for instance, through field data caches).

- Optimize Data and Queries: Though a bit beyond pure cost, optimizing how you index data can save resources. For example, avoid storing unnecessary fields or duplicate data (keep raw logs in S3 as a backup, and index only the fields you need in OpenSearch if full-text search over every log line isn’t required). A smaller index means less storage and faster queries. Use index templates to disable indexing or norms on fields that don’t need them. Efficient queries (using filters, avoiding leading-wildcard queries where possible, etc.) reduce CPU usage, which can allow smaller clusters.
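A minimal sketch of the data and query optimizations above: a composable index template (which you would PUT to _index_template/<name>) that stops indexing unmapped fields, drops norms on a full-text field, and keeps a raw payload only in _source, plus a query that uses filter context. The field names and index pattern are hypothetical; adapt the mapping to what your searches and dashboards actually need.

```python
import json


def lean_log_index_template(index_pattern: str) -> dict:
    """Composable index template that skips index structures a log workload may not need."""
    return {
        "index_patterns": [index_pattern],
        "template": {
            "mappings": {
                "dynamic": False,  # unmapped fields stay in _source but are not indexed
                "properties": {
                    "@timestamp": {"type": "date"},
                    "service": {"type": "keyword"},
                    "level": {"type": "keyword"},
                    # full-text searchable, without norms (length factors used only for scoring)
                    "message": {"type": "text", "norms": False},
                    # kept only in _source: not searchable and not aggregatable
                    "raw_payload": {"type": "keyword", "index": False, "doc_values": False},
                },
            }
        },
    }


def filtered_error_search(service: str) -> dict:
    """Filter-context query: exact yes/no matches that skip relevance scoring."""
    return {
        "query": {
            "bool": {
                "filter": [
                    {"term": {"service": service}},
                    {"term": {"level": "ERROR"}},
                    {"range": {"@timestamp": {"gte": "now-1h"}}},
                ]
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(lean_log_index_template("app-logs-*"), indent=2))
    print(json.dumps(filtered_error_search("checkout"), indent=2))
```

Dropping norms is reasonable for log messages, where results are usually sorted by time rather than by relevance; keep them on fields where ranking quality matters.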

By adhering to these best practices for deployment, security, performance, and cost, you set yourself up for success with AWS OpenSearch Service. AWS’s operational best practices guide emphasizes continuous testing and tuning, as well as using the features of the service (like UltraWarm, snapshots, and fine-grained access control) to build a robust solution. Always refer to the official AWS OpenSearch documentation for the latest recommendations, as the service continues to evolve (for example, new features or instance types might introduce new best practices in the future).

Conclusion

AWS OpenSearch Service delivers the power of Elasticsearch’s open-source lineage with AWS’s managed convenience. Choose traditional clusters for fine-grained control or the new serverless model for automatic, pay-as-you-go scalability—both backed by a rich feature set, strong security, and deep AWS integrations. Add tiered storage (UltraWarm, cold S3) and reserved instances or OCU limits to keep costs in check, and follow best-practice basics—Multi-AZ, right-sized shards, encryption—to keep data safe and performance steady. From live log analytics to AI-driven vector search, OpenSearch scales from hobby projects (free tier) to petabyte workloads while staying open, flexible, and cost-effective. Dive into the docs, spin up a test domain, and let your data stay searchable, insightful, and under control—happy searching!

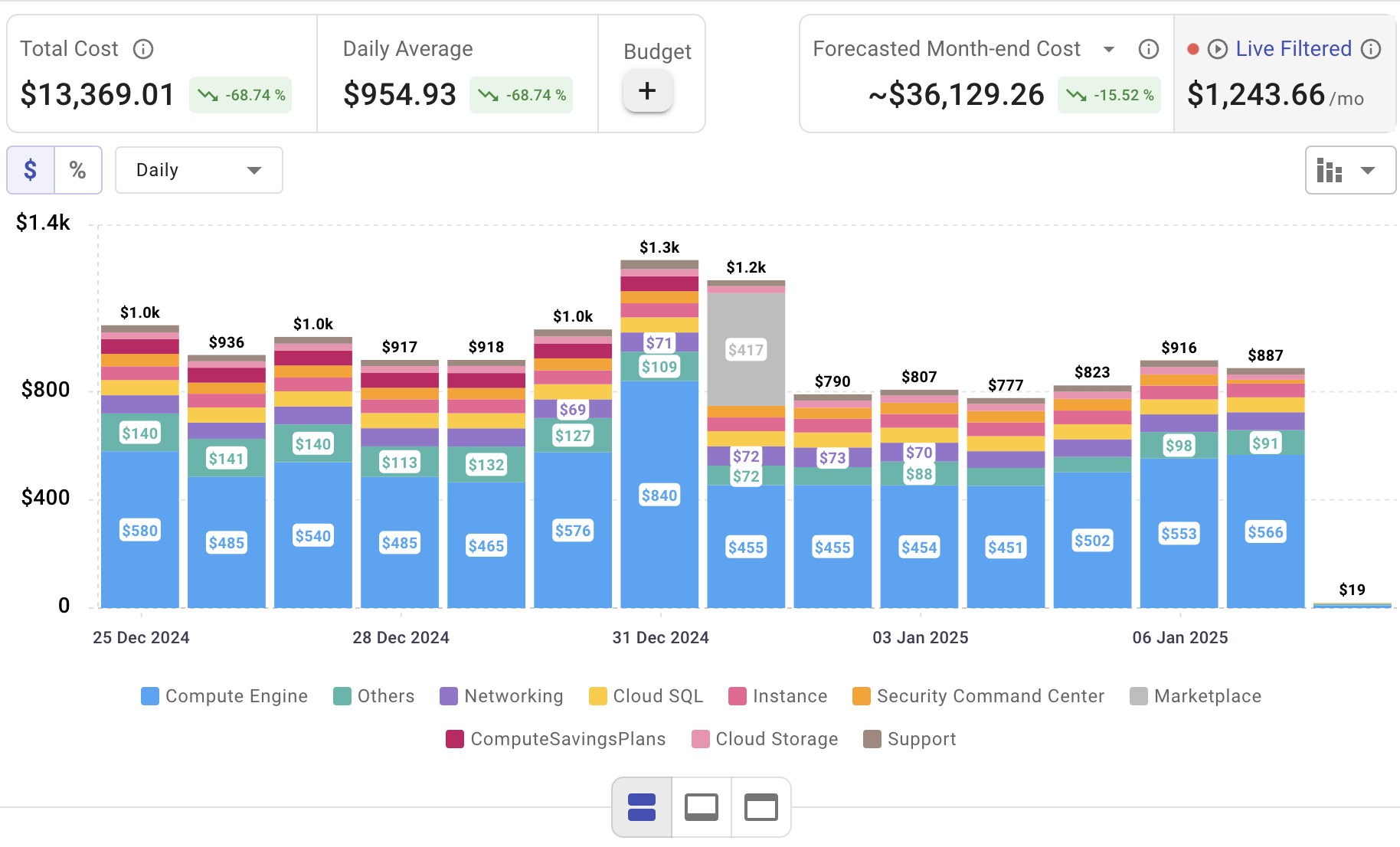

Monitor Your AWS OpenSearch Spend with Cloudchipr

Setting up AWS OpenSearch is only the beginning—actively managing cloud spend is vital to maintaining budget control. Cloudchipr offers an intuitive platform that delivers multi‑cloud cost visibility, helping you eliminate waste and optimize resources across AWS, Azure, and GCP.

Key Features of Cloudchipr

Automated Resource Management:

Easily identify and eliminate idle or underused resources with no-code automation workflows. This ensures you minimize unnecessary spending while keeping your cloud environment efficient.

Receive actionable, data-backed advice on the best instance sizes, storage setups, and compute resources. This enables you to achieve optimal performance without exceeding your budget.

Keep track of your Reserved Instances and Savings Plans to maximize their use.

Monitor real-time usage and performance metrics across AWS, Azure, and GCP. Quickly identify inefficiencies and make proactive adjustments, enhancing your infrastructure.

Take advantage of Cloudchipr’s on-demand, certified DevOps team that eliminates the hiring hassles and off-boarding worries. This service provides accelerated Day 1 setup through infrastructure as code, automated deployment pipelines, and robust monitoring. On Day 2, it ensures continuous operation with 24/7 support, proactive incident management, and tailored solutions to suit your organization’s unique needs. Integrating this service means you get the expertise needed to optimize not only your cloud costs but also your overall operational agility and resilience.

Experience the advantages of integrated multi-cloud management and proactive cost optimization by signing up for a 14-day free trial today, no hidden charges, no commitments.
