Google Cloud Run Pricing in 2025: A Comprehensive Guide


Introduction

Google Cloud Run is a popular serverless platform that lets you run containerized applications without worrying about managing servers. One of its biggest selling points is a transparent pay-as-you-go pricing model: you only pay for the resources you actually use, rounded up to the nearest 100 milliseconds. In this guide, we’ll break down Cloud Run pricing as of 2025 – including the free tier, how costs are calculated, new features like GPU support, and tips to optimize your spend. The goal is to provide a clear, authoritative overview of Cloud Run pricing (no fluff, just facts) so you can leverage this service confidently.

Pay-As-You-Go Pricing Model Basics

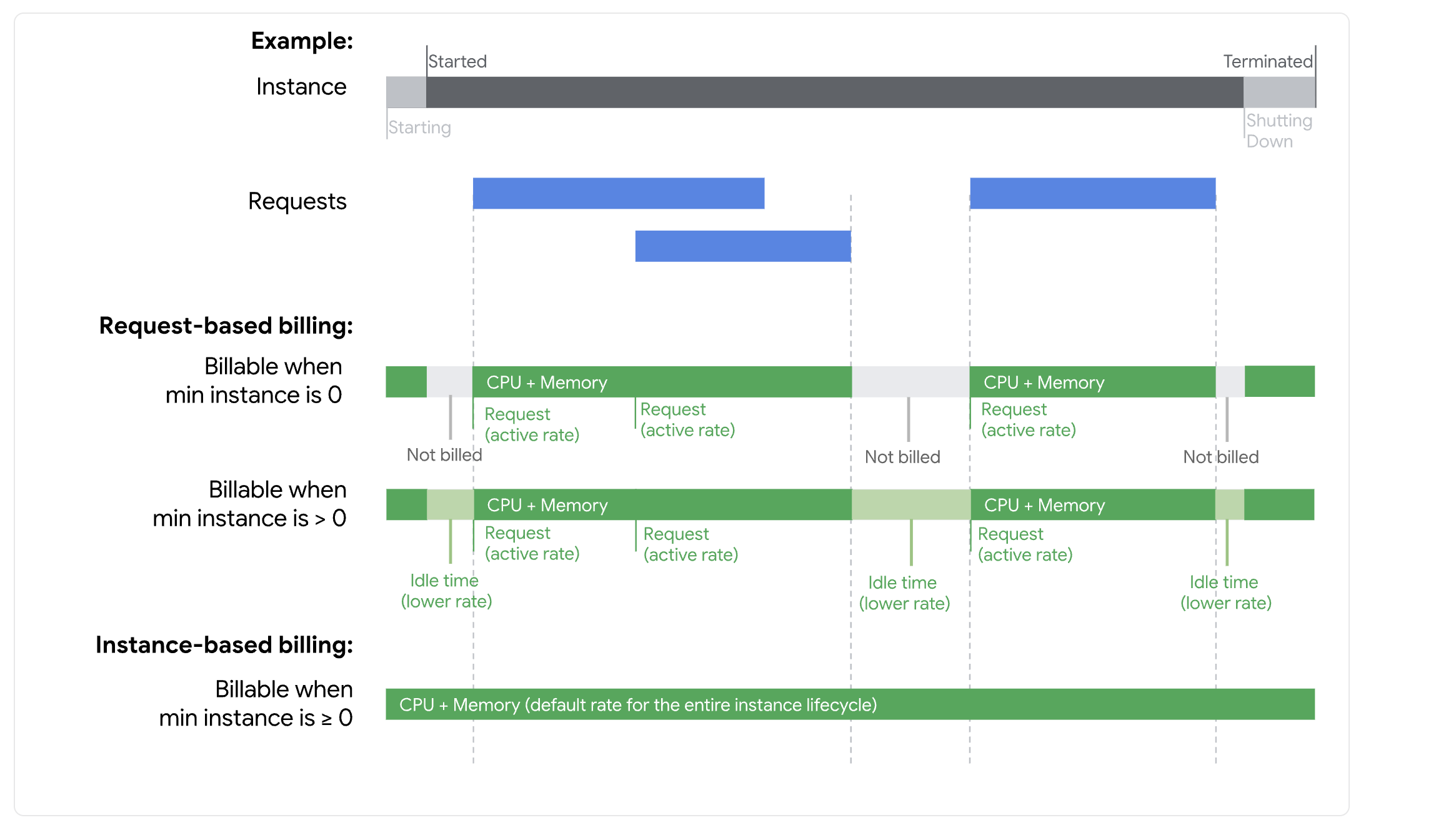

Cloud Run charges by CPU, memory, and requests. When your container receives a request, the platform measures how long the instance is actively handling requests and bills for the CPU and memory allocated to it during that time. Charges are accumulated per vCPU-second and GiB-second (RAM), plus a small fee per request beyond the free quota. By default (with request-based billing), Cloud Run containers incur costs only while processing requests, not when sitting idle. In practical terms, billable time starts when a request arrives and ends when the request completes, with billing paused in between requests. This granular model can be very cost-effective for spiky or low-traffic workloads, since you’re not paying for idle server time.

Resource unit costs are low, reflecting the fine-grained billing. For example, in a standard region the rate is on the order of $0.000024 per vCPU-second and $0.0000025 per GiB-second for active usage. (That’s about $0.086 per hour for a full vCPU, and under a penny per hour for 1 GiB of RAM!) Each million requests beyond the free tier costs $0.40 in most regions. These figures highlight how Cloud Run’s pricing scales with usage: a few seconds of compute here or there only amount to fractions of a cent.
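As a quick check of the hourly figures above, here is a minimal sketch that converts the per-second rates into per-hour costs. The constants are the illustrative Tier 1 rates quoted in this section, and the function name `hourly_cost` is made up for this example.

```python
# Illustrative Tier 1 rates quoted in the text (USD); actual rates vary by region.
VCPU_RATE_PER_SECOND = 0.000024
MEMORY_RATE_PER_GIB_SECOND = 0.0000025
SECONDS_PER_HOUR = 3600


def hourly_cost(vcpus: float, memory_gib: float) -> float:
    """Cost of one hour of continuous active time for the given allocation."""
    per_second = (
        vcpus * VCPU_RATE_PER_SECOND
        + memory_gib * MEMORY_RATE_PER_GIB_SECOND
    )
    return per_second * SECONDS_PER_HOUR


print(f"1 vCPU for one hour: ${hourly_cost(1, 0):.4f}")
print(f"1 GiB for one hour:  ${hourly_cost(0, 1):.4f}")
```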

Network egress (outbound data transfer) is charged separately at Google’s standard networking rates. Cloud Run uses the Premium Network tier, and you get a free 1 GB of egress per month within North America. Data sent between Cloud Run services in the same region is free, and traffic to Google services (like Cloud Storage or BigQuery in the same region) is also free. This means normal API calls or database queries within the same region won’t incur data charges. Keep in mind that any egress beyond the free 1 GB (or egress to other continents) will add to your bill at the usual Google Cloud networking prices.

Concurrency and instance scaling also play into costs. Cloud Run allows you to handle multiple requests simultaneously on one instance (configurable concurrency). If your service can safely process, say, 10 requests in parallel, you might need fewer instances – which can reduce overall cost since you’re utilizing the allocated CPU and memory more efficiently. All those concurrent requests share the same instance’s CPU/RAM allocation, so you’re not charged extra for additional concurrency; you’re still paying for the time/resources that instance consumes. In contrast, if you limit concurrency to 1, Cloud Run will spin up more instances to handle traffic, which could raise your total bill (each instance has its own CPU/RAM usage). Tuning concurrency is thus a knob to balance performance vs. cost.
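To make the concurrency trade-off concrete, the sketch below estimates billable instance time under an idealized assumption: requests overlap perfectly, so each instance is always serving exactly `concurrency` requests while it is active. Real traffic is lumpier, so treat the result as a lower bound. The function name is hypothetical.

```python
def estimated_instance_seconds(
    requests: int, seconds_per_request: float, concurrency: int
) -> float:
    """Idealized billable instance time, assuming perfect request overlap.

    An instance is billed once for the time it is active, regardless of how
    many requests it is serving, so summed request time is divided by the
    number of requests sharing each instance.
    """
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    return requests * seconds_per_request / concurrency


# Same traffic, two concurrency settings.
one_at_a_time = estimated_instance_seconds(1_000_000, 0.4, concurrency=1)
ten_at_a_time = estimated_instance_seconds(1_000_000, 0.4, concurrency=10)
print(f"concurrency 1:  {one_at_a_time:,.0f} instance-seconds")
print(f"concurrency 10: {ten_at_a_time:,.0f} instance-seconds")
```

This model ignores startup time, uneven traffic, and CPU contention, all of which push actual instance time above the idealized figure.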

Always-Free Usage: Cloud Run’s Generous Free Tier

One reason Cloud Run is attractive, especially for new projects and hobby deployments, is its generous free tier. As of 2025, the “always free” monthly allowances for Cloud Run (per billing account) are:

- CPU: First 180,000 vCPU-seconds per month free

- Memory: First 360,000 GiB-seconds per month free

- Requests: First 2 million requests per month free

- Networking: 1 GB egress per month free (North America)

To put that in perspective, 180,000 vCPU-seconds is 50 hours of CPU time. So if your container only runs a few minutes at a time, you can handle quite a lot of traffic without incurring CPU charges. Similarly, 360,000 GiB-seconds is 100 hours for a 1 GiB container (or 200 hours for a 512 MiB container, etc.). And 2 million requests cover a substantial amount of usage for a small service. In short, small or intermittent workloads can run entirely free on Cloud Run’s free tier.
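The hour conversions above are simple division. A small sketch, using the free-tier allowances listed in this section (the helper names are invented for illustration):

```python
FREE_VCPU_SECONDS = 180_000  # monthly free allowance from the list above
FREE_GIB_SECONDS = 360_000   # monthly free allowance from the list above


def free_cpu_hours(vcpus: float = 1.0) -> float:
    """Hours of active time covered by the free CPU allowance."""
    return FREE_VCPU_SECONDS / vcpus / 3600


def free_memory_hours(memory_gib: float) -> float:
    """Hours of active time covered by the free memory allowance."""
    return FREE_GIB_SECONDS / memory_gib / 3600


print(free_cpu_hours(1))       # 1 vCPU
print(free_memory_hours(1))    # 1 GiB container
print(free_memory_hours(0.5))  # 512 MiB container
```

Whichever allowance runs out first is the one that starts generating charges, so for a 1 vCPU / 512 MiB container the CPU allowance is the binding limit.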

For example, Google notes that a batch job running once per hour for 1 minute (with 1 vCPU and 512 MiB memory) would cost $0.00 per month because it stays within these free allowances (it would have cost about $0.45 without the free tier). That shows how far the free tier can take you. Even a constantly running background worker (1 vCPU, 0.5 GiB RAM, always on) was estimated at around $11.61/month after the free tier was applied, meaning the free tier knocked off about 30% of the cost in that always-on scenario.

Important: The free tier is applied per billing account across all your Cloud Run services, and it resets monthly. However, the free tier benefits only apply in specific regions. Currently, you must run your services in one of the designated free tier regions (us-central1, us-east1, or us-west1) to get the free usage credits. These are the U.S. regions where Google offers free tier resources. If you deploy Cloud Run services in other regions (Europe, Asia, etc.), they will still work, but your usage there won’t count towards the free tier allotment. Many users stick to a free-tier region for dev and testing to take advantage of the freebies, and then use other regions for production if needed.

Once you exceed the free tier limits, you simply pay the standard rates for additional usage. There’s no hard cap – overages just convert to billable usage. For instance, beyond 2 million requests in a month, each extra million requests costs $0.40 (in a Tier 1 region). Exceeding 180k CPU-seconds will incur the ~$0.000024 per-second charge, and so on.
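The overage rule can be sketched as "subtract the free allowance, never go below zero, multiply by the rate". The allowances and rates below are the illustrative Tier 1 figures from this guide, and `billable_cost` is a hypothetical helper, not part of any Google tooling.

```python
# Monthly free allowances and illustrative Tier 1 rates from this guide (USD).
FREE = {"vcpu_seconds": 180_000, "gib_seconds": 360_000, "requests": 2_000_000}
RATES = {
    "vcpu_seconds": 0.000024,
    "gib_seconds": 0.0000025,
    "requests": 0.40 / 1_000_000,  # $0.40 per million requests
}


def billable_cost(usage: dict) -> dict:
    """Cost per component after subtracting the free tier (never negative)."""
    costs = {}
    for component, used in usage.items():
        billable = max(0, used - FREE[component])
        costs[component] = billable * RATES[component]
    return costs


# Usage inside the free tier costs nothing; only the excess is billed.
print(billable_cost({"vcpu_seconds": 100_000, "requests": 3_000_000}))
```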

How Cloud Run Calculates Your Costs

Cloud Run’s billing can be thought of in a few components: compute time, memory time, request count, and network egress. Let’s break down an example to see how these add up in practice.

Suppose you have a web API running on Cloud Run in a Tier 1 region (like Iowa us-central1). You allocate 1 vCPU and 512 MiB memory to it, and you get about 10 million requests per month. Each request takes on average 400 milliseconds of processing. If concurrency is set high (allowing many requests per instance), the service might autoscale between 0 and, say, 20 instances at peak, but often reuses the same instances for multiple sequential requests.

- CPU & Memory: 10 million requests × 0.4 seconds each = 4 million seconds of request processing time. If every request ran alone on its own instance (concurrency of 1), that would be 4 million vCPU-seconds at 1 vCPU, and 0.5 GiB × 4 million seconds = 2 million GiB-seconds. These figures are an upper bound: an instance is billed once for the time it is active, however many requests it is serving at that moment, so with high concurrency the billable instance time is much lower. After applying the free tier (180k CPU-sec and 360k GiB-sec free), you’d pay for the remaining usage. The cost for that workload comes out to roughly $13.69 per month in Google’s example. Without the free tier it would have been about $18.91, so the free tier saved about 28%.

- Request Count: Out of 10 million requests, the first 2 million are free. The remaining 8 million would be billed. At $0.40 per million, that’s $3.20 in request charges. (This is included in the total above.) The request fee is relatively small, but it ensures even very CPU-light services contribute something if they have huge traffic.

- Network: If each request responds with, say, 50 KB of data, 10 million requests would send ~500 GB outbound. Subtract the 1 GB free, and about 499 GB would be billed at networking rates. For egress within North America on the Premium tier, that might be on the order of $0.12/GB (just an example; exact rates vary), meaning around $60 in egress charges. Notice that network costs can actually dominate in high-volume scenarios. Cloud Run itself doesn’t add any premium on top of Google’s base network pricing, but it’s something to monitor if your service transfers a lot of data. (Tip: if the caller and the service are in the same region, take advantage of that, since in-region calls are free of charge.)
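The request and network parts of this example can be reproduced with a few lines of arithmetic. This is a sketch under the same assumptions as the bullets above: $0.40 per million requests, 2 million free requests, 1 GB free egress, and an example egress rate of $0.12/GB, which is a placeholder rather than a quoted price. The function name is hypothetical.

```python
FREE_REQUESTS = 2_000_000
REQUEST_RATE_PER_MILLION = 0.40  # USD, Tier 1 figure from the text
FREE_EGRESS_GB = 1.0


def estimate_request_and_egress(
    requests: int, response_kb: float, egress_rate_per_gb: float = 0.12
) -> dict:
    """Request fee and egress cost for a month of traffic.

    egress_rate_per_gb defaults to the example rate used in the text;
    substitute the real rate for your region and destination.
    """
    billable_requests = max(0, requests - FREE_REQUESTS)
    request_fee = billable_requests / 1_000_000 * REQUEST_RATE_PER_MILLION

    egress_gb = requests * response_kb / 1_000_000  # decimal units: 1 GB = 1,000,000 KB
    billable_egress_gb = max(0.0, egress_gb - FREE_EGRESS_GB)

    return {
        "request_fee": request_fee,
        "egress_gb": egress_gb,
        "egress_cost": billable_egress_gb * egress_rate_per_gb,
    }


result = estimate_request_and_egress(requests=10_000_000, response_kb=50)
print(f"Request fee: ${result['request_fee']:.2f}")
print(f"Egress: {result['egress_gb']:.0f} GB, about ${result['egress_cost']:.2f}")
```

Running the same function with a smaller `response_kb` shows how quickly trimming payload size (compression, pagination) reduces the largest line item in this scenario.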

Cloud Run’s pricing page provides a helpful breakdown and even a calculator for different scenarios. Google also offers committed use discounts (CUDs) if you have predictable steady usage. For example, if you know you’ll need at least one instance running continuously, you can purchase a commitment for a certain amount of vCPU and memory usage, and get a discounted rate in return. Committed use discounts for Cloud Run are “flexible” across other compute services as well – meaning a commitment can cover usage on Cloud Run, GKE, or Compute Engine collectively. This is useful if your workloads span services.

New in 2025: Cloud Run GPU Pricing

Cloud Run now allows attaching NVIDIA GPUs to services (currently the NVIDIA L4), enabling serverless ML inference, video processing, and other accelerated workloads. GPU usage is billed per second separately from CPU and memory, and there is no free tier for GPUs.

- Supported GPUs and pricing: In Tier 1 regions, an NVIDIA L4 is around $0.0001867 per second without zonal redundancy, which is roughly $0.67 per hour. With zonal redundancy, pricing is higher (about $0.0002909 per second). Exact rates vary by region and configuration.

- Operational model: GPU-enabled Cloud Run services require CPU to remain allocated during instance lifetime, and you pay for the full lifetime per second while the instance is running. Autoscaling can still reduce instances to zero when traffic stops, helping control cost between bursts.

- When to use: GPUs on Cloud Run shine for bursty or unpredictable workloads where you want on-demand scaling and per-second billing, without managing VM lifecycles. For steady 24/7 loads, compare costs with alternatives like GKE or committed GPU VMs.

In short, Cloud Run GPUs bring flexible, on-demand acceleration with serverless ergonomics, while billing remains straightforward: per-second charges for GPU, plus the usual CPU, memory, and requests for the service.
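For a rough feel of GPU costs, the sketch below converts the per-second L4 rates quoted above into a charge for a given amount of instance lifetime. It covers the GPU component only; CPU, memory, and request charges come on top. The rates are the approximate Tier 1 figures from this section and the function name is made up for illustration.

```python
# Approximate Tier 1 NVIDIA L4 rates quoted in the text (USD per second).
L4_RATE_NO_ZONAL_REDUNDANCY = 0.0001867
L4_RATE_ZONAL_REDUNDANCY = 0.0002909


def gpu_cost(instance_seconds: float, zonal_redundancy: bool = False) -> float:
    """GPU-only charge for the given instance lifetime (CPU/memory billed separately)."""
    rate = L4_RATE_ZONAL_REDUNDANCY if zonal_redundancy else L4_RATE_NO_ZONAL_REDUNDANCY
    return instance_seconds * rate


# One hour of GPU time, and a bursty month with 40 hours of total instance lifetime.
print(f"1 hour:   ${gpu_cost(3600):.2f}")
print(f"40 hours: ${gpu_cost(40 * 3600):.2f}")
```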

Cloud Run vs. Cloud Functions: Pricing Differences

You might be wondering how Cloud Run pricing compares to Google Cloud Functions (another serverless offering). Google now offers “Cloud Run functions”, the current name for second-generation Cloud Functions, which run on Cloud Run’s infrastructure. The key differences in pricing mostly come down to the resources and flexibility:

- Cloud Run services (and 2nd-gen Cloud Functions) use the model we’ve described: CPU, memory, and requests, with configurable concurrency. You can choose CPU/memory allocations (up to 8 vCPUs per instance), run background tasks, etc. Pricing is granular in vCPU-seconds and GiB-seconds.

- 1st-gen Cloud Functions have a simpler model: your code runs with a fixed CPU (proportional to memory size) and charges are based on function execution time in 100ms intervals, plus invocations. The free tier for Cloud Functions is similar in spirit (e.g. 2 million invocations per month free) but with slightly different limits. For instance, Cloud Functions offers 5 GB egress free and up to 400,000 GB-seconds of memory free, a bit more generous on networking. However, Cloud Functions (gen1) cannot run longer than 9 minutes per invocation and doesn’t support concurrency or custom container images.

In practice, Cloud Run is often more cost-efficient for many workloads because you have more control. You could run a single Cloud Run container handling multiple requests concurrently, which might use resources more effectively than many single-threaded function invocations. Also, Cloud Run’s CPU/memory options let you fine-tune your container size (down to a fraction of a vCPU), which can lower cost for lightweight tasks. The pricing for Cloud Run and second-gen functions is unified (second-gen functions are essentially Cloud Run under the hood), whereas first-gen functions have their own pricing page and quotas.

One thing to note: when you deploy code from source either as a Cloud Run service or a Cloud Run function, Google will use Cloud Build and store the container image in Artifact Registry. Those services have their own free tiers (for example, Artifact Registry has some free storage and Cloud Build has free build minutes) but heavy use of them might incur small charges outside of Cloud Run itself. It’s usually negligible, but for completeness: if you do automated frequent deployments or store large images, monitor those costs too (they’re listed under Cloud Build/Artifact Registry billing, not Cloud Run). Event-driven Cloud Run services using Eventarc will similarly incur Eventarc charges beyond the Cloud Run cost if you go beyond its free usage.

Tips to Optimize GCP Cloud Run Pricing

Finally, here are a few tips drawn from experience to keep your GCP Cloud Run pricing under control without sacrificing performance:

- Start Small & Right-size: Begin with minimal CPU and memory and scale up only if needed. Cloud Run lets you set fractional CPU values (such as 0.25 or 0.5 vCPU) and adjust memory in small increments. Right-sizing your container’s resources means you’re not paying for horsepower you don’t use. For example, a simple API might run fine with 256 MiB RAM and 0.25 vCPU, which is significantly cheaper than a 1 vCPU / 1 GiB configuration.

- Leverage the Free Tier Regions: If latency permits, deploy non-critical or dev services in us-central1, us-east1, or us-west1 to soak up the free tier credits. Even for global user bases, those regions are reasonably accessible. You can also front your Cloud Run service with a global HTTPS Load Balancer (which has its own small costs) if needed to route traffic, while still keeping the service itself in a free tier region.

- Use Concurrency for I/O-bound Workloads: If your service spends time waiting (for example, calling external APIs or doing I/O), set a higher concurrency so one instance handles multiple requests in parallel. This maximizes the utility of each instance’s CPU time. Google’s pricing model does not charge extra per concurrent request, so you pay for the time the instance is active rather than for each request in flight. Just be careful with this on CPU-intensive workloads: if each request needs a full CPU, concurrency won’t make the CPU do more than 100% in total, and could just slow each request down.

- Avoid Unnecessary Always-On Instances: The min instances feature is tempting for keeping latency low, but each always-on instance costs money every hour. If you can tolerate a bit of startup delay (cold start), keep min instances at 0 so that you’re not charged when no one is using your service. For latency-sensitive production services, consider a single min instance (which as we saw might be around $10-$12/month after free tier for a small service) – it’s a reasonable trade-off for never having cold starts. But scale it down at nights or weekends if possible by adjusting min instances via automation or using separate services for off-peak.

- Monitor with budgets and alerts: Since Cloud Run is usage-based, a traffic spike or bug (e.g., a runaway loop) could generate unexpected costs. Set up Google Cloud budgets and alerts on your billing account so you get notified if costs exceed a threshold. This way you won’t be unpleasantly surprised at month’s end. Cloud Run also integrates with Cloud Monitoring, so you can track metrics like CPU-seconds and request counts in real time.

- Consider Committed Use Discounts for steady loads: If you know your service will consistently run a baseline level (e.g., you always keep 2 vCPU busy with background jobs), look into 1-year or 3-year committed use discounts. Google offers flexible CUDs that automatically apply to Cloud Run, GKE, or Compute Engine usage, saving you around 17% for 1-year or 30%+ for 3-year commitments on CPU/Mem. It’s like reserving capacity in exchange for a lower rate. Just ensure your usage will actually meet the commitment, or you’ll pay for unused capacity.

- Keep an eye on related services: As mentioned, Cloud Run integrates with other GCP services. If you trigger Cloud Run via Pub/Sub or Cloud Scheduler, those have minimal costs. If your Cloud Run writes to Cloud Storage or Databases, those will have their own billing. Always consider the end-to-end cost of a solution. The good news is many Google services have free tiers too, and Cloud Run’s pricing is straightforward enough that it usually isn’t the cost bottleneck unless you’re running at massive scale.
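The warning about unused commitments can be illustrated with a simplified model of a spend-based commitment: you pay the discounted price of the committed amount whether or not you use it, and anything above the commitment is billed at the normal rate. This is a sketch, not Google’s billing logic; the 17% discount is the 1-year figure mentioned in the tips above, and the function name is hypothetical.

```python
def monthly_cost_with_commitment(
    on_demand_usage: float, committed: float, discount: float
) -> float:
    """Simplified monthly bill under a spend-based commitment.

    on_demand_usage: what the month's usage would cost at list price (USD).
    committed: list-price value of the usage you committed to (USD).
    discount: fractional discount on the committed amount, e.g. 0.17.
    """
    commitment_fee = committed * (1 - discount)      # paid even if unused
    overage = max(0.0, on_demand_usage - committed)  # billed at list price
    return commitment_fee + overage


committed = 100.0
for usage in (50.0, 83.0, 100.0, 150.0):
    with_cud = monthly_cost_with_commitment(usage, committed, discount=0.17)
    print(f"usage ${usage:.0f}: on-demand ${usage:.2f}, with commitment ${with_cud:.2f}")
```

In this model the commitment only pays off once real usage exceeds `committed * (1 - discount)` worth of list-price spend, which is why it suits steady baselines rather than spiky workloads.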

Conclusion

Google Cloud Run pricing in 2025 remains one of the more developer-friendly and cost-effective models in cloud computing. It’s simple on the surface – pay only for what you use – yet it offers flexibility to fine-tune costs via concurrency, instance sizing, and now even GPU usage for advanced workloads. The always-free tier gives everyone a chance to try out Cloud Run (or run small apps indefinitely) at no cost, which lowers the barrier to entry significantly.

By understanding how Google bills Cloud Run and using the provided tools and free allowances, you can architect solutions that scale from zero to planet-scale without breaking the bank. Whether you’re a hobbyist on the free tier or a business running production services, Cloud Run’s pricing model rewards efficient usage and good design. Use that to your advantage, and you’ll find Cloud Run not only technically convenient but also economically sensible for your needs.
