Understanding Databricks Pricing on AWS, Azure, and Beyond


Introduction

Databricks pricing may feel daunting at first glance, yet it’s built on a single, cloud-agnostic framework. This guide unpacks that framework—covering Databricks Units (DBUs), cloud-specific rates, subscription tiers, and the pricing calculator—so you can see exactly where the dollars go. We’ll trace how charges accrue for clusters, SQL Warehouses, and the latest serverless options on AWS, Azure, and, where available, Google Cloud. By the end, you’ll be able to budget with confidence and squeeze maximum value from every DBU.

The Databricks Pricing Model: Pay-as-You-Go with DBUs

At the core of the Databricks pricing model is the Databricks Unit (DBU). A DBU is a normalized unit of processing capability, used to track usage across different instance types and workloads. Your workloads accrue DBUs as they run, and your bill is the number of DBUs consumed multiplied by a $/DBU rate. Billing is per second, so you pay only for what you use, with no long-term lock-in. In Databricks’ own words: “No up-front costs. Only pay for the compute resources you use at per-second granularity with simple pay-as-you-go pricing.” If you run a cluster for 10 minutes, you’re charged for 10 minutes of usage (metered in seconds), not a full hour.
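To make per-second billing concrete, here is a minimal Python sketch that prorates an hourly DBU rating over the seconds a cluster actually runs, and contrasts it with a hypothetical round-up-to-the-hour scheme. The 6 DBU/hour cluster rating and the $0.55/DBU rate are illustrative assumptions, not quoted prices.

```python
import math

def per_second_fee(dbu_per_hour: float, seconds: int, usd_per_dbu: float) -> float:
    """Platform fee when usage is metered per second (pay-as-you-go)."""
    dbus = dbu_per_hour * seconds / 3600  # prorate the hourly DBU rating
    return dbus * usd_per_dbu

def rounded_hour_fee(dbu_per_hour: float, seconds: int, usd_per_dbu: float) -> float:
    """Hypothetical contrast: the same run billed in whole hours, rounded up."""
    hours = math.ceil(seconds / 3600)
    return dbu_per_hour * hours * usd_per_dbu

# A 10-minute run on an assumed 6 DBU/hour cluster at an assumed $0.55/DBU.
run_seconds = 10 * 60
print(f"per-second: ${per_second_fee(6, run_seconds, 0.55):.2f}")    # $0.55
print(f"rounded-up: ${rounded_hour_fee(6, run_seconds, 0.55):.2f}")  # $3.30
```

For short or bursty runs the difference is large; for runs of whole hours the two schemes coincide.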

How are DBUs calculated?

The number of DBUs a workload consumes depends on the resources used. Larger or more powerful VMs consume more DBUs per hour than smaller ones, because a DBU represents a fixed amount of processing capability. According to Databricks, “the number of DBUs a workload consumes is driven by processing metrics, which may include the compute resources used and the amount of data processed.” In simple terms, a beefy 16-core cluster burns more DBUs per minute than a modest 4-core cluster; whether it costs more in total depends on how much sooner it finishes the job. This normalized unit makes it easier to compare costs across different instance types and services.
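The trade-off between cluster size and runtime fits in a few lines of Python. The per-node DBU ratings below are hypothetical (real ratings depend on cloud, instance family, and runtime), and the example assumes the job scales perfectly with cores, which real workloads rarely do.

```python
# Hypothetical DBU ratings per node-hour; look up real values on the pricing pages.
HYPOTHETICAL_DBU_PER_NODE_HOUR = {
    "4-core": 1.0,
    "16-core": 4.0,
}

def dbus_consumed(node_type: str, num_nodes: int, runtime_hours: float) -> float:
    """Total DBUs = per-node hourly rating x number of nodes x hours running."""
    return HYPOTHETICAL_DBU_PER_NODE_HOUR[node_type] * num_nodes * runtime_hours

# Assume the 16-core node finishes the same job 4x faster (perfect scaling).
small = dbus_consumed("4-core", num_nodes=1, runtime_hours=4.0)
large = dbus_consumed("16-core", num_nodes=1, runtime_hours=1.0)
print(small, large)  # 4.0 4.0
```

The larger node burns DBUs four times faster, but under perfect scaling the total is the same; any shortfall from perfect scaling makes the larger cluster cost more overall.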

Pricing = DBUs × Rate

Each DBU has an associated price (in USD) that depends on your subscription plan, cloud, and workload type. For instance, if your job consumes 50 DBUs in a day and your rate is $0.15 per DBU, the Databricks platform fee would be 50 × $0.15 = $7.50 for that day (cloud infrastructure costs are separate, which we’ll discuss later). The key is that Databricks DBU pricing rates vary by the type of workload and the edition (more on editions soon).
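The formula can be expressed directly. This sketch uses Python’s `decimal` module so the $7.50 example comes out exact rather than as a binary floating-point approximation; rounding to whole cents is a display assumption, not a statement about how Databricks rounds invoices.

```python
from decimal import Decimal, ROUND_HALF_UP

def platform_fee(dbus: Decimal, usd_per_dbu: Decimal) -> Decimal:
    """Databricks platform fee = DBUs consumed x $/DBU, shown to the cent.

    Cloud infrastructure charges (VMs, storage) are separate and not included.
    """
    return (dbus * usd_per_dbu).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

print(platform_fee(Decimal("50"), Decimal("0.15")))  # 7.50
```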

Committed-use discounts

While on-demand pricing is straightforward, Databricks also lets you commit to usage levels in exchange for a discount. Enterprises with steady workloads can purchase Databricks commit units (prepaid DBUs) for 1 or 3 years to get a lower effective rate; the larger the commitment, the bigger the discount on the list price. For example, a 12-month commitment of a certain size might yield a 20% discount on DBU costs. This is analogous to cloud reserved instances: you give up some flexibility in exchange for savings. Importantly, these committed-use contracts are flexible across workloads and even across clouds, giving you cloud-agnostic purchasing power.
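Whether a commitment pays off depends on how much of it you actually use. The sketch below is a deliberately simplified model with hypothetical terms: committed DBUs are prepaid at a discount whether or not you use them, and any usage beyond the commitment is billed at the list rate. Real contracts are negotiated and may be denominated in dollars rather than DBUs, so treat the numbers as illustrative.

```python
def on_demand_cost(used_dbus: float, list_rate: float) -> float:
    """Pay-as-you-go: every DBU billed at the list $/DBU."""
    return used_dbus * list_rate

def commit_cost(used_dbus: float, committed_dbus: float,
                list_rate: float, discount: float) -> float:
    """Simplified prepaid commitment (hypothetical terms):
    - committed DBUs are prepaid at a discounted rate, used or not;
    - usage beyond the commitment is billed at the list rate.
    """
    prepaid = committed_dbus * list_rate * (1 - discount)
    overage = max(0.0, used_dbus - committed_dbus) * list_rate
    return prepaid + overage

LIST_RATE = 0.55     # $/DBU, illustrative
DISCOUNT = 0.20      # hypothetical 20% commit discount
COMMITTED = 100_000  # DBUs prepaid for the year (hypothetical)

for used in (70_000, 100_000, 120_000):
    print(f"{used:>7,} DBUs  on-demand ${on_demand_cost(used, LIST_RATE):,.2f}"
          f"  commit ${commit_cost(used, COMMITTED, LIST_RATE, DISCOUNT):,.2f}")
```

Under these assumptions, on-demand is cheaper at 70,000 DBUs ($38,500 vs. $44,000), while the commitment wins at 100,000 and 120,000 DBUs; the break-even point is 80,000 DBUs (the commitment times 1 minus the discount).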

Pricing Tiers: Standard, Premium, and Enterprise

Databricks offers multiple pricing tiers (also called editions or plans) that unlock different feature sets. Historically, these were Standard, Premium, and Enterprise tiers. The Standard tier provides the core Databricks functionality, while Premium adds advanced security and administrative features (like role-based access control, data governance tools, etc.), and Enterprise includes even more governance and security options for the most regulated environments. Each tier comes at a different DBU cost. Premium and Enterprise tiers carry a higher $/DBU rate than Standard, reflecting the additional capabilities.

For example, on Azure Databricks the Standard-tier rate for all-purpose compute is about $0.40 per DBU, whereas Premium is about $0.55 per DBU, roughly a 37.5% increase (exact rates vary by cloud and region). Jobs compute on Azure is about $0.15/DBU on Standard versus $0.30 on Premium, double the Standard rate. The Enterprise tier is typically higher still: all-purpose compute on an AWS Enterprise plan is around $0.65/DBU, versus $0.55 on Premium. Enterprise pricing is usually quoted in specific sales engagements, but it is generally the highest due to the extra features and support.
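The percentage gaps between tiers can be checked with a short script. The rates are the illustrative list prices from this section, not a live price feed.

```python
# Illustrative list rates ($/DBU) quoted in this section; check current pricing pages.
RATES = {
    ("azure", "all-purpose"): {"standard": 0.40, "premium": 0.55},
    ("azure", "jobs"): {"standard": 0.15, "premium": 0.30},
    ("aws", "all-purpose"): {"premium": 0.55, "enterprise": 0.65},
}

def pct_increase(base: float, upgraded: float) -> float:
    """Percentage increase in $/DBU when moving from one tier to the next."""
    return (upgraded / base - 1) * 100

for (cloud, workload), tiers in RATES.items():
    names = list(tiers)  # dicts keep insertion order, lowest tier first
    for lower, higher in zip(names, names[1:]):
        pct = pct_increase(tiers[lower], tiers[higher])
        print(f"{cloud} {workload}: {lower} -> {higher}: +{pct:.1f}%")
```

This prints +37.5% for Azure all-purpose, +100.0% for Azure jobs, and +18.2% for AWS Premium to Enterprise.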

It’s worth noting that pricing tiers are evolving. Databricks has indicated that the Standard tier is being phased out on some clouds. For AWS and Google Cloud, new customers can no longer sign up for Standard: Premium is now the base level, and existing Standard-tier customers on those clouds will be automatically upgraded to Premium by Oct 1, 2025. On Azure, where Databricks is sold as a first-party Azure service, Standard and Premium are still available at the time of writing. Keep these differences in mind when comparing costs; on AWS/GCP you’ll likely be using Premium by default going forward.

Which tier should you choose? Feature needs aside, the tier influences cost: in the Azure examples above, Premium costs about 1.4× Standard for all-purpose compute and 2× for jobs compute, and Enterprise costs more still. Many organizations find Premium’s extra capabilities worth the cost. But if you’re cost-sensitive and don’t need the advanced features (e.g. granular access controls), Standard (where still available) could save money. Just remember that a Premium plan’s DBU price is higher, so every running cluster accrues costs faster than on Standard.

Cloud Differences: Azure Databricks Pricing vs. AWS and GCP

One great aspect of Databricks is that you can run it on your cloud of choice – AWS, Azure, or Google Cloud – with a unified experience. However, Azure Databricks pricing is a little different from Databricks AWS pricing, due to how the service is sold:

- Azure Databricks is offered as an Azure service. Microsoft sets the rates for DBUs on Azure, and you purchase Azure Databricks through your Azure account. Your Azure bill will include Databricks usage (measured in DBUs) as a line item, along with the Azure VM compute costs. The pricing is region-specific and listed on Azure’s official pricing page. For example, in the East US region, Azure’s price might be on the order of $0.40 per DBU for an all-purpose cluster in Standard tier, and around $0.55 per DBU in Premium (these are reference figures; check Azure’s pricing page for exact up-to-date numbers). Microsoft’s pricing page confirms, “A DBU is a unit of processing capability, billed on a per-second basis. The DBU consumption depends on the size and type of instance running Azure Databricks.” In other words, Azure handles the metering and charges by the second, just like Databricks on other clouds.

- Databricks on AWS and GCP is sold directly by Databricks (or via cloud marketplaces). Here, you typically pay Databricks for the DBUs and pay your cloud provider separately for the underlying EC2 or GCE VM instances. Your AWS bill covers EC2, S3, and so on, while your Databricks bill (through your Databricks account or AWS Marketplace) covers platform usage (DBUs). Databricks sets the rates on AWS/GCP, and they are in the same ballpark as Azure’s, though not identical. For instance, the list price for all-purpose compute on AWS Premium is about $0.55/DBU, matching Azure’s Premium all-purpose rate. Jobs compute on AWS Premium is around $0.15/DBU, which matches Azure’s Standard jobs rate and is half of Azure’s roughly $0.30 Premium jobs rate. In short, the numbers are broadly similar but differ by workload type, and on AWS/GCP you’ll see two bills (cloud infrastructure plus Databricks), whereas on Azure they are consolidated.

Geography and region pricing: Note that all cloud pricing varies by region. Databricks is no exception. For example, Databricks SQL Serverless on AWS is priced at $0.70 per DBU in US regions but is higher in Europe (around $0.91/DBU in Ireland/Frankfurt) to reflect cloud cost differences. Azure Databricks rates also differ by region (e.g., running in Azure West Europe may cost a bit more per DBU than East US). Always check the pricing for the region you plan to use.

Cluster and Compute Pricing Breakdown

When you spin up a Databricks cluster (the compute environment for notebooks, jobs, etc.), the cost has two components: cloud compute costs and Databricks costs. The cloud compute cost is simply the VM hourly rate (plus any attached storage) for the instances running in the cluster. The Databricks cost is measured in DBUs for the workload running on those instances.
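Both components scale with node-hours, so a cluster’s cost can be modeled as a small function. In this sketch the $1.00/hour VM price is a hypothetical placeholder (look up your instance type’s actual rate), while the 10 DBUs per node-hour and $0.55/DBU are the illustrative figures from this section’s all-purpose example.

```python
from dataclasses import dataclass

@dataclass
class ClusterCost:
    cloud_vm_usd: float    # billed by the cloud provider (VMs, attached disks)
    databricks_usd: float  # DBU charges

    @property
    def total(self) -> float:
        return self.cloud_vm_usd + self.databricks_usd

def cluster_cost(num_nodes: int, hours: float, vm_usd_per_hour: float,
                 dbu_per_node_hour: float, usd_per_dbu: float) -> ClusterCost:
    """Split a cluster's cost into its cloud and Databricks components."""
    node_hours = num_nodes * hours
    return ClusterCost(
        cloud_vm_usd=node_hours * vm_usd_per_hour,
        databricks_usd=node_hours * dbu_per_node_hour * usd_per_dbu,
    )

# 8 nodes for 1 hour; the VM price is hypothetical.
cost = cluster_cost(8, 1.0, vm_usd_per_hour=1.00, dbu_per_node_hour=10, usd_per_dbu=0.55)
print(f"VMs ${cost.cloud_vm_usd:.2f} + Databricks ${cost.databricks_usd:.2f} = ${cost.total:.2f}")
# VMs $8.00 + Databricks $44.00 = $52.00
```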

Databricks has a few workload categories, primarily: All-Purpose Compute (for interactive notebooks and ad-hoc analysis), Jobs Compute (for scheduled jobs and automated workloads), and Jobs Light (a lower-power job run option, used for example by web terminal sessions or minimalist jobs). Each accrues DBUs at different rates:

- All-Purpose Compute (interactive clusters) carries the highest DBU rates because these clusters are built for collaborative, interactive work such as notebooks. On AWS Premium tier, for instance, the rate is around $0.55 per DBU. If such a cluster ran for an hour on 8 nodes, each consuming 10 DBUs/hour, that’s 80 DBUs × $0.55 = $44 of Databricks cost for that hour (plus the EC2 costs for the 8 nodes). At Azure’s Standard all-purpose rate of ~$0.40/DBU, the same 80 DBUs would cost $32.

- Jobs Compute (automated job clusters) has a lower DBU rate. It is meant for production jobs, so Databricks prices it more cheaply per unit of compute. On AWS Premium, Jobs DBUs cost about $0.15 each; on Azure Premium the rate is higher, about $0.30/DBU (double Azure’s $0.15 Standard jobs rate). Using job clusters for non-interactive workloads can therefore be much more cost-efficient. In the example above, if a jobs cluster also consumed 80 DBUs in an hour, at $0.15 that’s only $12 of Databricks charges (plus VM costs), versus $44 on an all-purpose cluster. This is why it’s best practice to run scheduled pipelines on jobs clusters instead of interactive ones.

- Jobs Light Compute is an even more restricted environment (used for things like lightweight jobs and maintenance scripts). On Azure’s price sheet, Jobs Light was around $0.07/DBU on Standard, $0.22 on Premium. Not all clouds list “light” separately; on AWS, it may be bundled under Jobs. But the idea is that some lightweight tasks can be run at a fraction of the cost of a full, all-purpose cluster.
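To see how much the workload type matters over a month, the sketch below prices the same daily usage as all-purpose versus jobs compute at the AWS Premium rates quoted above. It assumes, as the example does, that the pipeline consumes the same 80 DBUs per day on either compute type; the 30-day month is also an assumption.

```python
# AWS Premium list rates ($/DBU) quoted in this section; illustrative only.
AWS_PREMIUM_USD_PER_DBU = {"all-purpose": 0.55, "jobs": 0.15}

def monthly_dbu_cost(dbus_per_day: float, workload: str, days: int = 30) -> float:
    """Databricks charges for one workload over a month (VM costs excluded)."""
    return dbus_per_day * days * AWS_PREMIUM_USD_PER_DBU[workload]

interactive = monthly_dbu_cost(80, "all-purpose")
scheduled = monthly_dbu_cost(80, "jobs")
print(f"all-purpose ${interactive:,.2f} | jobs ${scheduled:,.2f} | "
      f"saved ${interactive - scheduled:,.2f}")
# all-purpose $1,320.00 | jobs $360.00 | saved $960.00
```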

Databricks SQL Warehouse Pricing

Databricks isn’t just for Spark jobs – it also offers a data warehouse endpoint called Databricks SQL (previously SQL Analytics). Understanding Databricks SQL pricing is important if you’re using it for BI or ad-hoc queries.

A Databricks SQL Warehouse (formerly “SQL endpoint”) is essentially a compute cluster optimized for SQL workloads. Databricks provides it in different “sizes” and capabilities:

- SQL Classic vs SQL Pro: These are feature tiers of SQL warehouses. SQL Classic is a no-frills SQL endpoint, while SQL Pro adds performance and integration features (such as Predictive I/O) on top of Classic’s Photon-based engine. In pricing, SQL Classic is cheaper per DBU than SQL Pro. On Azure, the Standard tier doesn’t support some SQL warehouse types at all (Azure’s pricing page lists “N/A” for SQL Classic under Standard). On AWS, a SQL Classic warehouse runs at about $0.22 per DBU on Premium, notably lower than an all-purpose cluster. A SQL Pro warehouse costs about the same as a Premium all-purpose cluster, around $0.55/DBU in AWS US regions.

- Serverless SQL Warehouse: Databricks offers a Serverless SQL option, which is fully managed. In this case, you do not pay separately for cloud VM instances; the cost is bundled into a higher DBU price. The advantage is that you get instant auto-scaling and optimized resource usage without managing clusters. Serverless SQL costs about $0.70 per DBU in US regions, and this rate already includes the underlying compute. For example, if you run a query on a serverless SQL warehouse that consumes 10 DBUs, you’d be charged 10 × $0.70 = $7.00, with no separate AWS or Azure VM charge. In other regions the rate is higher (e.g. ~$0.91/DBU in the EU) to account for cloud costs. Serverless SQL can be cost-effective for sporadic or bursty workloads since you’re not keeping clusters running, but the per-DBU rate is higher to cover the convenience of fully managed infrastructure.

To put it together, Databricks SQL warehouse pricing ranges from low (classic SQL on dedicated VMs, but you pay VM separately) to higher (serverless SQL with everything included). If you have steady, heavy SQL query loads, a classic or pro warehouse on reserved VMs might be cheaper (when combined with cloud instance savings plans). If you have infrequent or unpredictable queries, serverless may save money by not running idle.
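The “idle time” argument can be turned into a rough break-even calculation. A minimal sketch under loudly simplified assumptions: both warehouse types have the same hypothetical 10 DBU/hour rating, the Pro warehouse stays up for 10 provisioned hours a day with a hypothetical $1.00/hour of VM cost, and serverless bills only for busy hours (ignoring auto-stop idle windows and any reserved-instance discounts). The $0.55 and $0.70 rates are the AWS US figures from this section.

```python
def pro_daily_cost(provisioned_hours, dbu_per_hour, pro_usd_per_dbu, vm_usd_per_hour):
    """Classic/Pro warehouse: DBUs plus separately billed VMs for every provisioned hour."""
    return provisioned_hours * (dbu_per_hour * pro_usd_per_dbu + vm_usd_per_hour)

def serverless_daily_cost(busy_hours, dbu_per_hour, serverless_usd_per_dbu):
    """Serverless: one bundled rate, billed only while the warehouse is busy (simplified)."""
    return busy_hours * dbu_per_hour * serverless_usd_per_dbu

# Hypothetical inputs: 10 DBU/hour warehouse, up 10 hours/day, $1.00/hour of VMs.
DBU_PER_HOUR, PROVISIONED, VM_PER_HOUR = 10, 10, 1.00
PRO_RATE, SERVERLESS_RATE = 0.55, 0.70  # $/DBU

pro = pro_daily_cost(PROVISIONED, DBU_PER_HOUR, PRO_RATE, VM_PER_HOUR)
break_even = pro / (DBU_PER_HOUR * SERVERLESS_RATE)
print(f"Pro: ${pro:.2f}/day; serverless is cheaper below {break_even:.1f} busy hours/day")
print(f"Serverless at 4 busy hours: ${serverless_daily_cost(4, DBU_PER_HOUR, SERVERLESS_RATE):.2f}/day")
# Pro: $65.00/day; serverless is cheaper below 9.3 busy hours/day
# Serverless at 4 busy hours: $28.00/day
```

With these inputs, serverless wins unless the warehouse is busy for nearly all of its provisioned hours, which matches the rule of thumb above.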

One more note: As of 2025, new features like Materialized Views and Streaming Tables in Databricks SQL may incur additional charges (billed via serverless DLT costs). Always keep an eye on the documentation for such nuances, as using certain advanced SQL features could multiply the effective DBUs (for example, some features apply a 2× DBU multiplier). These details are beyond the basics, but it’s good to be aware that not all DBUs are 1:1; some serverless features use multipliers.
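Multipliers are easy to overlook when reconciling a bill. A minimal sketch, assuming hypothetical usage records in which each line carries its raw DBUs and a multiplier; pairing the 2× multiplier with a materialized view refresh is illustrative, not a quoted rate.

```python
# Hypothetical usage records: (feature, raw DBUs, multiplier).
records = [
    ("serverless SQL query", 10.0, 1.0),
    ("materialized view refresh", 10.0, 2.0),  # illustrative 2x multiplier
]

def billed_dbus(records) -> float:
    """Effective DBUs = sum of raw DBUs x per-feature multiplier."""
    return sum(raw * mult for _, raw, mult in records)

print(billed_dbus(records))  # 30.0
```

The same 20 raw DBUs bill as 30 effective DBUs, so a cost estimate that ignores multipliers would be 33% low in this example.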

Model Serving and Other Serverless Features

Beyond clusters and SQL, Databricks has expanded into Serverless Model Serving and other AI-centric services. These have their own pricing, also measured in DBUs:

- Model Serving Pricing: Databricks Model Serving lets you deploy machine learning models as endpoints. Pricing is again per DBU and, because the service is serverless, it includes the cloud instance cost. The rate for CPU model serving is around $0.07 per DBU (dollars per DBU, not per hour of uptime). That is much lower than the $0.70 we saw for serverless SQL, but the two are not directly comparable: each product meters DBUs differently, so an endpoint may accrue more DBUs per hour. Because the $0.07/DBU rate covers the VM, you see only one charge. GPU serving costs more because the endpoint consumes more DBUs per hour: a small GPU instance might be rated around 10.48 DBUs/hour, which at $0.07/DBU comes to about $0.734/hour, still reasonable for a T4 GPU. Larger multi-GPU serving instances consume many more DBUs (hundreds per hour), according to Databricks’ documentation. You’re billed by the second for the time your endpoint is running and processing requests.

- MosaicML / AI Functions: Databricks offers foundation model serving (through the MosaicML integration and others). These services can have specialized pricing, often measured per million tokens for inference or per hour for model runtime. For instance, Mosaic AI serving of a particular large model might be rated at 7.143 DBUs per 1M input tokens. Those details are highly specific, but if you’re using these services, be aware that their costs add up differently, often tied to usage metrics such as tokens or images generated. Always check the latest Databricks documentation for these services’ pricing, as it is updated frequently.
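Both metering styles can be estimated with the same DBU arithmetic. This sketch uses the 10.48 DBU/hour GPU rating and the 7.143 DBUs per 1M input tokens figure from above; applying the $0.07/DBU model serving rate to token-based pricing is an assumption, and the 730-hour figure assumes an endpoint that never scales down for a month.

```python
def endpoint_cost(dbu_per_hour: float, active_hours: float, usd_per_dbu: float = 0.07) -> float:
    """Serverless endpoint: DBU rating x active hours x $/DBU (VM cost is bundled)."""
    return dbu_per_hour * active_hours * usd_per_dbu

def token_cost(tokens: int, dbus_per_million: float, usd_per_dbu: float = 0.07) -> float:
    """Token-metered serving: millions of tokens x DBUs per 1M tokens x $/DBU."""
    return tokens / 1_000_000 * dbus_per_million * usd_per_dbu

print(f"GPU endpoint, 1 hour: ${endpoint_cost(10.48, 1):.3f}")       # $0.734
print(f"GPU endpoint, 730 hours: ${endpoint_cost(10.48, 730):.2f}")  # $535.53
print(f"20M input tokens: ${token_cost(20_000_000, 7.143):.2f}")     # $10.00
```

An always-on endpoint is billed for every hour it runs, whereas token pricing tracks actual traffic, so low-volume workloads may favor token-based options.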

Using the Databricks Pricing Calculator

To estimate costs tailored to your specific scenario, you should leverage the Databricks pricing calculator. Databricks provides a comprehensive online calculator for AWS, Azure, and GCP that lets you plug in your planned workloads and get cost estimates. Using the calculator, you can:

- Select your cloud and region: Pricing can vary, so choose (for example) Azure West Europe vs AWS Oregon, etc., to see region-specific numbers.

- Choose workload types: You can input how many hours of all-purpose cluster, how many jobs DBUs, how many SQL endpoints, etc. The calculator knows the rates for each scenario on each cloud.

- Pick instance types and sizes: For clusters, you can specify, say, 4 × r5d.2xlarge instances for 10 hours a day. The calculator factors in both the cloud VM cost (if applicable) and the DBU cost.

- Include any reserved commitment discounts: On Azure, for instance, you might toggle an option to apply Azure Hybrid Benefit or reserved instance pricing for the VMs. For Databricks, if you have a commit contract, you’d effectively use your discounted DBU rate.

The output will show an estimated monthly cost breakdown, which is incredibly useful for budgeting. This tool takes into account all the factors we’ve discussed – cloud, region, tier, instance size, workload type – and computes an estimate. Keep in mind it’s an estimate; actual costs can vary with usage patterns (for example, if your cluster autoscales up and down, or if jobs finish faster than expected). But it’s a great starting point to understand your potential spend.
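For a quick back-of-the-envelope check before opening the calculator, a minimal sketch can sum several workloads into a monthly estimate. Everything here is hypothetical: the VM price and DBU rating attached to the 4 × r5d.2xlarge line are made-up placeholders, the second workload is invented for illustration, and the optional discount models a commit contract applied to DBUs only.

```python
from dataclasses import dataclass

@dataclass
class LineItem:
    name: str
    nodes: int
    hours_per_day: float
    days: int
    vm_usd_per_hour: float    # hypothetical VM price per node
    dbu_per_node_hour: float  # hypothetical DBU rating per node
    usd_per_dbu: float

def monthly_estimate(items, dbu_discount: float = 0.0) -> dict:
    """Sum VM and DBU costs; an optional commit discount applies to DBUs only."""
    vm = dbu = 0.0
    for item in items:
        node_hours = item.nodes * item.hours_per_day * item.days
        vm += node_hours * item.vm_usd_per_hour
        dbu += node_hours * item.dbu_per_node_hour * item.usd_per_dbu
    dbu *= 1 - dbu_discount
    return {"vm": vm, "dbu": dbu, "total": vm + dbu}

items = [
    # 4 x r5d.2xlarge, 10 hours/day for 30 days; VM price and DBU rating are made up.
    LineItem("scheduled ETL (jobs)", 4, 10, 30, 0.50, 1.5, 0.15),
    LineItem("analyst notebooks (all-purpose)", 2, 8, 22, 0.50, 1.5, 0.55),
]
est = monthly_estimate(items, dbu_discount=0.20)
print({k: round(v, 2) for k, v in est.items()})
```

Like the official calculator, this ignores autoscaling and early job completion, so treat its output as a starting point rather than a forecast.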

Conclusion

Databricks pricing ultimately boils down to paying for the compute you use, measured in a cloud-agnostic unit (DBUs) that is charged at different rates depending on workload and tier. To recap key points:

- Pay-as-you-go, per-second billing: You’re charged only for what you use, with no upfront fees. This gives flexibility to scale clusters up and down freely.

- DBUs as the meter: All workloads consume Databricks Units. How many DBUs and the price per DBU depend on cluster size, workload type, cloud, and subscription tier.

- Cloud differences: Azure Databricks is sold via Azure (with Azure-specific rates), whereas on AWS/GCP you pay Databricks directly. Account for cloud VM costs in addition to DBUs, except in serverless offerings where they’re bundled.

- Workload types: Interactive clusters (all-purpose) have higher DBU costs, while job clusters are cheaper. Use the right type to optimize costs. SQL warehouses and model serving have their own pricing nuances (with serverless options bundling compute cost at a higher DBU rate).

- Pricing tiers: Premium and Enterprise plans cost more per DBU than Standard, but may be required for certain features (and Standard is being phased out on some clouds). Align your tier with your needs – don’t pay for Enterprise if Standard would suffice, but also note that Standard may not be available in all cases going forward.

- Estimate and monitor: Always run the numbers with the Databricks pricing calculator before deploying large workflows, and use Databricks cost reporting (and cloud cost management) to track actual usage. If costs are higher than expected, check if clusters are over-provisioned or if interactive clusters are being used when jobs would do, etc.

With a solid grasp of these concepts, you can approach Databricks pricing with confidence. It’s a powerful platform, and understanding the cost structure will help you maximize its value while staying within budget. Happy data analytics, and may your Databricks pricing model always be efficient and predictable!

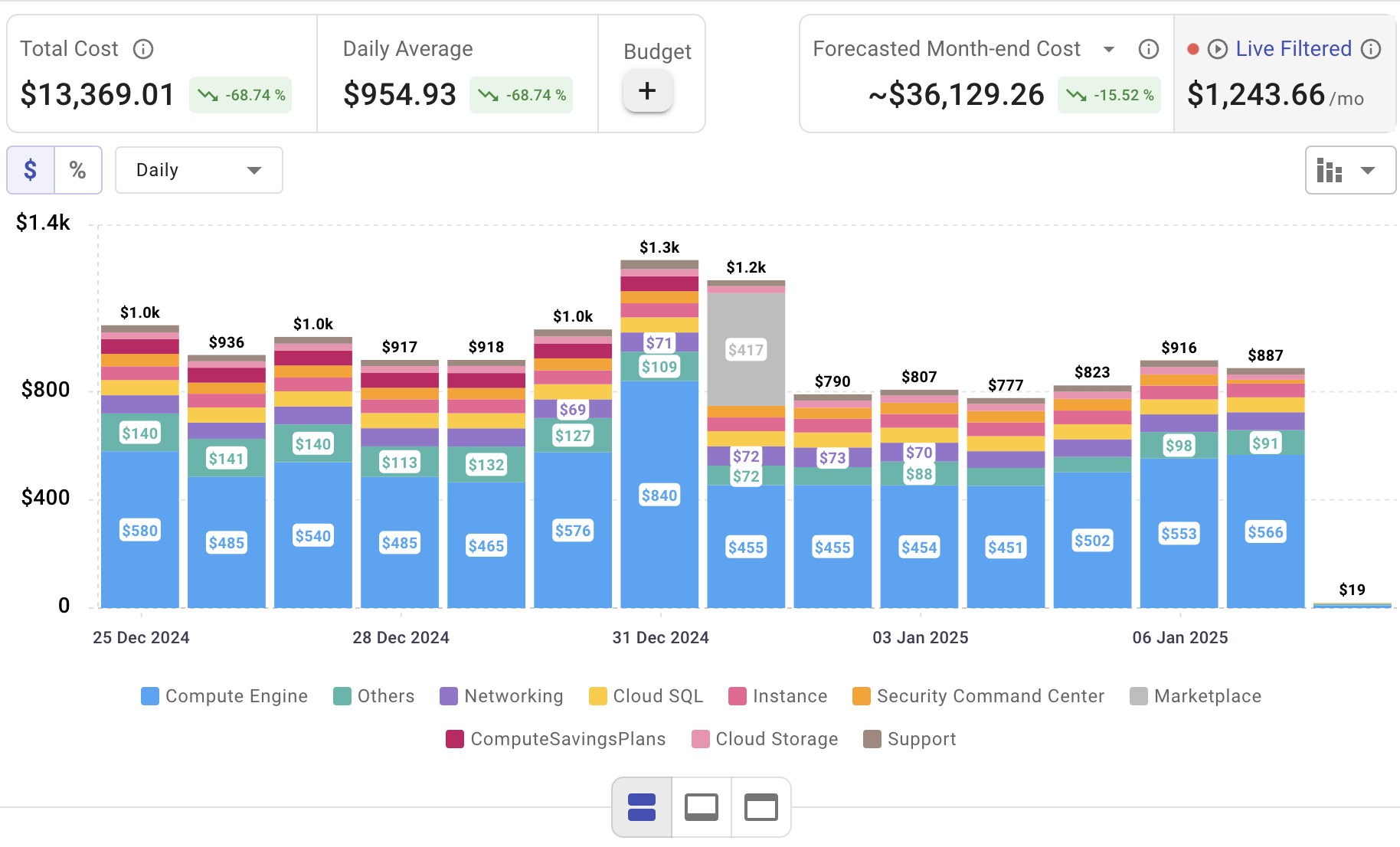

Optimize Your Cloud Costs with Cloudchipr

Spinning up Databricks clusters is simple—keeping DBU spend under control is not. Cloudchipr unifies AWS, Azure, and GCP cost data in one dashboard, flags idle or oversized resources, and highlights concrete savings on clusters, SQL Warehouses, and storage. Cut waste early and keep every cloud dollar working for you.

Key Features of Cloudchipr

Automated Resource Management:

Easily identify and eliminate idle or underused resources with no-code automation workflows. This ensures you minimize unnecessary spending while keeping your cloud environment efficient.

Receive actionable, data-backed advice on the best instance sizes, storage setups, and compute resources. This enables you to achieve optimal performance without exceeding your budget.

Keep track of your Reserved Instances and Savings Plans to maximize their use.

Monitor real-time usage and performance metrics across AWS, Azure, and GCP. Quickly identify inefficiencies and make proactive adjustments, enhancing your infrastructure.

Take advantage of Cloudchipr’s on-demand, certified DevOps team that eliminates the hiring hassles and off-boarding worries. This service provides accelerated Day 1 setup through infrastructure as code, automated deployment pipelines, and robust monitoring. On Day 2, it ensures continuous operation with 24/7 support, proactive incident management, and tailored solutions to suit your organization’s unique needs. Integrating this service means you get the expertise needed to optimize not only your cloud costs but also your overall operational agility and resilience.

Experience the advantages of integrated multi-cloud management and proactive cost optimization by signing up for a 14-day free trial today, no hidden charges, no commitments.
